See the latest book content here.

5 Convolutional Neural Networks

Consider first this cute video:

In the video we see that “Chaser” the dog can recongize a thousand stuffed animals based on hearing their name. It requires him to recognize the spoken name, recognize the image, and make the associated connections. This type of thinking task is very topical for this unit where we’ll see how convolutions, mixed with artificial neural networks can be very powerful. They allow autonomous systems can be trained to classify images, and much more.

This resource by A. Amidi and S. Amidi provides a useful summary of some of the material that we cover here. Here is the interactive version.

We begin with some background about convolutions and then move onto neural networks to see how convolutions interpaly with neural networks.

5.1 Background on convolutions

A convolution can also be defined as an operation on two functions. This is often the case when considering continuous time convolutions as is done in electrical engineering, control theory, and the study of differential equations. However in our context, we mostly focus on vectors, matrices, and tensors. A convolution is an operation on two vectors, matrices, or tensors, that returns a third vector, matrix, or tensor.

5.1.1 Convolutions in LTI systems

LTI systems are concepts from control theory and signal processing that have influenced machine learning an led to the development of convolutional neural networks. To get a feel for the importance of convolutions lets first consider linear time invariant (LTI) systems where we focus on scalar valued, discrete time systems (e.g. ).

An LTI system, denoted , maps an input sequence to an output sequence (this is called a system), and has the following two properties:

Linearity: For input signals and and scalars and ,

Time invariance: Assume the input signal has output . Take now the time shifted input then output of , denoted , is the time shifted output of the original input. Namely .

It turns out that many operations on signals are well described by LTI systems. Further, it turns out that the operation of any LTI system on an input signal is equivalent to a convolution of the input signal with a special signal associated with the system, denoted the impulse response. We describe both convolutions and the impulse response shortly.

Observe that is the time shifted version of and is at and for any other . Consider any signal . It can be represented as, where is the sequence such that and for , .

We can now use this representation together with the LTI properties: Here we used the linearity property since are constants with respect to the time variable . Now denote the output of as . This is called the impulse response. Then we due to time invariance . We thus arrive at, Analogous results exist for continuous time systems, where the impulse response requires considering the generealized function, , called the Dirac delta function. In that case the convolution is determined via, This is called the convolution of and and is denoted . Hence we see that convolutions arise naturally when considering linear time invariant systems because the operation of an LTI system on any signal is simply given via the convolution of the signal with the system’s impulse response.

This video illustrates the operation of a (discrete time) convolution operation. The common phrase is "flip, shift, and add’.

It is easy to see that the convolution operation is commutative. That is . Simply do this via a change of variables (or )

Now note that if we were to define the convolution operation differently (here denoted ), as, then it no longer holds that . That is, the operation which is actually the convolution with the time-reversed signal of is no longer commutative (in signal processing this is sometimes called “the correlation”).

This argument about a ‘flipped’ (reversed) signal holds for image and higher dimensional convolutions as well. Commutativity is important in linear systems and basic signal processing as it means that the order in which convolutions is applied (when applying multiple convolutions) is inconsequential and the convolutions can be switched around if desired. However, in the context of neural networks it is not important. This is because we typically apply non-linear operations after a convolution, so there is no need to chain multiple convolutions. Hence in the neural networks context it doesn’t matter if we apply or and in fact, the ‘convolutions’ we use are typically as opposed to . The `filter’ is a learned parameter and hence it doesn’t matter if we learned or the reversed signal.

5.1.2 Convolutions in probability

Convolutions also appear naturally in the context of probability. This is when considering the distribution of a random variable that is a sum of two independent random variables. For example consider the discrete valued independent random variables and and define . For example, say that is a payoff yielding , , or with probabilities , , and respectively. Further say that is the payoff based on a fair die throw yielding payoffs or , each with probability . What is then the probability distribution of the total payoff ?

Note that a probability mass function is defined for all integer values and is zero for values outside of the support of the distribution. This allows to extend the summation outside of . Denote now the probability mass functions of , , and (sometimes called probability density functions even in this discrete case) via , , and respectively. Then we see that,

Note that is non-zero for . For example,

Or,

Or Exercise: Work out the distribution of for all other values of . We thus see that the convolution operation appears naturally in the context of probability.

5.1.3 Multiplication of polynomials and the convolution matrix

The convolution also arises naturally when multiplying polynomials. Consider for example the polynomial, and the polynomial, Now set . This is a polynomial of degree with coefficients as follows:

If we were to set for and similarly for then we could denote, However it is also useful to consider the finite sums and denote, where the sum is over pairs with and further requring and .

In this context we can also view as a vector of length , as a vector of length and as a vector of length . We can then create a matrix that encodes the values of such that, . More specifically,

In general a Toeplitz matrix is a matrix with constant entries on the diagonals. This shows that the convolution of with is a linear transformation and the matrix of that linear transformation is . This is called a Toeplitz matrix.

Since convolutions are commutative operations we can also represent the output as where is a matrix in this case,

5.2 Convolutional layers in neural networks: Key idea

In signal processing, filters are specified by means of LTI systems or convolutions and are often hand-crafted. One may specify filters for smoothing, edge detection, frequency reshaping, and similar operations. However with neural networks the idea is to automatically learn the filters and use many of them in conjunction with non-linear operations (activation functions).

As an example consider a neural network operating on sound sequence data. Say the input is of size and is based on a million sound samples (at samples per second this translates to 125 seconds or just over 2 minutes of sound recording). Assume we wish to classify if the sound recording is human conversation (positive), or other sounds (negative).

A possible deep neural network architecture may involve several layers and result in a network such as,

Such a network passes the input x through a first layer with ReLu activation, a second layer with ReLu activation, and finally a third layer with sigmoid activation returning eventuall for a positive (say human conversation) and for a negative sample. Here is a matrix with a single row and is a bias of length .

The best dimensions of the inner layers (and associated weight matrices and bias vectors) may depend on the nature of the problem and data at hand, however say we were to construct such a network and end up with the following:

- has dimension and has dimension . This means the first hidden layer has neurons.

- has dimension and has dimension . This means the second hidden layer has neurons.

- has dimension and has dimension . This means the output layer has a single neuron.

The total number of parameters of this network is then

In today’s architecture one can train such neural networks, however this is a huge number of parameters for the task at hand. In general, it is a very wasteful and inefficient use of dense matrices as parameters. Just as importantly, such trained network parameters are very specific for the type of input data on which they were trained and the network is not likely to generalize easily to variations in the input.

Note that the use of Toeplitz matrices is for mathematical purpuses and not implementation purpuses. That is, when implementing the network we don’t code (and allocate memory) for Toeplitz matrices. We rather encode the convolutions in the minimal memory footprint that they require. An alternative is to use convolutions layers where is replaced by a Toeplitz matrix, say , and is replaced by a Toeplitz matrix . Here and represent filters, similar to the impulse response presented above. In this case we can still have with the same dimension as and similarly can be set to have the same dimension as . However the number of parameters is greatly reduced as instead of and we only need the the filters and . Each of these filters can be of length , , , or similar. In any case, it is a much lower number of parameters.

The main principles that justify convolutions is locality of information and repetion of patterns within the signal. Sound samples of the input in adjacent spots are much more likely to affect each other than those that are very far away. Similarly, sounds are repeated in multiple times in the signal. While slightly simplistic, reasoning about such a sound example demonstrates this. The same principles then apply to images and other similar data.

5.2.1 Multiple channels

Convolutional neural networks present an additional key idea: multiple channels. The idea is that in each layer, we don’t keep a single representation of the transformed input (voice in this case) via neurons but rather keep a collection of representations, each resulting from the output of different filters.

The bias terms are typically scalars with one scalar bias per filter. The same holds for the general image CNNs that we develop below.

For example, in the input we have . We then operate on via the filters , , and . Here the subscript indicates the first layer whereas the superscripts , , and indicate the channels. Then in the first hidden layer we have,

This allows to use three different types of filters in the first layer, each passing through its own non-linearity. This intermediate representation (in layer 1) via the three channels and can then be further processed. When we do so, we apply convolutions filters that operate on all channels jointly. That is, the channels of layer (of which we may have again, or some other number) are each functions of the three channels of layer . We can represent this via, Later, when we move the the common CNN used for images, the filter will actually be a three dimensional filter (or volume filter). where is the index of the channel in hidden layer 2. In this expression note that we use the matrix to represent the linear operation based on the two dimensional filter . We describe such two dimensional convolutions below, and also generalize to three dimensions.

With each layer we then continue with for where denotes the number of channels in layer .

To reason about the context of channels in the sound example, consider a slightly different example. Assume the sound input data is now in stereo (left and right sound channels). This is analegous to the common image input situation discussed below where there are three input channels (red, green and blue). Now at the first convolutional layers we may have 8 channels and this means 8 filters. Each of the filters operates on both the left and the right input channels. The meaning of these 8 layers don’t directly map to ‘left’ and ‘right’ as they did in the input channels. They rather map to sound representations that involve both the input channels and have some meaning in the context of the whole network. We may then follow with a second convolutional layer that has filters (and thus results in 4 channels). Now each of these filters will involve each of the 8 channels of the first layer. This can then continue for further more layers. After these convolutional layers, one or more fully connected layers are used to ‘connect’ the features detected by the convolutional layers.

5.3 Two dimensional convolutions and extensions

Note that there is also an application of CNNs where sound data is represented as an image and then processed as a spectogram image. See for example this blog post. This is different from the elementary presentation we had above which was for the purpuse of thinking of one dimensional convolutions as a first step towards understanding CNNs.

As motivated by the discussion above, even when we consider sound data, two dimensional convolutions arise due to channels. In the case of image data, two dimensional convolutions pop up naturally from the start, and when considering channels we even need volume (3 dimensional) convolutions. We now explain these concepts.

Odd convolution kernels are more natural because they focus on a “center pixel.”

As an illustration consider a monocrhome image as an matrix with the entry of the matrix for and . Consider now a convolution kernel which is taken as an matrix (with typically a small integer such as , , etc..). The (two dimensional) convolution of the image with the kernel is another image matrix where each is obtained via, where we can initially just think of and as and , however with certain variants we can modify these index functions. We also treat as for and that are out of range.

The inner product of two vectors and is clearly . In the context of matrices or volumes the inner product of and is where converts the matrix or volume into a vector. It is important that the dimensions of and are the same and then corresponding entries are multiplied and the result is summed up to yield a scalar. Often will be part of an input image (or volume) and will be the convolution filter of the same dimension.

Hence the output of such a two dimensional convolution at index and “covers” a box in the input image at the neighborhood of with the kernel . It then carries out the vectorized inner product of and the covered area. See this illustrative animation of multiple such convolutions as used in a convolutions neural network:

5.3.1 Stride, padding, and dilation

The specific dimensions of the output image and the specific way of determining the neighborhood of via the functions and can vary. There are two common parameters which play a role, stride and padding. The stride parameter, , indicates the jump sizes with which the convolution kernel needs to slide over the image. A default is meaning that the kernel moves one pixel at a time, however or slightly higher is also common. The padding parameter, indicates how much zero padding should be placed around the input image.

To see these in action lets actually return to a one dimensional example with a very short audio signal of length . Assume that Assume we have a convolution kernel . This is a moving average convolution. In the basic form, if we again think of and as coefficients of polynomials then we have an th degree polynomial multiplied by a nd degree polynomial so we get a polynomial of degree (having coefficients). Notice that the the length of is . In this case we implicitly had the maximal padding possible , which is one less than the size of the convolution kernel. We also implictly had a stride of . We can think of this convolution as sliding the filter as follows for creating for :

Note that the zeros (two the left and two the right) of the sequence are the padding.

Remember that a convolution actually needs to reverse the kernel. However in this example our kernel is the same even when reversed. Further, in neural networks we typically don’t worry about reversing the kernel.

Now in case we were to avoid using any padding () the result would have been of length , namely,

This process of ‘getting a smaller signal’ is sometimes called downsampling. An alteratnive way to using stride is max-pooling described below. The above two cases were for the case of a stride meaning that we slide the convolution kernel by step each time. However, we sometimes wish to get a (much) smaller output signal (and or run much faster). In this case we can slide the kernel by a bigger stride. For example lets consider now and also demonstrate in this case.

This formula is a key formula to consider when constructing a CNN architecture. It allows to determine the output size from a convolutional layer. In this case the output is of length . In general the length of the output follows, where is the length of the input signal and is the length of the filter. For the first case above ( and ) we have: For the second case,

Note that in certain (less common) cases we may have uneven padding. In this case the term in the formula needs to be replaced by .

And for the third case,

In practice we almost always use square filters and square images. Now in the case of a two dimensional convolution, this formula still holds however is replaced by either the horizontal and vertical dimension of the image and in cases where the filter is not square, similarly with .

In addition to stride and padding there is a third element which is sometimes introduced: dilation. The idea of dilation is to “expand-out” the convolution by adding inserting holes within the kernel and making the kernel wider. The dilation parameter is by default and then there is no dilation. See for example this blog post. However, dilation is not as popular.

5.3.2 Some example two dimensional filters

So far we have spoken about convolutions but with the exception of the averaging kernel used above haven’t shown useful kernels. In signal processing and classic image processing we often design the convolutional kernals for the task at hand. We can do smoothing, sharpening, edge detection, and muhc more. This video presents a variety of examples:

The point is that we can “hand craft” kernels to carry out the task we are interested in. However, with CNN’s the network learns automatically about the best kernels for the task at hand.

5.3.3 Beyond two dimensions

Two dimensional convolutions are important for image processing of monochrome images, however with convolutional neural networks we often go further. We now focus on the third dimension (the channel dimension). Note that the discussion can go further to arbitrary dimensions and indeed in special situations such as medical imaging data there are more than three dimensions at play, however for our purpuses we can stick with three dimensions.

For example in the input image, we have and as the dimensions (resolution) of the image and we have for color images. The three dimensions are width (horizontal), height (vertical), and number of channels (depth). That is, the input objects are -tensors of dimensions , , and .

In general one could imagine a convolution operation in which a -tensor of dimension ‘moves around’ to create the output convolution and we can have , , and . This would in general create an output volume (so volume would be converted to volume). However, in all practical CNNs, we have that while, and hold, the third dimension is an equality: . That is, our convolutions move throughout the ‘full depth’ of the input volume. Hence we always create a matrix out of the input tensor (and we do it repeatadly to create multiple such matrices/channels).

As an example, consider the first convolutional layer which operates on the input image for which (red, green, and blue). If we are doing convolutions then . We then ‘slide’ this kernel over the input image, each time multiplying over all channels. That is each time, doing an inner product of two vectors of length .

5.3.4 One by one convolutions

If we were to consider a monochrome image, then there is no reason to carry out a convolution with a filter. It would simply be equivalaent to scaling the whole image by a constant. However, when considering volume convolutions as described above, a convolution ‘mixes through’ all of the input channels. So again, if for example considering the input layer where , a convolution would be a way to make a monochrome image based on a linear combination (given by the convolution kernel) of R, G, and B. The idea then continue to deeper layers where is not necessarily (and the channels don’t have direct physical mappings. Still, one by one convolutions are often sensible.

5.4 Putting the bits together for a CNN

Now that we understand convolutions, image convolutions, and volume convolutions, we are almost ready to construct convolutional neural networks (CNN). Mathematically a neural network is simply a composition of affine mappings, and non-linear (activation) mappings, where we alternate between an affine mapping (weights and biases) and an activation function (applied element wise). A CNN is effectivly just the same, only that some of the layers involve convolutional affine mappings which unlike dense (fully connected) mappings have fewer parameters and importantly reuse the parameters in a spatially homogeneous manner. There is only one additional key ingredient, max pooling. We describe this now.

5.4.1 Max pooling

The max pooling operation is a simple and effective way for downsampling. The operation takes an input channel, say of width and height and partions it into adjacent pixels of some specified size (often ). It then takes the maximum of each pixel and returns this as output. Hence in the case, the resulting output image is of width and .

The key effect of max pooling is that the most dominant pixel out of each four neighbouring pixels (in case of ) is selected and the others ignored. Keep in mind, that in inner-layers of convolutional neural networks the meaning of a ‘pixel’ is much closer to some ‘feature’ of something that is happening in the image.

Note that a max pooling operation is refered to by some authors and practionars as a ‘layer’. However we shall refer to it as ‘part of’ a convolutional layer. (Note that some even refer to the activation function as a layer). Like activation functions, max pooling does not have trained parameters. As an example, assume we have a convolutional layer that results in images of , and channels. If we then run a max pooling operation on this layer (say with pooling), then we end up with , and .

Important to note that max pooling is not run on the whole volume of channels but only on each channel seperatly. One may introduce stride and padding to max-pooling as well however this isn’t common.

5.4.2 Other elements: Batch normalization and Dropout

The basic elements that exist in neural networks such as batch normalization and dropout can still be used in CNNs. Batch normalization is often useful in convolutional layers, but dropout should generally not be used for convolutional layers but only for dense layers in the CNN.

5.4.3 The ‘typical’ architecture

The typical standard way to construct a CNN is to have several convolutional layers, sometimes with max pooling and sometimes without, and then have one or several dense layers just before the output layer. Further, in certain cases, dense layers can be used in the middle of the network.

As the input features flow through more and more hidden convolutional layers, more and more refined image features are detected. It will often turn out that the trained filters on initial layers will be used for rough purpuses such as edge detection. Then moving deeper into the network, the trained filters will combine properties of previous features to create more refined elements. Then towards the output layer, combining all these features with dense layers works well.

Here is are two neural network models designed to work on classifing monochrome images for 1 of 10 labels (e.g. MNIST). In this case the models are specified via Flux.jl. The full code is here. The first model, model1 is fully connected network. The second model, model2 has two convolutional layers.

You don’t have to know Julia or Flux.jl to understand the architecture of these models. The first model has hidden layers and an output layer. There are neurons in the first hidden layer and in the second hidden layer. The (hidden) activiation functions are ReLu and Tanh.

The second model has two convolutional layers. The first has convolutions and the second has convolutions. Both layers employ max pooling. The first layer has filters (and thus creates channels). The second layer has filters and thus creates channels. The convolutional layers are followed by a fully connected layer with neurons for which the weight matrix is (here comes from the previous convolutional layer).

#Julia code

using Flux

model1 = Chain(

flatten,

Dense(784, 200, relu),

Dense(200, 100, tanh),

Dense(100, 10),

softmax)

model2 = Chain(

Conv((5, 5),1=>8,relu,stride=(1,1),pad=(0,0)),

MaxPool((2,2)),

Conv((3, 3),8=>16,relu,stride=(1,1),pad=(0,0)),

MaxPool((2,2)),

flatten,

Dense(400, 10),

softmax)Question: How many parameters are in model1?

Answer: .

Question: What is the size of the output of the first layer in model2?

Answer: . Hence prior to max-pooling we have and after max-pooling .

Question: What is the size of the output of the second layer in model2?

Answer: . Hence prior to max-pooling we have and after max-pooling .

Question: Where does the number 400 come fropm and what does it mean?

Answer: The convolutional layer prior to the dense layer has . Hence the weight matrix of the dense layer operates on inputs of size .

Question: How many parameters are in model2?

Answer:

- First layer:

- Second layer:

- Third layer:

- Total: .

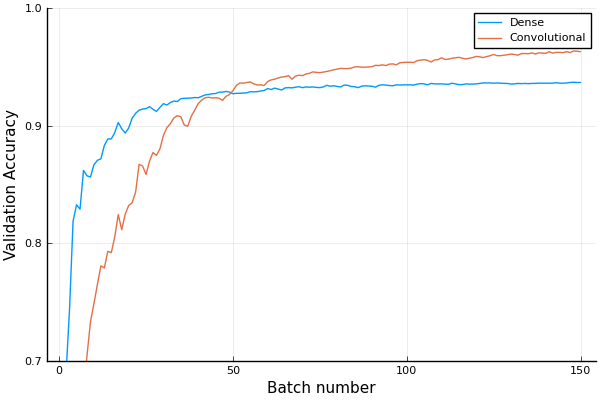

Here is a plot of the validation accuracy for both model1 and model2 when trained on MNIST images (and validated on an additional ). This is with the ADAM optimizer with a learning rate of and batch sizes of .

In this case model2 appears better and this agrees with the general fact that convolutional networks train faster and yield better performance for image data.

5.4.4 An ‘industrial strength’ architecture

Here is the VGG19 architecture.

using Metalhead

#downloads about 0.5Gb of a pretrained neural network from the web

vgg = VGG19();

for (i,layer) in enumerate(vgg.layers)

println(i,":\t", layer)

endMore on these common archictures below. For now just consider the structure of this architecture which has layers.

Observe that the number of layers with trained parameters is (ignore max pooling, dropout, softmax, and #44 which stands for a reshape function). See the source code.

The number of neurons is determined by the flow in the first 16 convolutional layers and by the fact that the input is a (image). However the number of neurons is more arbitray.

The number of output neurons is as this network is designed to classify one of labels as specified by the image net challange.

When you do ‘transfer learning’ (more on this in Unit 6), you may use a pretrained model for the convolutional layers and retrain the dense layers at the end.

1: Conv((3, 3), 3=>64, relu)

2: Conv((3, 3), 64=>64, relu)

3: MaxPool((2, 2), pad = (0, 0, 0, 0), stride = (2, 2))

4: Conv((3, 3), 64=>128, relu)

5: Conv((3, 3), 128=>128, relu)

6: MaxPool((2, 2), pad = (0, 0, 0, 0), stride = (2, 2))

7: Conv((3, 3), 128=>256, relu)

8: Conv((3, 3), 256=>256, relu)

9: Conv((3, 3), 256=>256, relu)

10: Conv((3, 3), 256=>256, relu)

11: MaxPool((2, 2), pad = (0, 0, 0, 0), stride = (2, 2))

12: Conv((3, 3), 256=>512, relu)

13: Conv((3, 3), 512=>512, relu)

14: Conv((3, 3), 512=>512, relu)

15: Conv((3, 3), 512=>512, relu)

16: MaxPool((2, 2), pad = (0, 0, 0, 0), stride = (2, 2))

17: Conv((3, 3), 512=>512, relu)

18: Conv((3, 3), 512=>512, relu)

19: Conv((3, 3), 512=>512, relu)

20: Conv((3, 3), 512=>512, relu)

21: MaxPool((2, 2), pad = (0, 0, 0, 0), stride = (2, 2))

22: #44

23: Dense(25088, 4096, relu)

24: Dropout(0.5)

25: Dense(4096, 4096, relu)

26: Dropout(0.5)

27: Dense(4096, 1000)

28: softmax5.5 Training CNN

Training of convolutional neural networks follows the same paradigm as the training of fully connected neural networks studied in unit 4. The application of a forward pass, and backpropgation yield the gradients and these are then updated using a first order method such as ADAM. The key point is that trained parameters in convolutional layers are the filters and the biases.

All the popular deep learning frameworks, Tensorflow, PyTorch, Keras, Flux (for Julia), and others allow for efficient gradient computation.

A key component of efficient training involves employing GPUs effectively. We do not deal with these aspects in this course. You may enjoy this video by Siraj Raval to get a feel for the usage of GPUs. Then the specific application of GPUs for your purpuse may depend on the language, framework, hardware, and application that you have in mind.

5.6 Four famous architectures

We now survey four famous architectures that were both research landmarks and are used today. The VGG architecture was already used a above. A complete lecture about these architectures is here:

Our purpuse is simply to highlight the key features. See also the Key papers in the development of deep learning section.

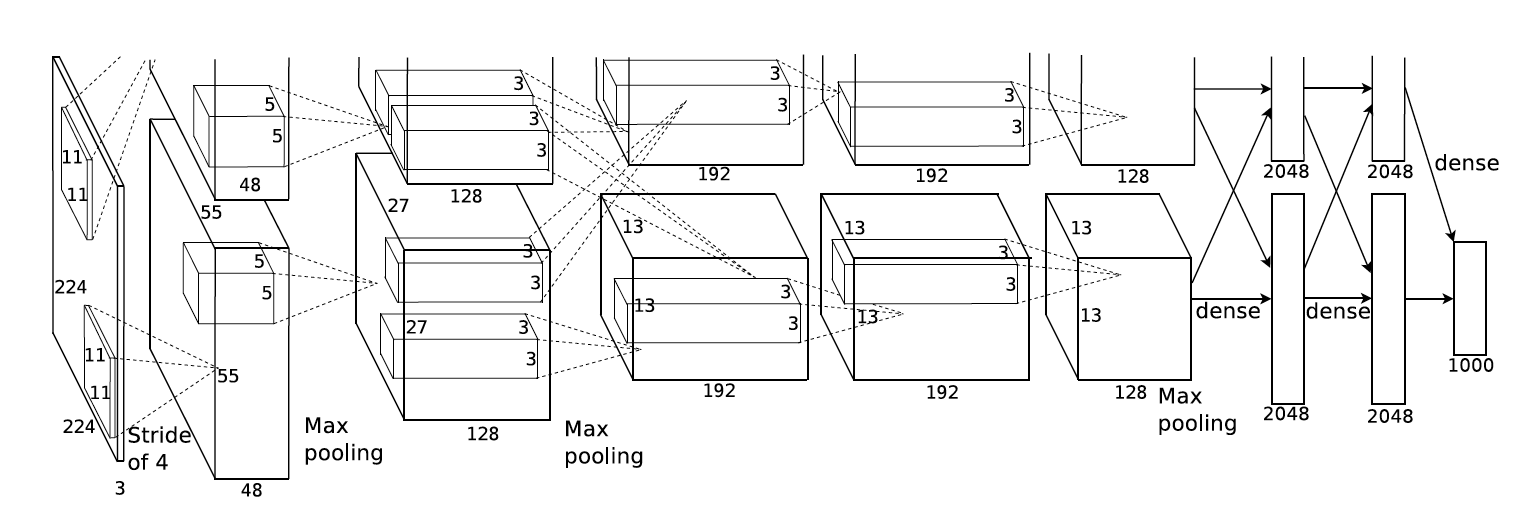

- AlexNet: A 2012 network that made history. It has eight layers; the first five are convolutional layers, some of them followed by max-pooling layers, and the last three are fully connected layers. AlexNet used convolutions in the first layer with a stride of . It also had an architecture that was taylor made to running on two parallel GPUs (it predates the current deep learning frameworks).

The AlexNet architecture, taken from the original paper

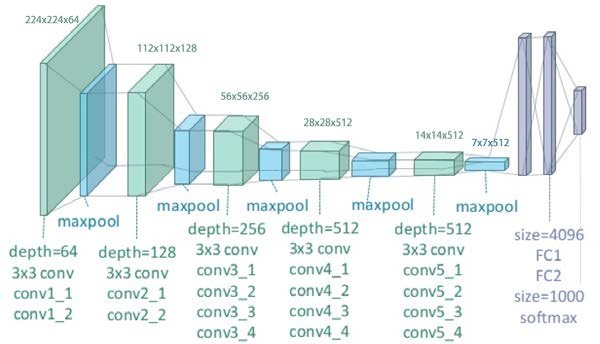

- VGG: This was the next step after AlexNet and is stil used today. Read for example this blog post and see the demonstrations above. A common form is VGG16, but there is also VGG19 that we used above.

Here is a schematic of the VGG19 architecture. Note that the green blocks contain several convolutional layers each.

VGG19 - Image courtesy of Clifford K. Yang

To contrast VGG with AlexNet it is useful to consider the concept of the receptive field. The receptive field of a neuron in a CNN is defined as the region in the input space (image) that the neuron is looking at. See for example this comprehnsive blog post. VGG uses combinations to smaller convolutions to achieve similar receptive fields to AlexNet (which uses bigger convolutions).

- Inception (GoogleNet): This network (or better stated as ‘family of networks’) is in many ways close to the current state of the art of general convolutional neural networks. It is heavily used today. See for example this blog post. Key is the usage of inception modules which conctanate seeral convolutional operations together. See for example this short video:

- Resnets: The resnet (residual network) architecture introduces a new idea: residual connections. The main idea is to have layers work on the sum of the output from the direct previous layer as well as several layers back (2 or more). This architecture allows to overcome training problems that occur with very deep networks. Here is a comprehensive video describing Resnets:

5.7 Inner layer interpretation

In general deep learning models and particularly CNNs are not interpretable models. That is, unlike simpler statistical models such as GLMs, the trained parameters of deep neural networks do not have an immediate interpretation.

Nevertheless, the convolutional filters of deep learning networks can be visualized and in certain cases one may make some sense of what certain filters ‘do’. Watch this video which presents several ways for getting some interperation of innner layers.

5.8 Beyond Classification

When learning about CNNs the basic first task to consider is image classification - and this is what we have done above. However, CNNs can be used for several additional important tasks.

These include (among others):

- Object localization: Finding the location (and type) of an object within an image. E.g. looking at an image of a street and identifying cars, pedestrains, and other objects.

- Landmark detection: Finding the location of multiple landmarks within in image. E.g. looking at an image of a face and finding the tip of the nose, the edges of the eyes, etc.

- Object detection: Finding several objects and their type in an image.

- Region proposals: Determining which regions in an image denote different objects.

- One shot learning: A face verification algorithm that uses a limited training set to learn a similarity function that quantifies how different two given images are.

- Style transfer: Transfering style from one type of image to a different type of image.

CNNs have been adapted to deal with tasks such as those above and many others. As an example, one very popular state of the art algorithm that deals with object detection is YOLO (You Only Look Once). See this nice video (again by Siraj Raval):

We don’t cover the full breadth of these tasks in the course, but instaed focus on the simplest task possible, object localization.

5.8.1 Object localization

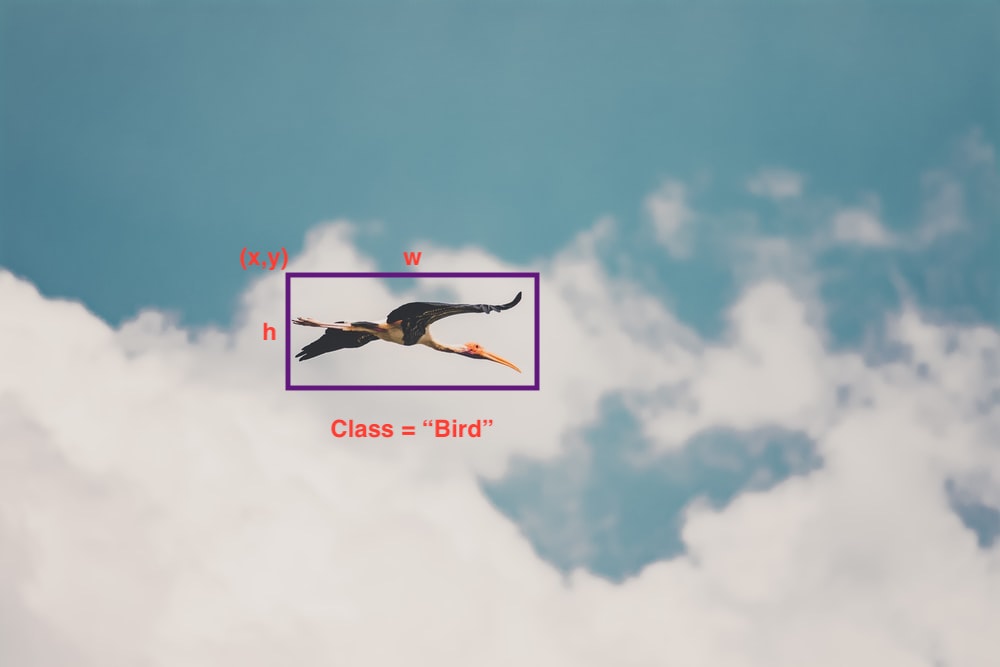

Here assume you are presented with an image such as this one,

Assume you wish to classify the object in the image as well as to locate where it is in the image. The classification will be to one of classes (say “bird”, “plane”, or “nothing”) and the location to a 4-tuple where is the location of the top right corner pixel, is the width, and is the height. The result of such object localization (and classification) may yield something as follows:

A standard way to carry out this task is to have the network output both the probability vector for the class labels and the tuple.

A typical way to do this is to have output labels as, where indicates the probability that there is an object (0 or 1 on a training image), , , , and , are as described above, is the probability of the object (if there is one) being of class 1 (“bird”), and is the probability of the ojbect (if there is one) beiung of class (“plane”).

Note that for images that have “nothing”, the training label will be, where are ‘don’t care’ values - meaning they can be anything.

Note that we use square loss here for simplicity, however CE loss can be used for the probabilities instead. In this case, a possible training loss is,

Page built: 2021-03-04 using R version 4.0.3 (2020-10-10)