
2 Logistic Regression Type Neural Networks

Learning outcomes from this chapter

- Logistic regression view as a shallow Neural Network

- Maximum Likelihood, loss function, cross-entropy

- Softmax regression/ multinomial regression model as a Multiclass Perceptron.

- Optimisation procedure: gradient descent, stochastic gradient descent, Mini-Batches

- Understand the forward pass and backpropagation step

- Implementation from first principles

2.1 Logistic regression view as a shallow Neural Network

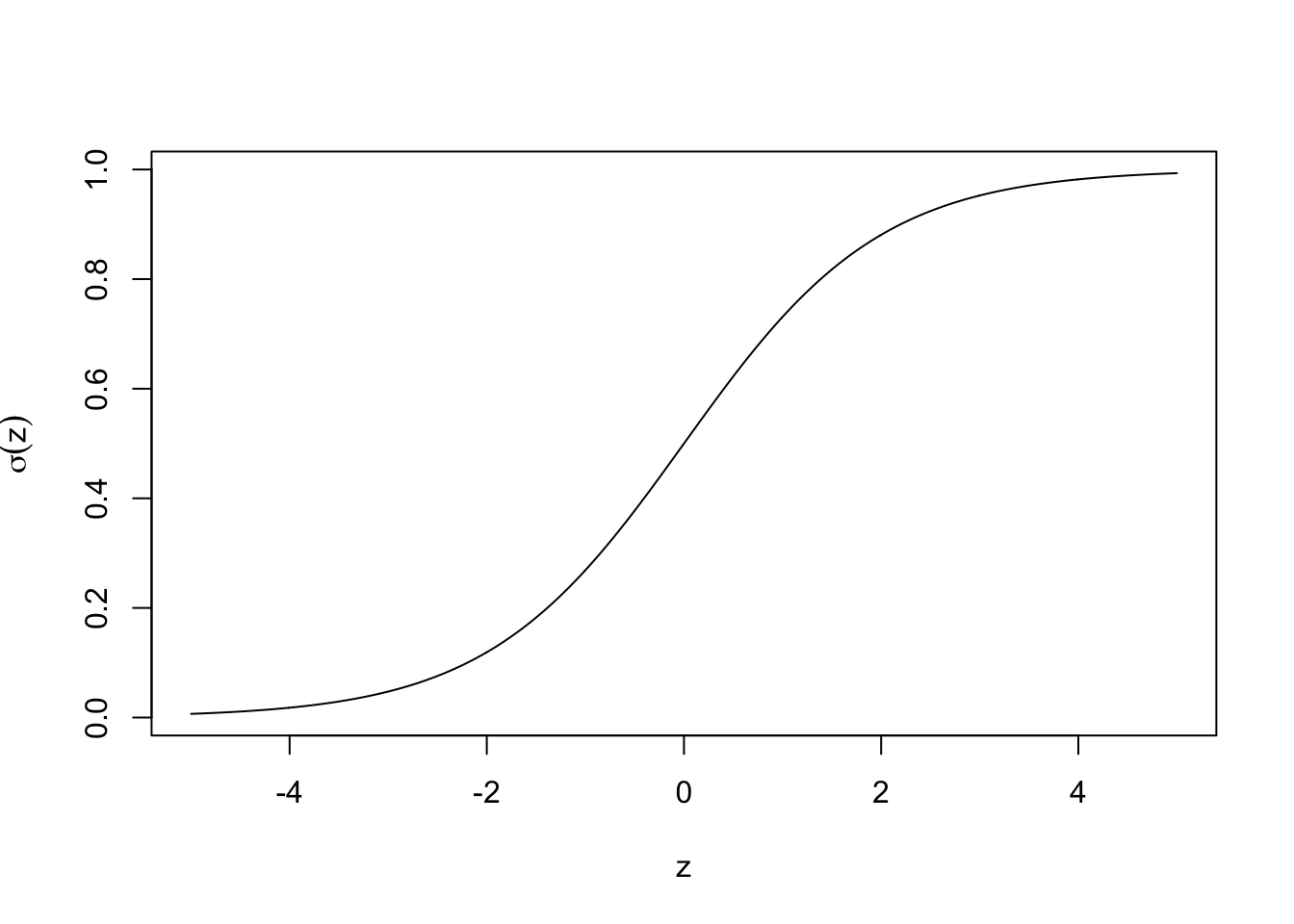

2.1.1 Sigmoid function

The sigmoid function $\sigma$, also known as the logistic function, is defined as follows:

$$\sigma(z) = \frac{1}{1+\exp(-z)}, \qquad z \in \mathbb{R}.$$

Figure 2.1: Sigmoid function

z <- seq(-5, 5, 0.01)

sigma = 1 / (1 + exp(-z))

plot(sigma~z,type="l",ylab=expression(sigma(z)))

2.1.2 Logistic regression

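Logistic regression is a probabilistic model that predicts the probability that the outcome variable $y$ equals 1. It assumes that $y \mid x;\theta \sim \text{Bernoulli}(\phi)$. The model is then defined by applying the sigmoid function $\sigma$ to the linear predictor $\theta^T x$:

$$\phi = h_\theta(x) = p(y=1 \mid x;\theta) = \frac{1}{1+\exp(-\theta^T x)} = \sigma(\theta^T x).$$

Logistic regression is also often presented through the logit link:

$$\text{logit}\left[h_\theta(x)\right] = \log\left[\frac{p(y=1 \mid x;\theta)}{1-p(y=1 \mid x;\theta)}\right] = \theta^T x,$$

where $\theta^T x = \theta_0 + \sum_{j=1}^{d}\theta_j x_j$.

Remark about notation

- $x=(x_0,x_1,\ldots,x_d)^T$ represents a vector of $d+1$ features/predictors and, by convention, $x_0=1$

- $\theta=(\theta_0,\theta_1,\ldots,\theta_d)^T$ is the vector of parameters related to the features

- $\theta_0$ is called the intercept by statisticians and the bias by computer scientists (generally denoted $b$)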
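As a small illustration of the model above, the following R sketch evaluates the predicted probability for one observation and checks that the logit of that probability gives back the linear predictor. The values in `theta` and `x` are made-up numbers, and `h_theta` is only an illustrative variable name.

```r
# Sigmoid (logistic) function
sigmoid <- function(z) 1 / (1 + exp(-z))

# Hypothetical parameters: intercept first, then two feature weights
theta <- c(-1, 2, 0.5)
# Feature vector with the convention x_0 = 1
x <- c(1, 0.3, 1.2)

# Predicted probability that the outcome is 1
h_theta <- sigmoid(sum(theta * x))

# The logit of the probability recovers the linear predictor
logit <- log(h_theta / (1 - h_theta))
c(probability = h_theta, logit = logit, linear_predictor = sum(theta * x))
```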

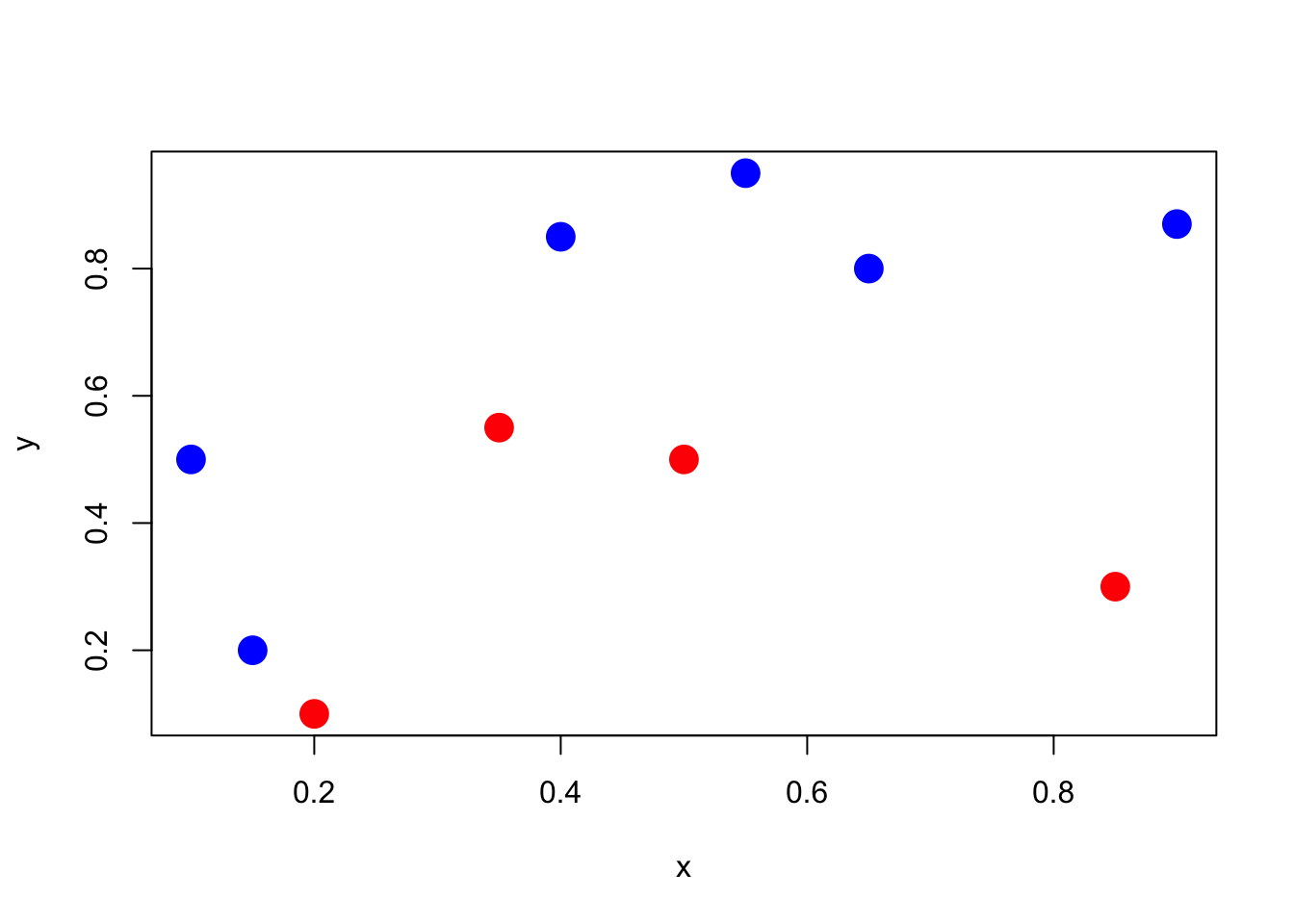

2.1.3 Logistic regression for classification

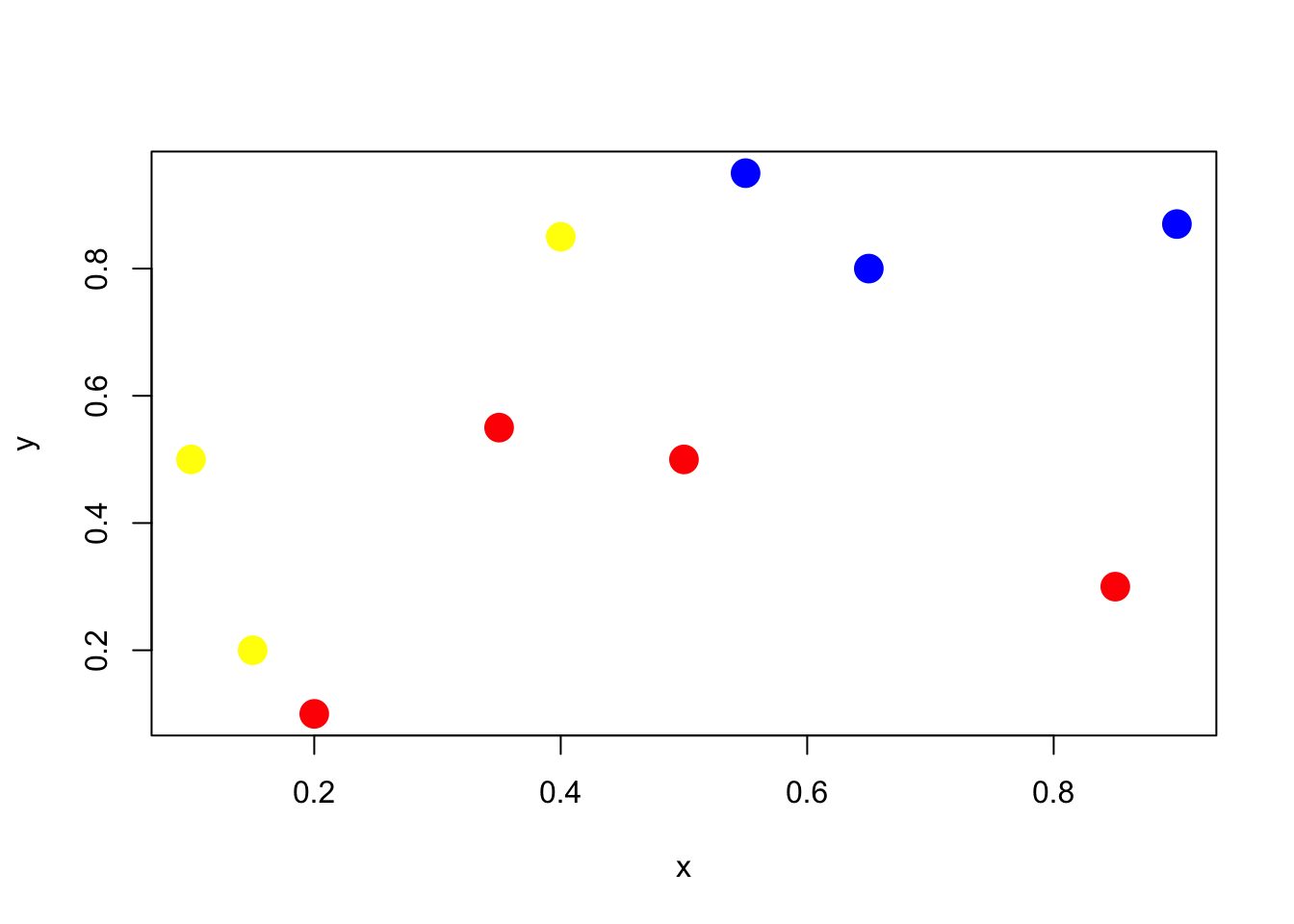

Let’s play with a simple example with 10 points, and two classes (red and blue)

Figure 2.2: Classify red and blue points


clr1 <- c(rgb(1,0,0,1),rgb(0,0,1,1))

clr2 <- c(rgb(1,0,0,.2),rgb(0,0,1,.2))

x <- c(.4,.55,.65,.9,.1,.35,.5,.15,.2,.85)

y <- c(.85,.95,.8,.87,.5,.55,.5,.2,.1,.3)

z <- c(1,1,1,1,1,0,0,1,0,0)

df <- data.frame(x,y,z)

plot(x,y,pch=19,cex=2,col=clr1[z+1])

In order to classify the points, we run a logistic regression to get predictions

model <- glm(z~x+y,data=df,family=binomial)

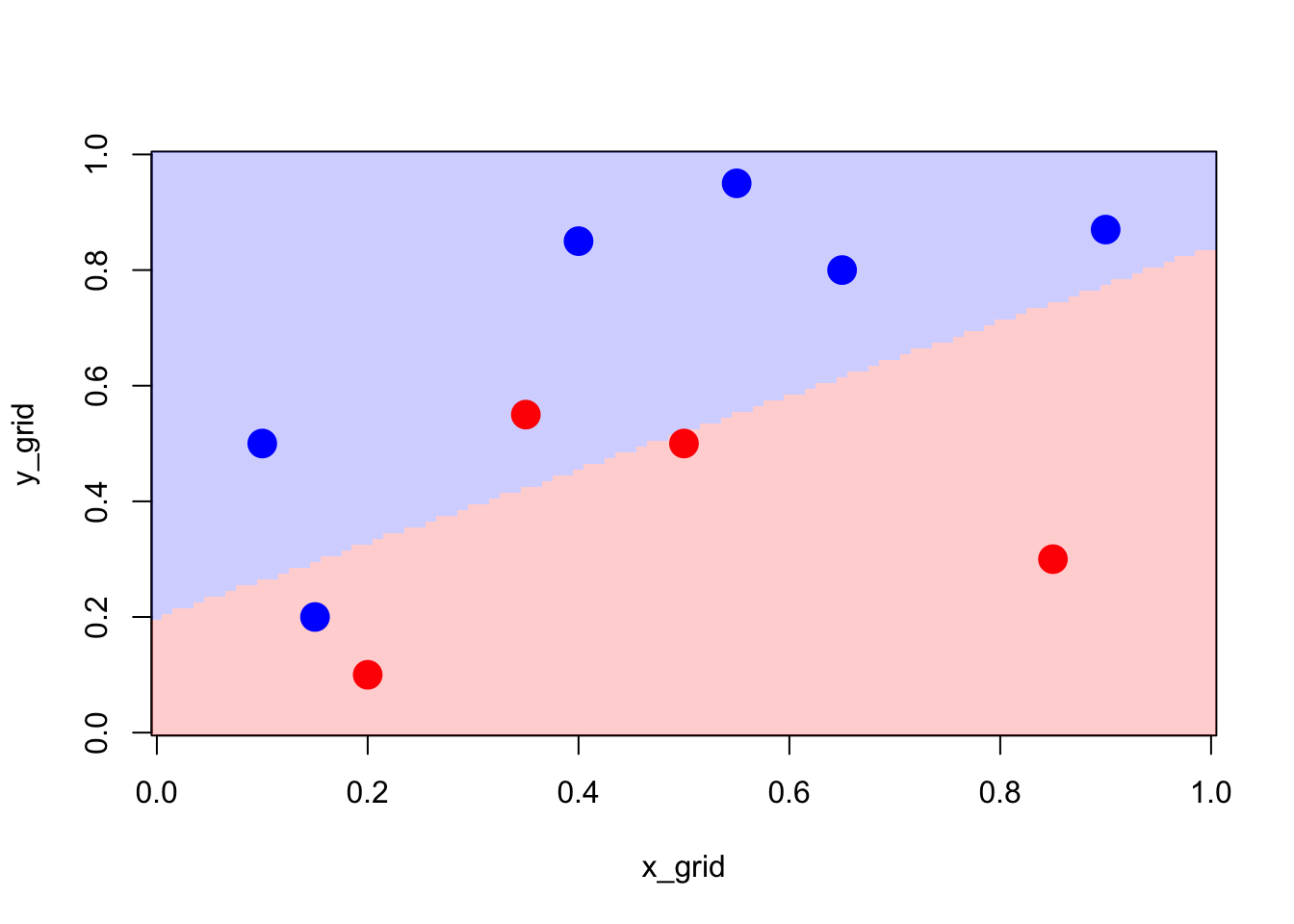

#summary(model)

Then, we use the fitted model to define our classifier, which assigns each point to its most likely class.

pred_model <- function(x,y){

predict(model,newdata=data.frame(x=x,

y=y),type="response")>.5

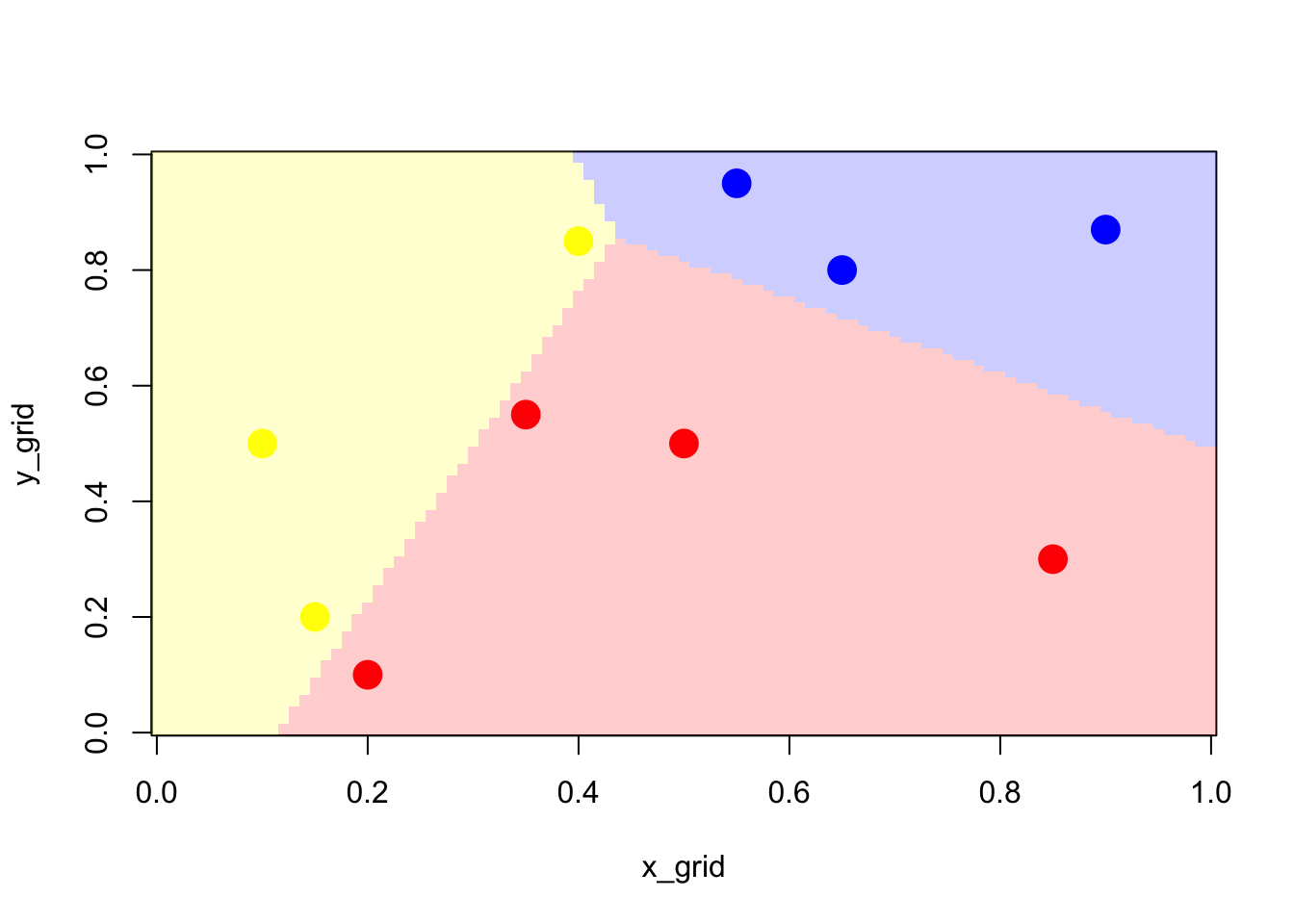

}Using our decision rule, we can visualise the produced partition of the space.

Figure 2.3: Partition using the logistic model


x_grid<-seq(0,1,length=101)

y_grid<-seq(0,1,length=101)

z_grid <- outer(x_grid,y_grid,pred_model)

image(x_grid,y_grid,z_grid,col=clr2)

points(x,y,pch=19,cex=2,col=clr1[z+1])

2.1.4 Likelihood of the logistic model

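The maximum likelihood estimation procedure is generally used to estimate the parameters $\theta$ of the model. With $h_\theta(x)=\sigma(\theta^T x)$, the Bernoulli assumption gives

$$p(y \mid x;\theta) = \begin{cases} h_\theta(x) & \text{if } y=1 \\ 1-h_\theta(x) & \text{if } y=0 \end{cases}$$

which could be written as

$$p(y \mid x;\theta) = h_\theta(x)^{y}\left(1-h_\theta(x)\right)^{1-y}.$$

Consider now $m$ training samples, denoted by $\{(x^{(1)},y^{(1)}),\ldots,(x^{(m)},y^{(m)})\}$, as i.i.d. observations from the logistic model. The likelihood is

$$L(\theta) = \prod_{i=1}^{m} h_\theta(x^{(i)})^{y^{(i)}}\left(1-h_\theta(x^{(i)})\right)^{1-y^{(i)}}.$$

Then, the following log-likelihood is maximized to obtain the estimate of $\theta$:

$$\ell(\theta) = \sum_{i=1}^{m}\left[y^{(i)}\log h_\theta(x^{(i)}) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right)\right].$$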
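To make the log-likelihood concrete, the sketch below evaluates it by hand on simulated data and compares the value at the fitted coefficients with the one reported by `logLik()` for a `glm` fit. The simulated data, the true coefficients (0.5 and 1.5) and the helper name `loglik` are assumptions chosen for illustration only.

```r
set.seed(1)
n <- 200
x1 <- rnorm(n)
y <- rbinom(n, size = 1, prob = 1 / (1 + exp(-(0.5 + 1.5 * x1))))

# Log-likelihood of the logistic model for a parameter vector theta
loglik <- function(theta, X, y) {
  p <- 1 / (1 + exp(-as.vector(X %*% theta)))
  sum(y * log(p) + (1 - y) * log(1 - p))
}

X <- cbind(1, x1)                      # first column carries the intercept
fit <- glm(y ~ x1, family = binomial)  # maximum likelihood fit

ll_manual <- loglik(coef(fit), X, y)
ll_glm <- as.numeric(logLik(fit))
c(manual = ll_manual, glm = ll_glm)
```

Any other parameter value, for instance `c(0, 0)`, should give a smaller log-likelihood than the fitted coefficients.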

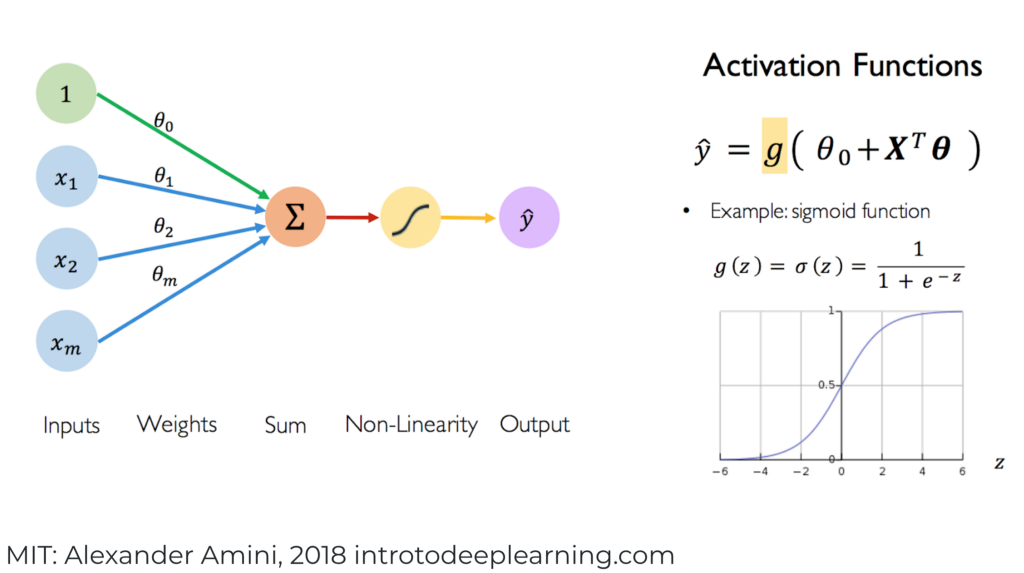

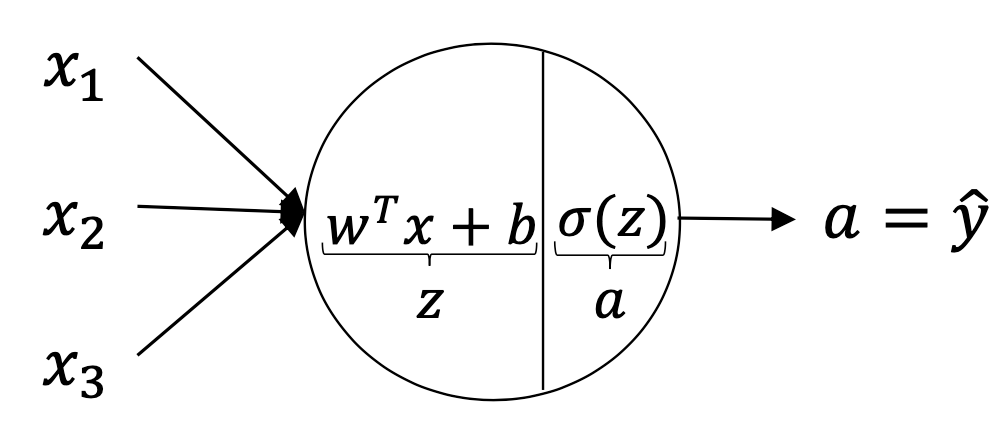

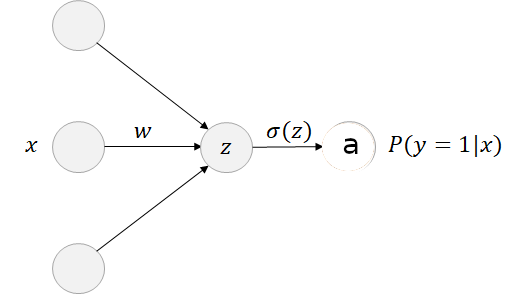

2.1.5 Shallow Neural Network

The logistic model can be viewed as a shallow Neural Network.

The figure below uses the same notation as the logistic regression model presented from the statistical point of view. In the following, however, we adopt the notation most frequently used in deep learning frameworks.

Figure 2.4: Shallow Neural Network

In this figure, $z=\theta^T x$ is the linear combination of the features/predictors and $a=\sigma(z)$ is the output of the activation function, the non-linear part of the Neural Network, which gives a prediction $\hat{y}=a$ close to $y$.

Remark: In the sequel, we will adopt the following notation:

- $x=(x_1,\ldots,x_d)^T$ represents a vector of $d$ features/predictors

- $w=(w_1,\ldots,w_d)^T$ is the vector of weights related to the features

- $b$ is called the bias

- We consider $m$ training samples, denoted by $\{(x^{(1)},y^{(1)}),\ldots,(x^{(m)},y^{(m)})\}$

2.1.6 Entropy, Cross-entropy and Kullback-Leibler

Let’s first talk about the cross-entropy, which is widely used as a loss function for classification.

Cross-entropy (CE) is related to the entropy and to the Kullback-Leibler risk.

The entropy of a discrete probability distribution $p$ is defined as

$$H(p) = -\sum_{x} p(x)\log p(x),$$

which is a "measurement of the disorder or randomness of a system".

The Kullback-Leibler divergence, also known as the KL divergence, quantifies how similar a probability distribution $p$ is to a candidate distribution $q$:

$$KL(p\,\|\,q) = \sum_{x} p(x)\log\frac{p(x)}{q(x)}.$$

Note that the KL divergence is not a distance measure, as $KL(p\,\|\,q)\neq KL(q\,\|\,p)$ in general. $KL(p\,\|\,q)$ is non-negative, and zero if and only if $p(x)=q(x)$ for all $x$.

One can easily show that

$$KL(p\,\|\,q) = -\sum_{x} p(x)\log q(x) - H(p),$$

where the first term of the right part is the cross-entropy:

$$H(p,q) = -\sum_{x} p(x)\log q(x).$$

And we have the relation

$$H(p,q) = H(p) + KL(p\,\|\,q).$$

Thus, the cross-entropy can be interpreted as the uncertainty implicit in $p$, measured by $H(p)$, plus a term measuring how likely it is that the distribution $p$ could have been generated by the distribution $q$.
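The relation between entropy, cross-entropy and KL divergence can be checked numerically. The sketch below uses two made-up discrete distributions `p` and `q` with strictly positive probabilities (an assumption that avoids `log(0)`); the helper names are illustrative.

```r
p <- c(0.5, 0.3, 0.2)   # reference distribution
q <- c(0.4, 0.4, 0.2)   # candidate distribution

entropy <- function(p) -sum(p * log(p))
cross_entropy <- function(p, q) -sum(p * log(q))
kl <- function(p, q) sum(p * log(p / q))

# H(p, q) should equal H(p) + KL(p || q); KL is not symmetric
c(H_p = entropy(p), H_pq = cross_entropy(p, q),
  KL_pq = kl(p, q), KL_qp = kl(q, p))
```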

2.1.7 Mathematical expression of the Neural Network:

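For one example $x^{(i)}$, the output of this Neural Network is given by:

$$\hat{y}^{(i)} = \sigma\left(w^T x^{(i)} + b\right),$$

where $\sigma$ is the sigmoid function.

We aim to find the weights $w$ and the bias $b$ such that $\hat{y}^{(i)}\approx y^{(i)}$.

The loss function used for this network is the cross-entropy, which is defined for one sample $(x^{(i)},y^{(i)})$ as:

$$L(\hat{y}^{(i)},y^{(i)}) = -\left[y^{(i)}\log\hat{y}^{(i)} + \left(1-y^{(i)}\right)\log\left(1-\hat{y}^{(i)}\right)\right].$$

Then the cost function for the entire training data set of $m$ samples is:

$$J(w,b) = \frac{1}{m}\sum_{i=1}^{m} L(\hat{y}^{(i)},y^{(i)}).$$

Further, it is easy to see the connection with the log-likelihood function $\ell(\theta)$ of the logistic model:

$$J(w,b) = -\frac{1}{m}\,\ell(\theta),$$

where $b=\theta_0$ and $w=(\theta_1,\ldots,\theta_d)^T$.

The optimization step is carried out using gradient descent procedures and their extensions, which are briefly presented in the sub-section Optimisation.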

2.2 Softmax regression

A softmax regression, also called a multiclass logistic regression, generalizes logistic regression to more than 2 outcome classes ($k=1,\ldots,K$). The outcome variable $y$ is a discrete variable which can take one of the $K$ values, $y\in\{1,\ldots,K\}$. The multinomial regression model is also a GLM (Generalized Linear Model) where the distribution of the outcome $y$ is a Multinomial$(1,\pi)$, where $\pi=(\phi_1,\ldots,\phi_K)$ is a vector with probabilities of success for each category. This Multinomial$(1,\pi)$ is more precisely called the categorical distribution.

The multinomial regression model is parameterized by $K-1$ parameters, $\phi_1,\ldots,\phi_{K-1}$, where $\phi_i=p(y=i;\phi)$, and $\phi_K=p(y=K;\phi)=1-\sum_{i=1}^{K-1}\phi_i$.

By convention, we set $\theta_K=0$, which makes the Bernoulli parameter $\phi_i$ of each class $i$ be such that

$$\phi_i = \frac{\exp(\theta_i^T x)}{\sum_{j=1}^{K}\exp(\theta_j^T x)},$$

where $\theta_1,\ldots,\theta_{K-1}\in\mathbb{R}^{d+1}$ are the parameters of the model. This model is also called softmax regression and generalizes logistic regression. The output of the model is the estimated probability $p(y=i\mid x;\theta)$, for every value of $i=1,\ldots,K$.
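A short R sketch of the softmax probabilities may help here. The parameter matrix `Theta` (one column per class, last column set to zero by the convention above) and the feature vector `x` are made-up values, and `softmax` is a hypothetical helper; subtracting `max(z)` is a standard numerical-stability trick that does not change the result.

```r
# Numerically stable softmax: subtracting max(z) leaves the result unchanged
softmax <- function(z) {
  e <- exp(z - max(z))
  e / sum(e)
}

# Hypothetical parameters for K = 3 classes and x = (1, x_1, x_2);
# the last column is theta_K = 0 by convention
Theta <- cbind(c(0.2, 1.0, -0.5), c(-0.3, 0.4, 0.8), c(0, 0, 0))
x <- c(1, 0.5, -1.0)

phi <- softmax(as.vector(t(Theta) %*% x))
phi              # estimated class probabilities, summing to one
which.max(phi)   # index of the most likely class
```

With $K=2$ and $\theta_2=0$, the first softmax probability reduces to the sigmoid of $\theta_1^T x$, which is the logistic regression model.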

2.2.1 Multinomial regression for classification

We illustrate the Multinomial model by considering three classes: red, yellow and blue.

Figure 2.5: Classify for three color points


clr1 <- c(rgb(1,0,0,1),rgb(1,1,0,1),rgb(0,0,1,1))

clr2 <- c(rgb(1,0,0,.2),rgb(1,1,0,.2),rgb(0,0,1,.2))

x <- c(.4,.55,.65,.9,.1,.35,.5,.15,.2,.85)

y <- c(.85,.95,.8,.87,.5,.55,.5,.2,.1,.3)

z <- c(1,2,2,2,1,0,0,1,0,0)

df <- data.frame(x,y,z)

plot(x,y,pch=19,cex=2,col=clr1[z+1])

One can use the R package nnet to run a multinomial regression model

library(nnet)

model.mult <- multinom(z~x+y,data=df)

# weights: 12 (6 variable)

initial value 10.986123

iter 10 value 0.794930

iter 20 value 0.065712

iter 30 value 0.064409

iter 40 value 0.061612

iter 50 value 0.058756

iter 60 value 0.056225

iter 70 value 0.055332

iter 80 value 0.052887

iter 90 value 0.050644

iter 100 value 0.048117

final value 0.048117

stopped after 100 iterations

Then, the output gives a predicted probability for each of the three colours, and we assign the colour that is the most likely.

pred_mult <- function(x,y){

res <- predict(model.mult,

newdata=data.frame(x=x,y=y),type="probs")

apply(res,MARGIN=1,which.max)

}

x_grid<-seq(0,1,length=101)

y_grid<-seq(0,1,length=101)

z_grid <- outer(x_grid,y_grid,FUN=pred_mult)

We can now visualize the three regions: the frontiers are linear, and their intersection is the equiprobable case.

Figure 2.6: Classifier using multinomial model


image(x_grid,y_grid,z_grid,col=clr2)

points(x,y,pch=19,cex=2,col=clr1[z+1])

2.2.2 Likelihood of the softmax model

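The maximum likelihood estimation procedure consists of maximizing the log-likelihood:

$$\ell(\theta) = \sum_{i=1}^{m}\log p(y^{(i)}\mid x^{(i)};\theta) = \sum_{i=1}^{m}\sum_{k=1}^{K}\mathbb{1}\{y^{(i)}=k\}\,\log\frac{\exp(\theta_k^T x^{(i)})}{\sum_{j=1}^{K}\exp(\theta_j^T x^{(i)})}.$$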

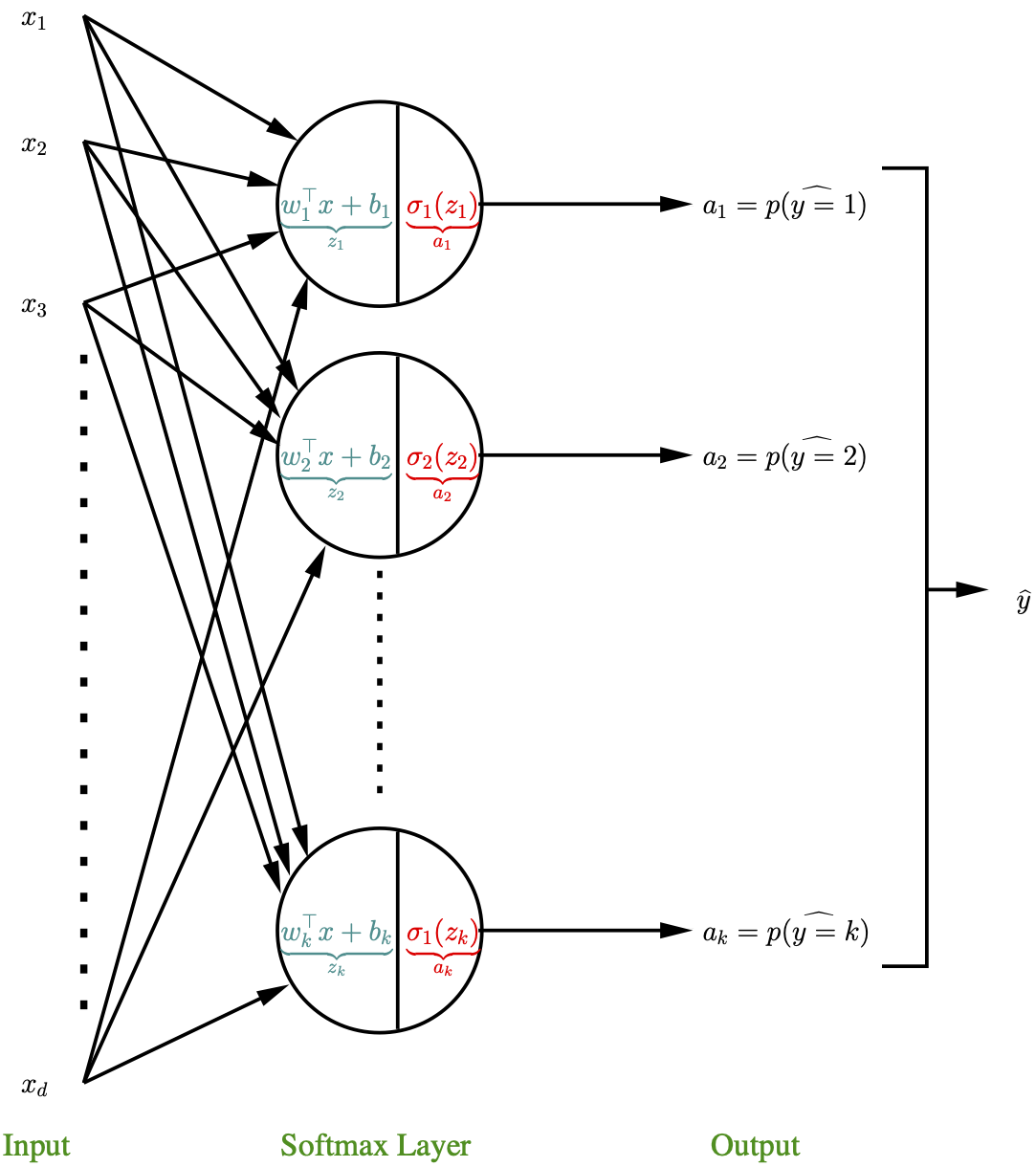

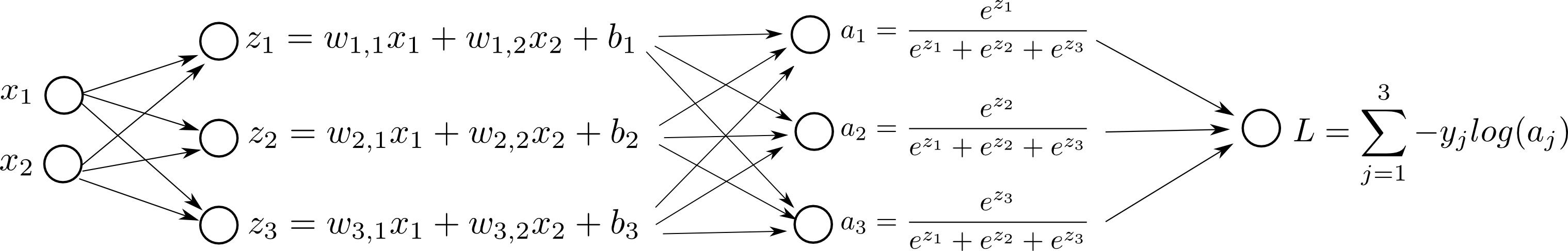

2.2.3 Softmax regression as shallow Neural Network

The Softmax regression model can be viewed as a shallow Neural Network.

In this representation, there are $K$ neurons, where each neuron $k$ is defined by its own set of weights $w_k$ and a bias term $b_k$. The linear part is denoted by $z_k=w_k^T x+b_k$ and the non-linear part (activation part) is $a_k=\exp(z_k)/\sum_{j=1}^{K}\exp(z_j)$. Note that the denominator of the activation part is defined using the weights from the other neurons. The output is a vector of probabilities $(a_1,\ldots,a_K)$ and the $\arg\max$ function is used for classification purposes:

$$\hat{y} = \arg\max_{k\in\{1,\ldots,K\}} a_k.$$

2.2.4 Loss function: cross-entropy for categorical variable

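Let us first consider one training sample $(x,y)$. The cross-entropy loss for a categorical response variable, also called the Softmax Loss, is defined as:

$$L(a,y) = -\sum_{k=1}^{K} y_k\log a_k,$$

where $a_k$ is the predicted probability of class $k$ and $y_k$ is a binary variable indicating if $y$ is in the class $k$.

This expression can be rewritten as

$$L(a,y) = -\sum_{k=1}^{K} y_k\log\frac{\exp(z_k)}{\sum_{j=1}^{K}\exp(z_j)}, \qquad z_k=w_k^T x+b_k.$$

Then, the cost function for the $m$ training samples is defined as

$$J(W,b) = \frac{1}{m}\sum_{i=1}^{m} L(a^{(i)},y^{(i)}).$$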
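Because exactly one indicator is equal to 1 for each sample, the softmax loss reduces to minus the log of the probability assigned to the true class. The sketch below computes the cost this way; the probability matrix `A`, the labels `y` and the helper `ce_cost` are made-up for illustration, and averaging over the samples is assumed.

```r
# Cost over m samples: A is an m x K matrix of predicted probabilities,
# y is a vector of class indices in 1..K
ce_cost <- function(A, y) {
  # A[cbind(i, y_i)] picks the probability of the true class in each row
  mean(-log(A[cbind(seq_along(y), y)]))
}

A <- rbind(c(0.7, 0.2, 0.1),
           c(0.1, 0.8, 0.1),
           c(0.3, 0.3, 0.4))
y <- c(1, 2, 3)

# Same cost written with one-hot indicators, as in the formula
Y <- diag(3)[y, ]
cost_onehot <- -mean(rowSums(Y * log(A)))

c(indexed = ce_cost(A, y), one_hot = cost_onehot)
```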

2.3 Optimisation

2.3.1 Gradient Descent

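Consider the unconstrained, smooth convex optimization problem

$$\min_{\theta} f(\theta).$$

Algorithm : Gradient Descent

- Choose initial point $\theta^{(0)}$

- Repeat $\theta^{(k)}=\theta^{(k-1)}-\alpha\,\nabla f(\theta^{(k-1)})$, for $k=1,2,3,\ldots$

- Stop at some point: for instance when $\lVert\theta^{(k)}-\theta^{(k-1)}\rVert<\epsilon$

Here, $\alpha$ is called the learning rate.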

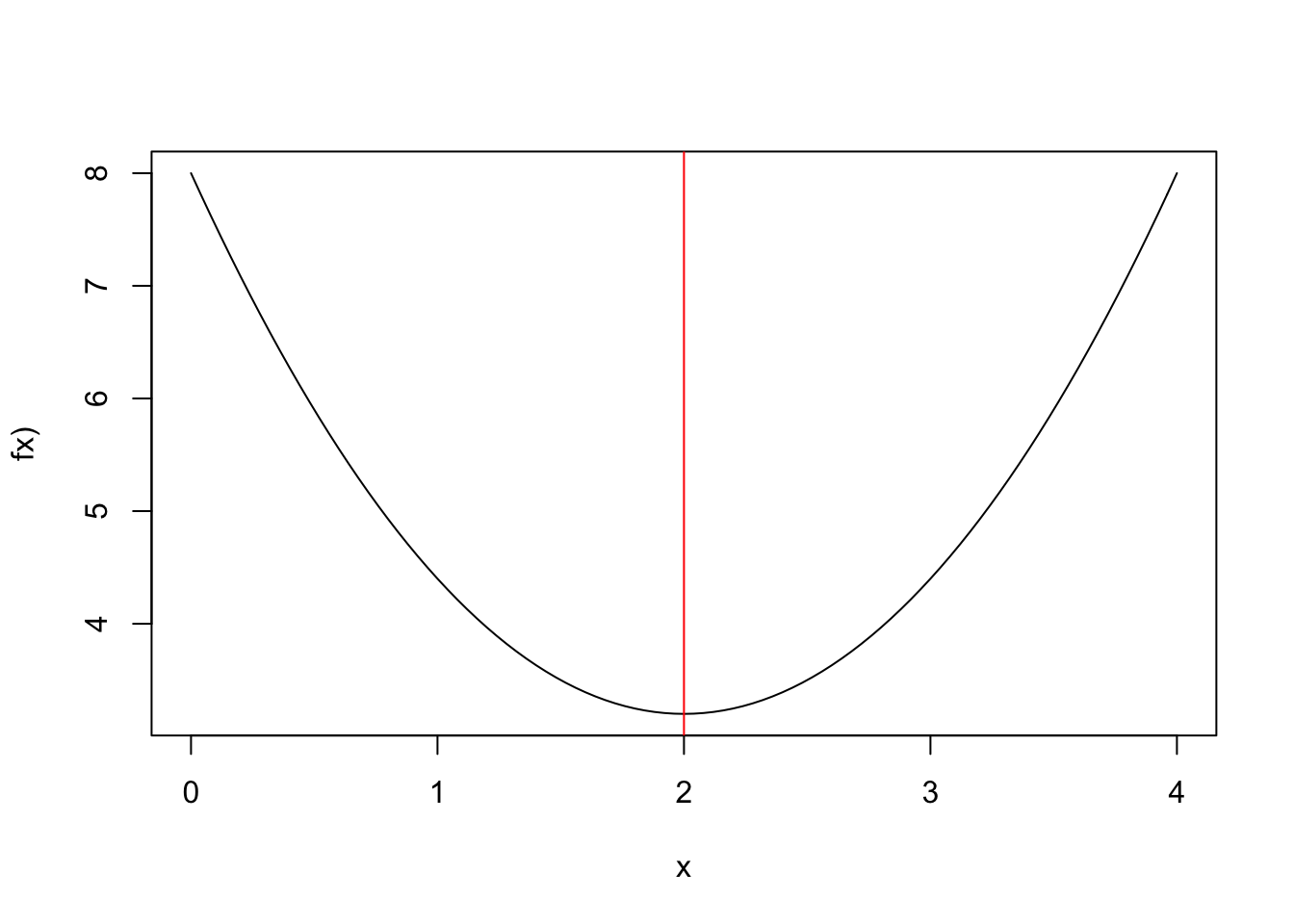

Suppose that we want to find $x$ that minimizes:

$$f(x) = 1.2\,(x-2)^2+3.2$$

Figure 2.7: Closed form solution (red)

f.x <- function(x) 1.2*(x-2)**2+3.2

curve(1.2*(x-2)**2+3.2,0,4,ylab="f(x)")

abline(v=2,col="red")

In general, we cannot find a closed form solution, but we can compute the gradient, here $f'(x)=2.4\,(x-2)$, and apply gradient descent:

simple.grad.des <- function(x0,alpha,epsilon=0.00001,max.iter=300){
  # tol: size of the last step; res: all visited points, starting with x0
  tol <- 1; xold <- x0; res <- x0; iter <- 1
  while (tol>epsilon && iter < max.iter){
    # gradient step, using f'(x) = 2.4*(x-2)
    xnew <- xold - alpha*2.4*(xold-2)
    tol <- abs(xnew-xold)
    xold <- xnew
    res <- c(res,xnew)
    iter <- iter +1
  }
  return(res)
}

result <- simple.grad.des(0,0.01,max.iter=200)

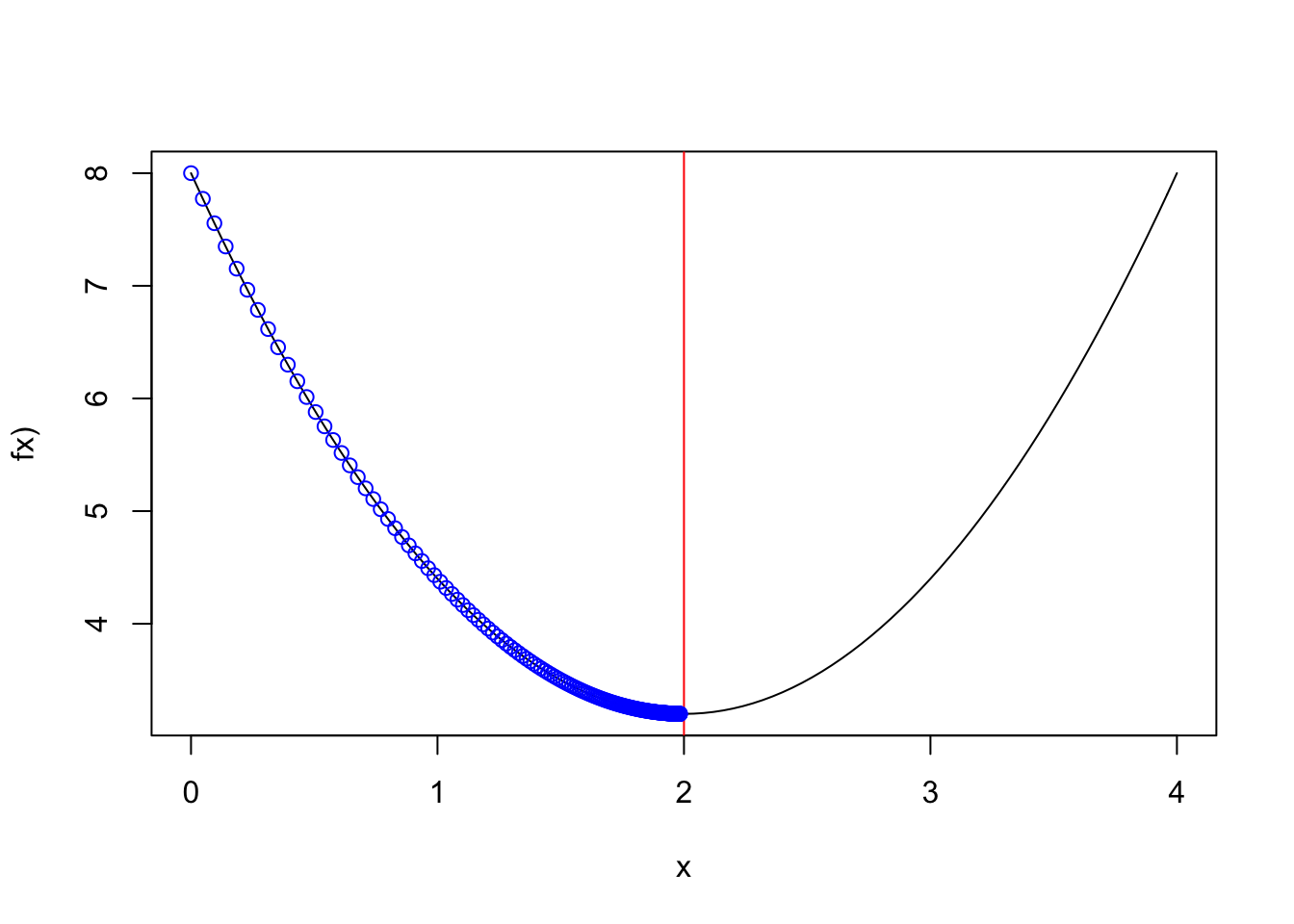

#result[length(result)]Convergence with a learning rate=0.01

Figure 2.8: alpha=0.01

Figure 2.8: alpha=0.01

curve(1.2*(x-2)**2+3.2,0,4,ylab="fx)")

abline(v=2,col="red")

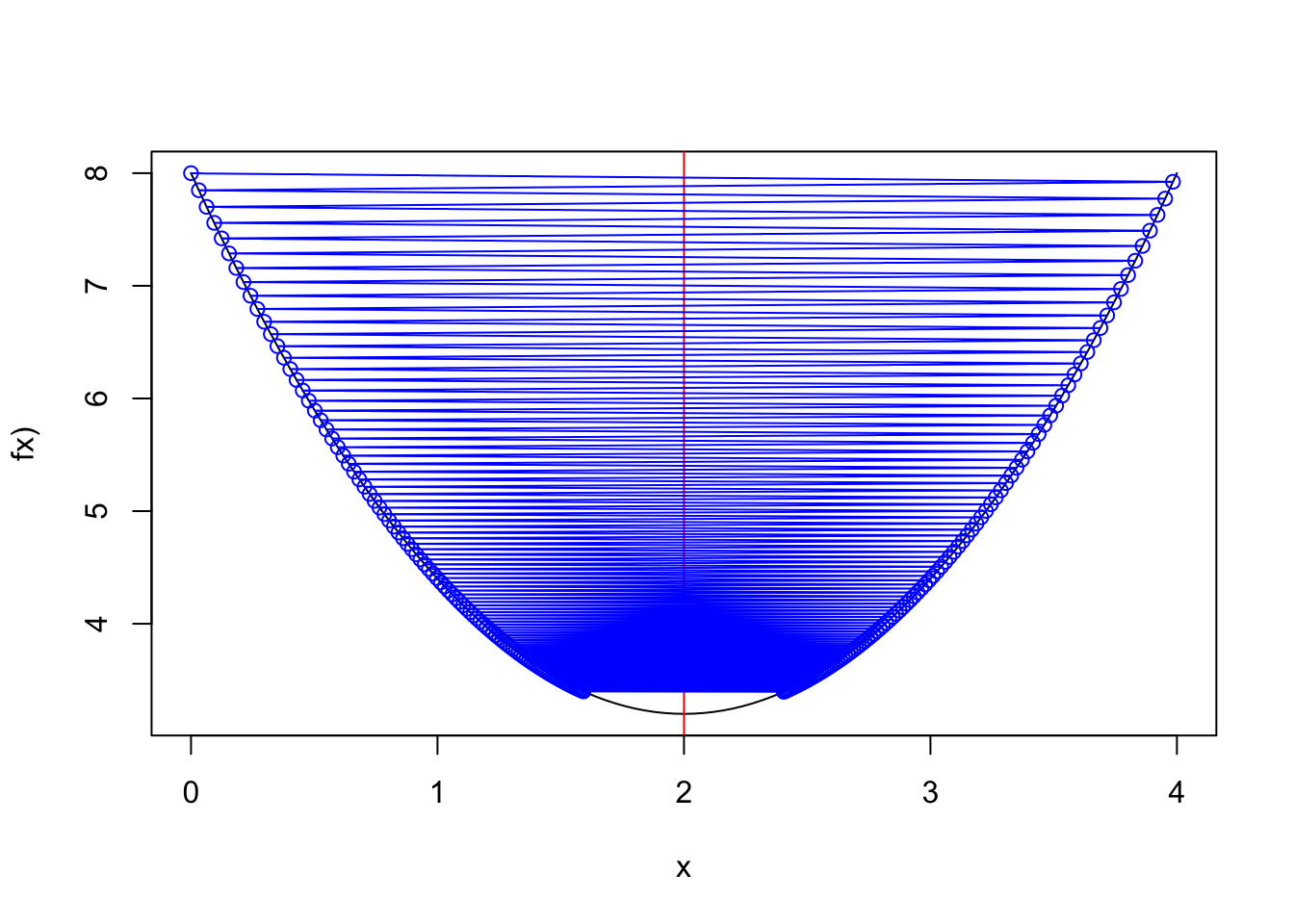

points(result,f.x(result),col="blue")Convergence with a learning rate=0.83

Figure 2.9: alpha=0.83

Figure 2.9: alpha=0.83

result2 <- simple.grad.des(0,0.83,max.iter=200)

#result2[length(result2)]

curve(1.2*(x-2)**2+3.2,0,4,ylab="f(x)")

abline(v=2,col="red")

points(result2,f.x(result2),col="blue",type="o")

2.3.2 Gradient Descent for logistic regression

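Given $(x^{(i)},y^{(i)})\in\mathbb{R}^d\times\{0,1\}$ for $i=1,\ldots,m$, consider the cross-entropy loss function for this data set:

$$f(\theta) = \sum_{i=1}^{m}\left\{-y^{(i)}\,\theta^T x^{(i)}+\log\left(1+\exp(\theta^T x^{(i)})\right)\right\}$$

The gradient is

$$\nabla f(\theta) = \sum_{i=1}^{m}\left(p_i(\theta)-y^{(i)}\right)x^{(i)}$$

where $p_i(\theta)=\exp(\theta^T x^{(i)})/\left(1+\exp(\theta^T x^{(i)})\right)$.

Algorithm : Batch Gradient Descent

- Initialize $\theta=(\theta_1,\ldots,\theta_d)^T$

- Repeat until convergence

- Let $g=\sum_{i=1}^{m}\left(p_i(\theta)-y^{(i)}\right)x^{(i)}$ be the gradient vector

- for $j=1,\ldots,d$ do $\theta_j\leftarrow\theta_j-\alpha\,g_j$

- end

- End repeat until convergence

Note that this algorithm uses all $m$ samples to compute the gradient. This approach is called batch gradient descent.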
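Before running gradient descent it can be useful to check the analytic gradient against a numerical one. The sketch below does this with central finite differences on simulated data; the data, the step size `h` and the helper names `cost`, `grad` and `num_grad` are assumptions made for illustration.

```r
set.seed(42)
m <- 100
X <- matrix(rnorm(m * 2), ncol = 2)
y <- rbinom(m, size = 1, prob = 1 / (1 + exp(-X %*% c(0.5, -1.5))))

# Cross-entropy cost and its analytic gradient sum_i (p_i - y_i) x_i
cost <- function(theta) sum(-y * (X %*% theta) + log(1 + exp(X %*% theta)))
grad <- function(theta) {
  p <- 1 / (1 + exp(-X %*% theta))
  as.vector(t(X) %*% (p - y))
}

# Central finite differences as an independent check
num_grad <- function(theta, h = 1e-6) {
  sapply(seq_along(theta), function(j) {
    e <- replace(numeric(length(theta)), j, h)
    (cost(theta + e) - cost(theta - e)) / (2 * h)
  })
}

theta <- c(0, -1)
rbind(analytic = grad(theta), numeric = num_grad(theta))
```

The two rows should agree up to the finite-difference error.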

2.3.3 Stochastic gradient descent

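Algorithm : Stochastic Gradient Descent

- Initialize $\theta$

- Repeat until convergence

- Pick sample $i$

- Update $\theta\leftarrow\theta-\alpha\left(p_i(\theta)-y^{(i)}\right)x^{(i)}$, where $p_i(\theta)=\sigma(\theta^T x^{(i)})$

- End repeat until convergence

The coefficient vector $\theta$ is updated after each sample.

Remark: The full gradient computation is doable when $m$ is moderate, but not when $m$ is very large.

One batch update costs $O(md)$

One stochastic update costs $O(d)$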

2.3.4 Mini-Batches

In practice, mini-batch is often used to:

- Compute gradient based on a small subset of samples

- Make update to coefficient vector
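The two steps above can be sketched in a few lines of R. This is only a minimal illustration under stated assumptions: the data are simulated, and the learning rate `alpha`, the batch size `batch_size` and the number of epochs are hypothetical values, not tuned choices.

```r
set.seed(3)
m <- 1000
X <- matrix(rnorm(m * 2), ncol = 2)
y <- rbinom(m, size = 1, prob = 1 / (1 + exp(-X %*% c(0.5, -1.5))))

sigmoid <- function(z) 1 / (1 + exp(-z))
theta <- c(0, 0)
alpha <- 0.01        # learning rate (hypothetical value)
batch_size <- 50     # mini-batch size (hypothetical value)

for (epoch in 1:20) {
  idx <- sample(m)   # reshuffle the samples at each epoch
  batches <- split(idx, ceiling(seq_along(idx) / batch_size))
  for (b in batches) {
    Xb <- X[b, , drop = FALSE]
    error <- as.vector(sigmoid(Xb %*% theta)) - y[b]
    # gradient computed on the current mini-batch only
    theta <- theta - alpha * as.vector(t(Xb) %*% error)
  }
}
theta
```

Each update touches only `batch_size` rows, so its cost sits between that of one stochastic update and one full batch update.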

2.3.5 Example with logistic regression

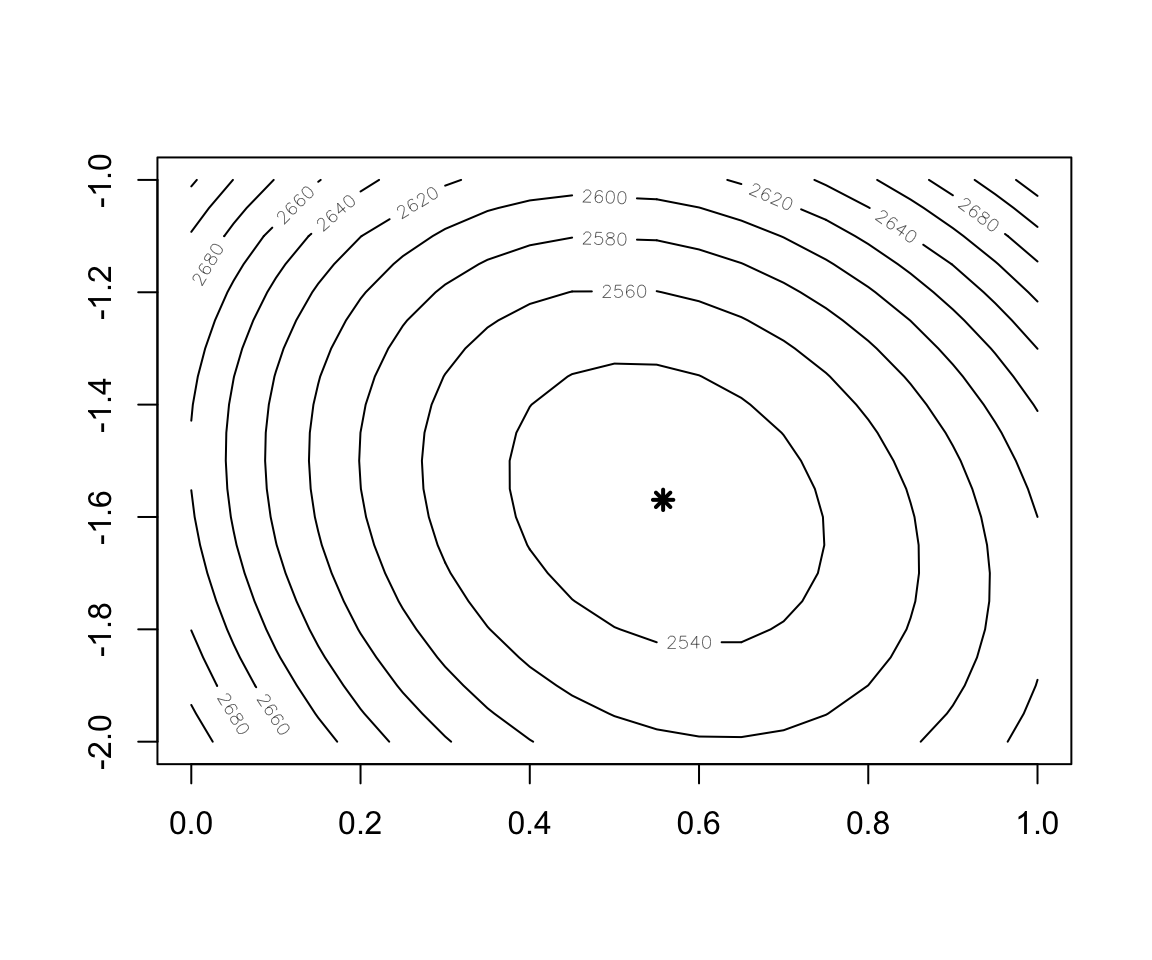

- Simulate some samples from true model:

set.seed(10)

m <- 5000 ;d <- 2 ;w <- c(0.5,-1.5)

x <- matrix(rnorm(m*2),ncol=2,nrow=m)

ptrue <- 1/(1+exp(-x%*%matrix(w,ncol=1)))

y <- rbinom(m,size=1,prob = ptrue)

(w.est <- coef(glm(y~x[,1]+x[,2]-1,family=binomial)))

## x[, 1] x[, 2]

## 0.557587 -1.569509

- The cross-entropy loss for this dataset

Cost.fct <- function(w1,w2) {

w <- c(w1,w2)

cost <- sum(-y*x%*%matrix(w,ncol=1)+log(1+exp(x%*%matrix(w,ncol=1))))

return(cost)

}

Figure 2.10: Contour plot of the Cost function


w1 <- seq(0, 1, 0.05)

w2 <- seq(-2, -1, 0.05)

cost <- outer(w1, w2, function(x,y) mapply(Cost.fct,x,y))

contour(x = w1, y = w2, z = cost)

points(x=w.est[1],y=w.est[2],col="black",lwd=2,lty=2,pch=8)

- Implementation of Batch Gradient Descent

sigmoid <- function(x) 1/(1+exp(-x))

batch.GD <- function(theta,alpha,epsilon,iter.max=500){

tol <- 1

iter <-1

res.cost <- Cost.fct(theta[1],theta[2])

res.theta <- theta

while (tol > epsilon & iter<iter.max) {

error <- sigmoid(x%*%matrix(theta,ncol=1))-y

theta.up <- theta-as.vector(alpha*matrix(error,nrow=1)%*%x)

res.theta <- cbind(res.theta,theta.up)

tol <- sum((theta-theta.up)**2)^0.5

theta <- theta.up

cost <- Cost.fct(theta[1],theta[2])

res.cost <- c(res.cost,cost)

iter <- iter +1

}

result <- list(theta=theta,res.theta=res.theta,res.cost=res.cost,iter=iter,tol.theta=tol)

return(result)

}

dim(x);length(y)

## [1] 5000 2

## [1] 5000

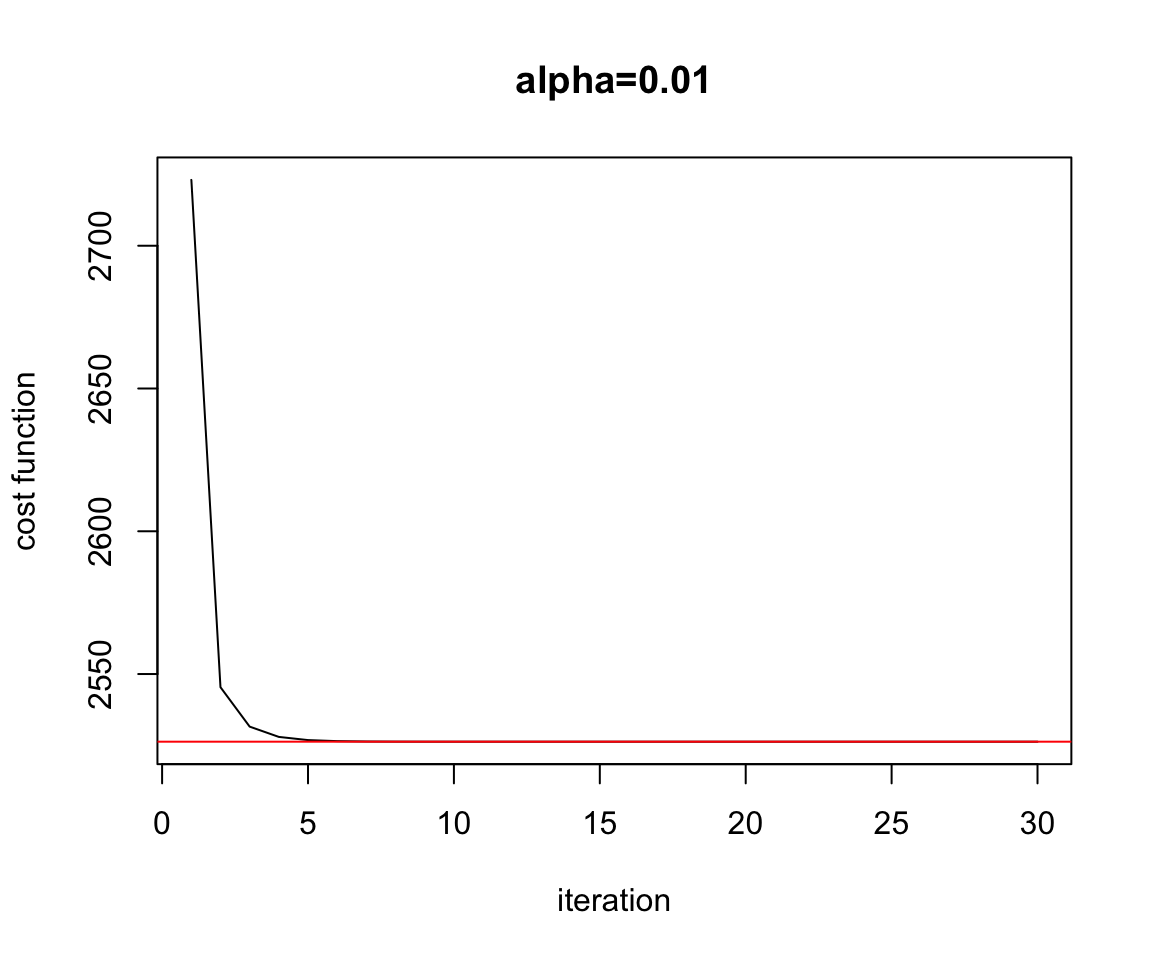

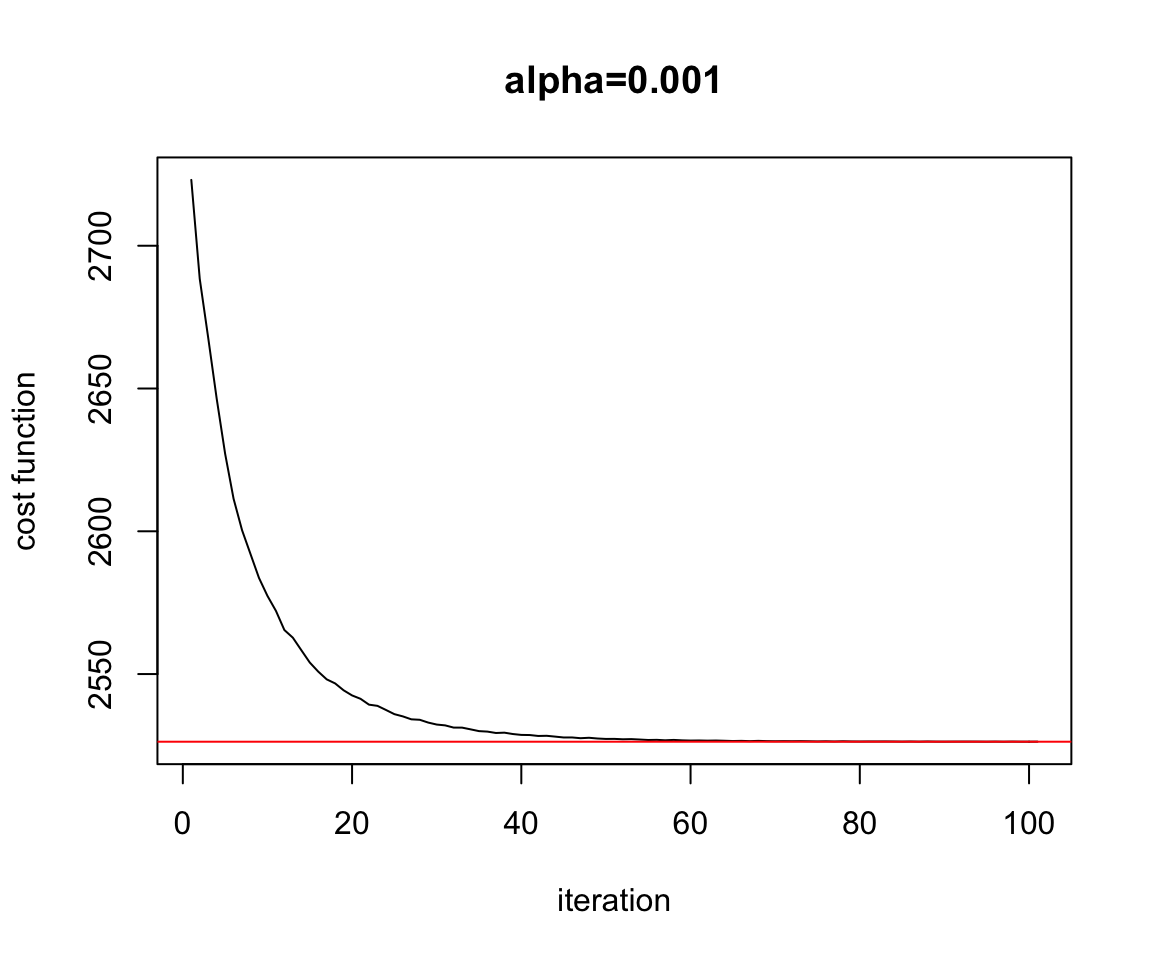

Figure 2.11: Convergence Batch Gradient Descent


theta0 <- c(0,-1); alpha=0.001

test <- batch.GD(theta=theta0,alpha,epsilon = 0.0000001)

plot(test$res.cost,ylab="cost function",xlab="iteration",main="alpha=0.001",type="l")

abline(h=Cost.fct(w.est[1],w.est[2]),col="red")

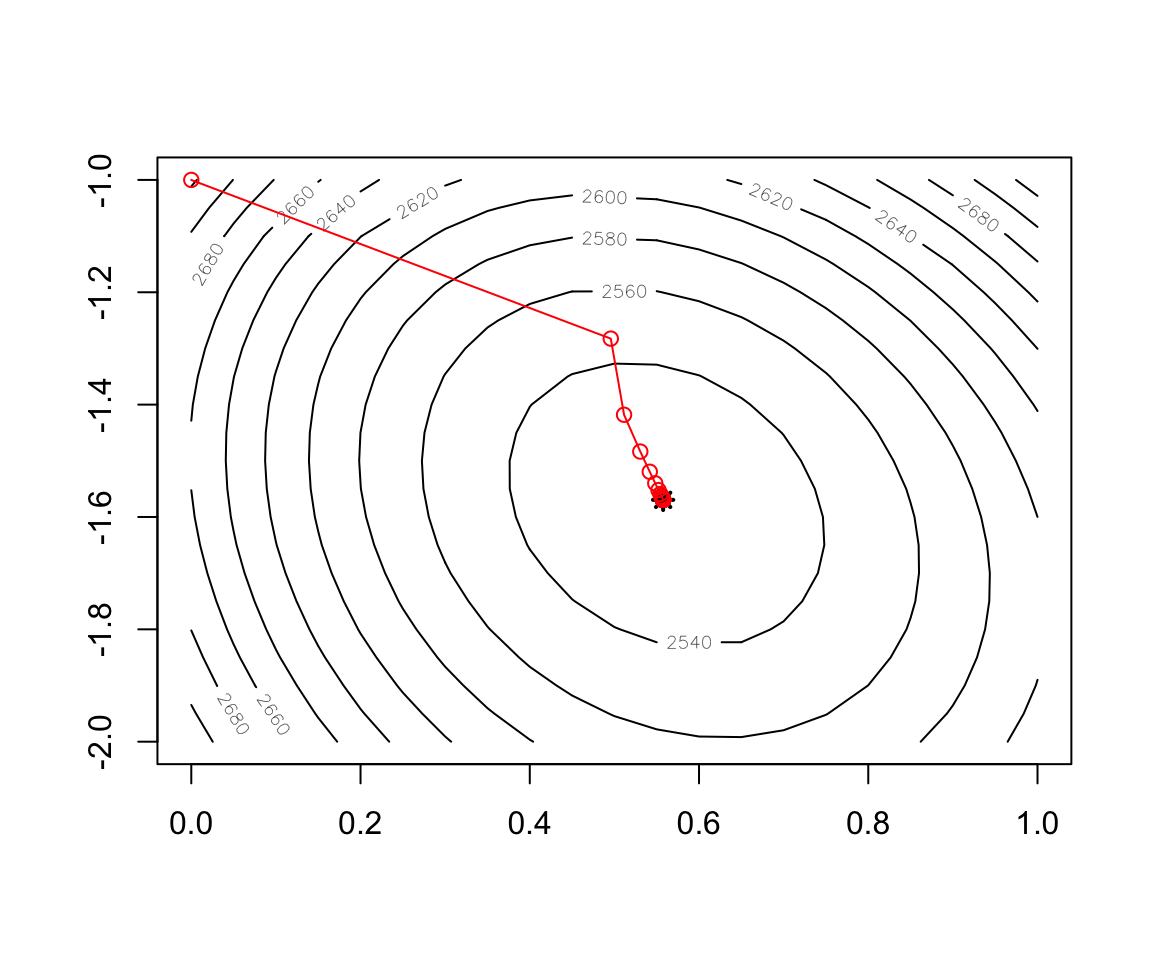

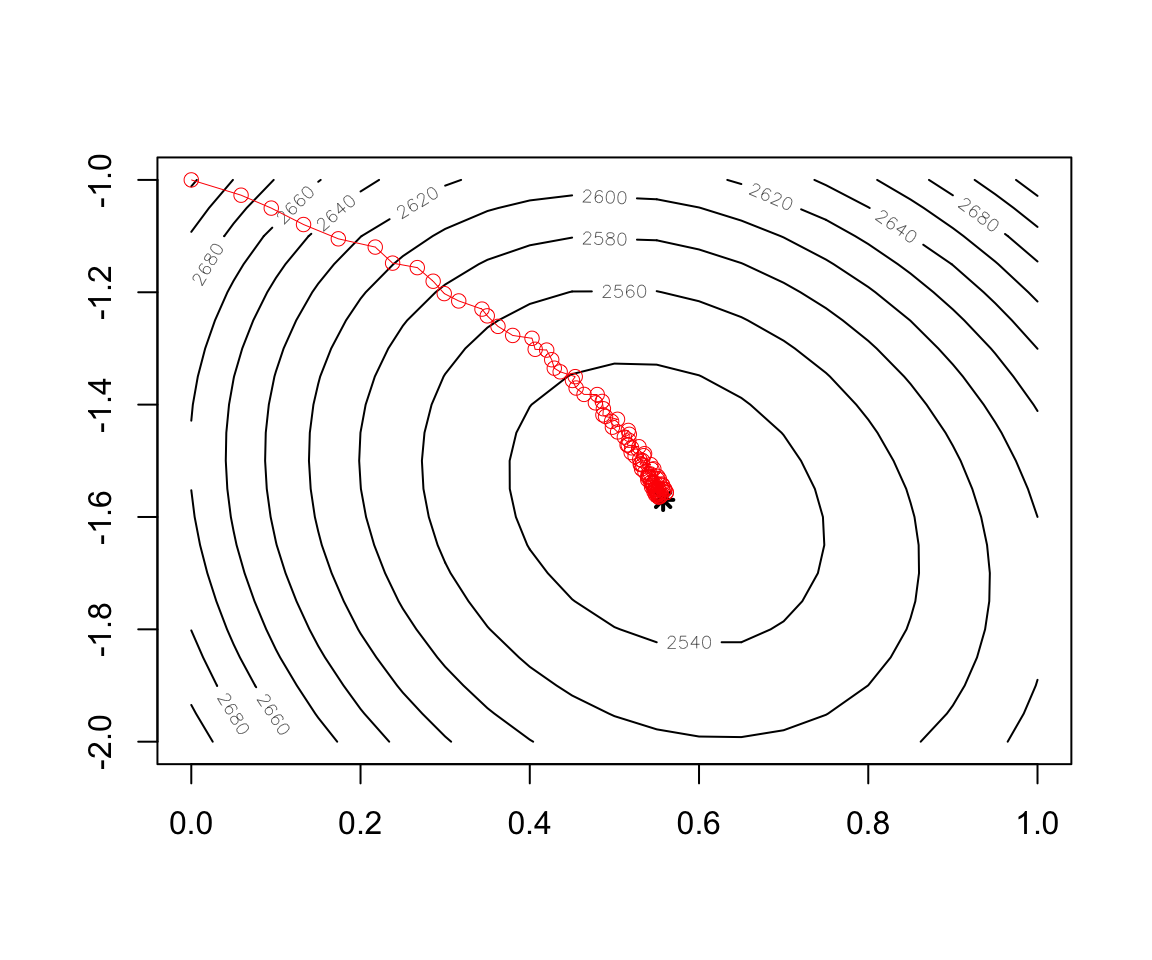

Figure 2.12: Convergence of BGD


contour(x = w1, y = w2, z = cost)

points(x=w.est[1],y=w.est[2],col="black",lwd=2,lty=2,pch=8)

record <- as.data.frame(t(test$res.theta))

points(record,col="red",type="o")

- Implementation of Stochastic Gradient Descent

Stochastic.GD <- function(theta,alpha,epsilon=0.0001,epoch=50){

epoch.max <- epoch

tol <- 1

epoch <-1

res.cost <- Cost.fct(theta[1],theta[2])

res.cost.outer <- res.cost

res.theta <- theta

while (tol > epsilon & epoch<epoch.max) {

for (i in 1:nrow(x)){

errori <- sigmoid(sum(x[i,]*theta))-y[i]

xi <- x[i,]

theta.up <- theta-alpha*errori*xi

res.theta <- cbind(res.theta,theta.up)

tol <- sum((theta-theta.up)**2)^0.5

theta <- theta.up

cost <- Cost.fct(theta[1],theta[2])

res.cost <- c(res.cost,cost)

}

epoch <- epoch +1

cost.outer <- Cost.fct(theta[1],theta[2])

res.cost.outer <- c(res.cost.outer,cost.outer)

}

result <- list(theta=theta,res.theta=res.theta,res.cost=res.cost,epoch=epoch,tol.theta=tol)

return(result)

}

test.SGD <- Stochastic.GD(theta=theta0,alpha,epsilon = 0.0001,epoch=10)

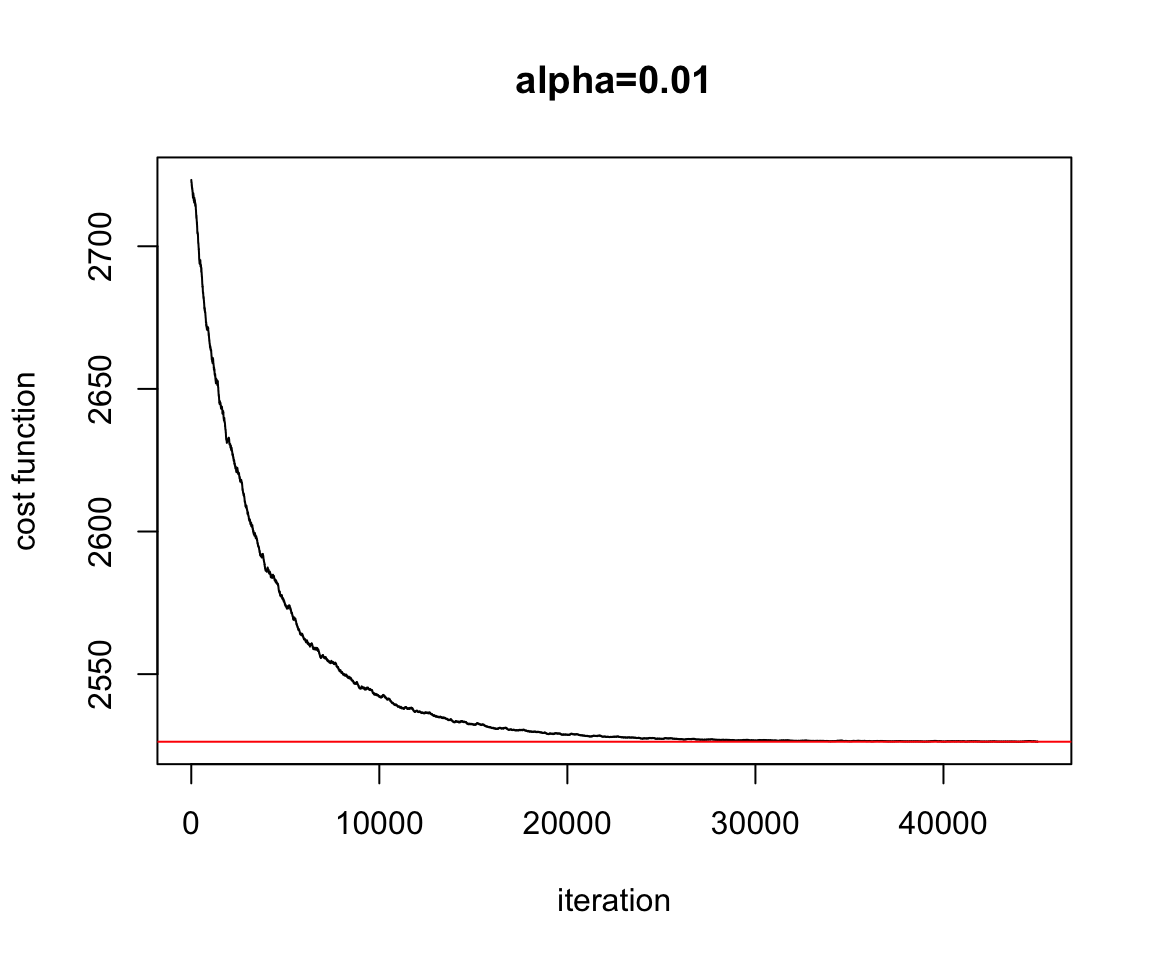

Figure 2.13: Convergence Stochastic Gradient Descent


plot(test.SGD$res.cost,ylab="cost function",xlab="iteration",main="alpha=0.001",type="l")

abline(h=Cost.fct(w.est[1],w.est[2]),col="red")

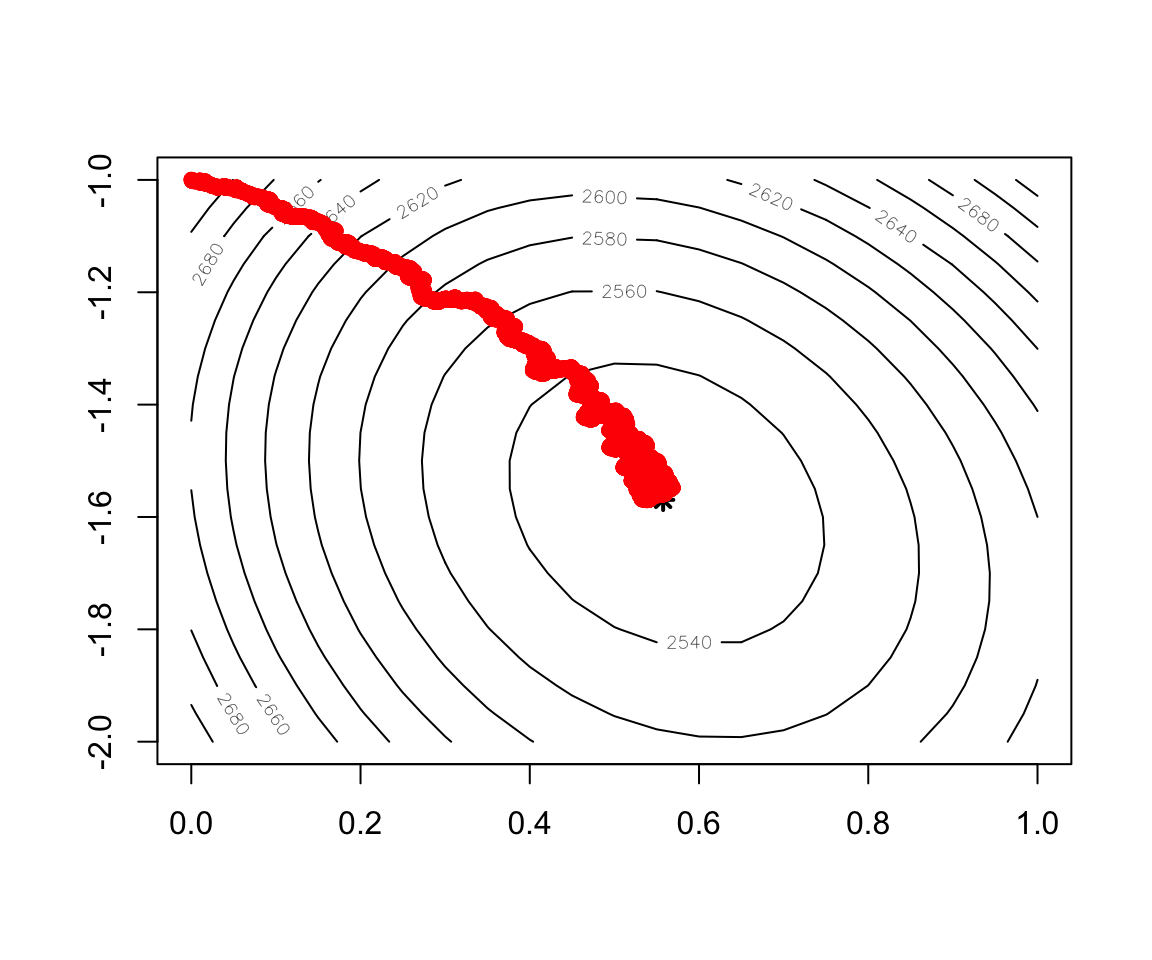

Figure 2.14: Convergence of Stochastic Gradient Descent


contour(x = w1, y = w2, z = cost)

points(x=w.est[1],y=w.est[2],col="black",lwd=2,lty=2,pch=8)

record2 <- as.data.frame(t(test.SGD$res.theta))

points(record2,col="red",lwd=0.5)

- Implementation of mini batch Gradient Descent

Mini.Batch <- function (theta,dataTrain, alpha = 0.1, maxIter = 10, nBatch = 2, seed = NULL,intercept=NULL)

{

batchRate <- 1/nBatch

dataTrain <- matrix(unlist(dataTrain), ncol = ncol(dataTrain), byrow = FALSE)

set.seed(seed)

dataTrain <- dataTrain[sample(nrow(dataTrain)), ]

set.seed(NULL)

res.cost <- Cost.fct(theta[1],theta[2])

res.cost.outer <- res.cost

res.theta <- theta

if(!is.null(intercept)) dataTrain <- cbind(1, dataTrain)

temporaryTheta <- matrix(ncol = length(theta), nrow = 1)

theta <- matrix(theta,ncol = length(theta), nrow = 1)

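for (iteration in 1:maxIter ) {
  # restart from the first batch once every batch has been used
  if (iteration%%nBatch == 1 | nBatch == 1) {
    temp <- 1
    batchSize <- nrow(dataTrain) * batchRate
    temp2 <- batchSize
  }
  batch <- dataTrain[temp:temp2, ]
  # all columns but the last are features; the last column is the response
  inputData <- batch[, 1:(ncol(batch) - 1)]
  outputData <- batch[, ncol(batch)]
  rowLength <- nrow(batch)
  temp <- temp + batchSize
  temp2 <- temp2 + batchSize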

error <- matrix(sigmoid(inputData %*% t(theta)),ncol=1) - outputData

for (column in 1:length(theta)) {

term <- error * inputData[, column]

gradient <- sum(term)#/rowLength

temporaryTheta[1, column] = theta[1, column] - (alpha *

gradient)

}

theta <- temporaryTheta

res.theta <- cbind(res.theta,as.vector(theta))

cost.outer <- Cost.fct(theta[1,1],theta[1,2])

res.cost.outer <- c(res.cost.outer,cost.outer)

}

result <- list(theta=theta,res.theta=res.theta,res.cost.outer=res.cost.outer)

return(result)

}

theta0 <- c(0,-1); alpha=0.001

data.Train <- cbind(x,y)

test.miniBatch <- Mini.Batch(theta=theta0,dataTrain=data.Train, alpha = 0.001, maxIter = 100, nBatch = 10, seed = NULL,intercept=NULL)

## Result from Mini-Batch

test.miniBatch$theta

## [,1] [,2]

## [1,] 0.5515469 -1.561252

Figure 2.15: Convergence Mini Batch


plot(test.miniBatch$res.cost.outer,ylab="cost function",xlab="iteration",main="alpha=0.001",type="l")

abline(h=Cost.fct(w.est[1],w.est[2]),col="red")

Figure 2.16: Convergence of Mini-Batch Gradient Descent

contour(x = w1, y = w2, z = cost)

points(x=w.est[1],y=w.est[2],col="black",lwd=2,lty=2,pch=8)

record3 <- as.data.frame(t(test.miniBatch$res.theta))

points(record3,col="red",lwd=0.5,type="o")

2.4 Chain rule

The univariate and multivariate chain rules are the key tools for computing the derivative of the cost with respect to any weight in the network. The following is a refresher of the different chain rules.

2.4.1 Univariate Chain rule

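- Univariate chain rule: if $y=f(u)$ and $u=g(x)$, where $f$ and $g$ are differentiable functions, then

$$\frac{dy}{dx} = \frac{dy}{du}\cdot\frac{du}{dx}$$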

2.4.2 Multivariate Chain Rule

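- Part I: Let $z=f(x,y)$, $x=g(t)$ and $y=h(t)$, where $f$, $g$ and $h$ are differentiable functions. Then $z$ is a function of $t$, and

$$\frac{dz}{dt} = \frac{\partial f}{\partial x}\cdot\frac{dx}{dt}+\frac{\partial f}{\partial y}\cdot\frac{dy}{dt}$$

- Part II:

- Let $z=f(x,y)$, $x=g(s,t)$ and $y=h(s,t)$, where $f$, $g$ and $h$ are differentiable functions. Then $z$ is a function of $s$ and $t$, and

$$\frac{\partial z}{\partial s} = \frac{\partial f}{\partial x}\cdot\frac{\partial x}{\partial s}+\frac{\partial f}{\partial y}\cdot\frac{\partial y}{\partial s}$$

and

$$\frac{\partial z}{\partial t} = \frac{\partial f}{\partial x}\cdot\frac{\partial x}{\partial t}+\frac{\partial f}{\partial y}\cdot\frac{\partial y}{\partial t}$$

- Let $z=f(x_1,\ldots,x_m)$ be a differentiable function of $m$ variables, where each of the $x_j$ is a differentiable function of the variables $t_1,\ldots,t_n$. Then $z$ is a function of the $t_i$, and

$$\frac{\partial z}{\partial t_i} = \sum_{j=1}^{m}\frac{\partial f}{\partial x_j}\cdot\frac{\partial x_j}{\partial t_i}$$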
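As a quick numerical check of Part I, the sketch below compares the chain-rule derivative with a central finite difference. The functions $f(x,y)=x^2y$, $g(t)=\cos t$ and $h(t)=\sin t$, as well as the evaluation point `t0`, are arbitrary choices made for illustration.

```r
# z = f(x, y) = x^2 * y with x = g(t) = cos(t) and y = h(t) = sin(t)
f <- function(x, y) x^2 * y
g <- function(t) cos(t)
h <- function(t) sin(t)

t0 <- 0.7
x0 <- g(t0); y0 <- h(t0)

# Chain rule: dz/dt = (df/dx) * (dx/dt) + (df/dy) * (dy/dt)
dz_chain <- (2 * x0 * y0) * (-sin(t0)) + (x0^2) * cos(t0)

# Central finite difference of the composite function t -> f(g(t), h(t))
eps <- 1e-6
dz_num <- (f(g(t0 + eps), h(t0 + eps)) - f(g(t0 - eps), h(t0 - eps))) / (2 * eps)

c(chain_rule = dz_chain, finite_difference = dz_num)
```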

2.5 Forward pass and backpropagation procedures

Backpropagation is the key tool adopted by the neural network community to update the weights. This method computes the derivative of the cost with respect to each weight using the chain rule (univariate and multivariate rules).

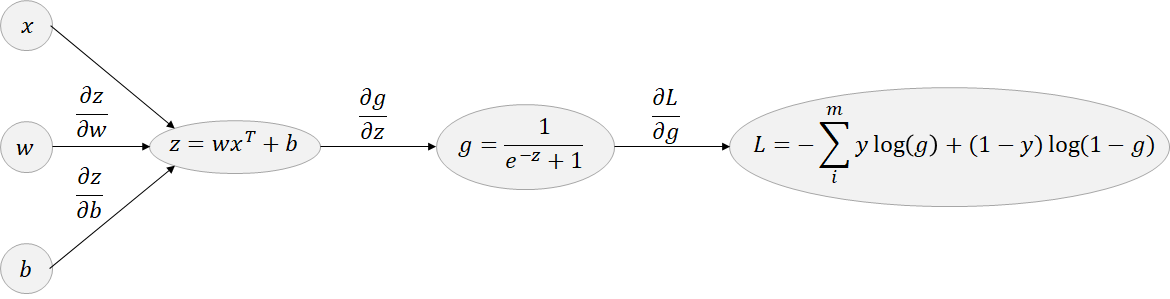

2.5.1 Example with the logistic model using cross-entropy loss

The logistic model can be viewed as a simple neural network with no hidden layer and only a single output node with a sigmoid activation function. The sigmoid activation function is applied to the linear combination of the features and provides the predicted value, which represents the probability that the input belongs to class one.

Figure 2.17: Forward pass: Logistic model

Forward pass: the forward propagation step consists of computing predictions for the training samples and measuring the error through a loss function, in order to then adapt the weights and the bias to decrease the error. The forward pass goes through the following equations:

$$z^{(i)} = w^T x^{(i)}+b$$

$$a^{(i)} = \hat{y}^{(i)} = \sigma(z^{(i)})$$

$$L(a^{(i)},y^{(i)}) = -y^{(i)}\log a^{(i)}-\left(1-y^{(i)}\right)\log\left(1-a^{(i)}\right)$$

$$J(w,b) = \frac{1}{m}\sum_{i=1}^{m} L(a^{(i)},y^{(i)})$$

The cost is the error we want to reduce by adjusting the weights and the bias. Variations of the gradient descent algorithm are used to update the parameters iteratively. Thus, we have to derive the gradients of the loss function in order to propagate the error back and adapt the model parameters $w$ and $b$.

Backward pass based on computation graph:

The chain rule is used and generally illustrated through a computation graph:

Figure 2.18: backpropagation: Logistic model

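First, to simplify this illustration, recall that:

$$J(w,b) = \frac{1}{m}\sum_{i=1}^{m} L(a^{(i)},y^{(i)})$$

Thus

$$\frac{\partial J}{\partial w_1} = \frac{1}{m}\sum_{i=1}^{m}\frac{\partial L(a^{(i)},y^{(i)})}{\partial w_1}$$

To get $\partial L/\partial w_1$, the chain rule is used by considering one sample with $z=w^T x+b$ and $a=\sigma(z)$ (the superscript $(i)$ is omitted).

Computation graphs are mainly used to show dependencies, which makes the equations for the gradients easy to derive. Thus, to compute the gradient of the cost (loss function), one only needs to go back through the computation graph and multiply the gradients by each other:

$$\frac{\partial L}{\partial w_1} = \frac{\partial L}{\partial a}\cdot\frac{\partial a}{\partial z}\cdot\frac{\partial z}{\partial w_1}$$

where $\frac{\partial L}{\partial a}=-\frac{y}{a}+\frac{1-y}{1-a}$ and $\frac{\partial a}{\partial z}=a(1-a)$.

Thus, since $\frac{\partial z}{\partial w_1}=x_1$,

$$\frac{\partial L}{\partial w_1} = (a-y)\,x_1$$

and so, $\frac{\partial J}{\partial w_1}=\frac{1}{m}\sum_{i=1}^{m}\left(a^{(i)}-y^{(i)}\right)x_1^{(i)}$. In the same vein, it follows

$$\frac{\partial J}{\partial b} = \frac{1}{m}\sum_{i=1}^{m}\left(a^{(i)}-y^{(i)}\right)$$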
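The forward and backward passes for a single sample can be traced step by step in R. The sketch below is an illustration under assumptions: the values of `x`, `y`, `w` and `b` are made up, and the variable names such as `dL_dz` are chosen here to mirror the partial derivatives of the computation graph.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# One training sample with two features (made-up values)
x <- c(0.5, -1.2); y <- 1
w <- c(0.1, 0.3);  b <- -0.2

# Forward pass
z <- sum(w * x) + b
a <- sigmoid(z)
loss <- -(y * log(a) + (1 - y) * log(1 - a))

# Backward pass: multiply the local derivatives along the graph
dL_da <- -y / a + (1 - y) / (1 - a)
da_dz <- a * (1 - a)
dL_dz <- dL_da * da_dz   # simplifies to a - y
dL_dw <- dL_dz * x       # since dz/dw_j = x_j
dL_db <- dL_dz           # since dz/db = 1

list(loss = loss, dL_dw = dL_dw, dL_db = dL_db)
```

A gradient descent step would then subtract the learning rate times `dL_dw` and `dL_db` from `w` and `b`.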

2.5.2 Updating weights using Backpropagation

In the neural network framework, the weights are updated using gradient descent.

The main steps for updating weights are

1. Take a batch of training samples

2. Forward propagation to get the corresponding cost

3. Backpropagate the cost to get the gradients

4. Update the weights using the gradients

5. Repeat steps 1 to 4 for a number of iterations

2.6 Backpropagation for the Softmax Shallow Network

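2.6.1 Reminder of some notation

We consider $K$ classes: $y\in\{1,\ldots,K\}$. Given a sample $x$, we want to estimate $p(y=k\mid x)$ for each $k=1,\ldots,K$. The softmax model is defined by $a_k=\exp(z_k)/\sum_{j=1}^{K}\exp(z_j)$, with $z_k=w_k^T x+b_k$, and we write $W$ to denote all the weights of our network. Then, $W=(w_1,\ldots,w_K)$ is a $d\times K$ matrix obtained by concatenating $w_1,\ldots,w_K$ into columns.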

2.6.2 Chain rule using cross-entropy loss

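Let us consider one training sample $(x,y)$. The cross-entropy loss is

$$L(a,y) = -\sum_{k=1}^{K} y_k\log a_k$$

where $a_k$ is the softmax output for class $k$ and $y_k$ is a binary variable indicating if $y$ is in the class $k$.

To get $\partial L/\partial z_i$, where $z_i=w_i^T x+b_i$, we need to use the multivariate chain rule:

$$\frac{\partial L}{\partial z_i} = \sum_{k=1}^{K}\frac{\partial L}{\partial a_k}\cdot\frac{\partial a_k}{\partial z_i}$$

First, we derive

- $\frac{\partial a_k}{\partial z_i}=a_i(1-a_i)$ if $i=k$

- $\frac{\partial a_k}{\partial z_i}=-a_i a_k$ if $i\neq k$

So we can rewrite it as

$$\frac{\partial a_k}{\partial z_i} = a_k\left(\delta_{ik}-a_i\right), \qquad \delta_{ik}=1 \text{ if } i=k \text{ and } 0 \text{ otherwise}$$

Thus, we get, using $\sum_{k=1}^{K}y_k=1$,

$$\frac{\partial L}{\partial z_i} = -\sum_{k=1}^{K}\frac{y_k}{a_k}\,a_k\left(\delta_{ik}-a_i\right) = -y_i+a_i\sum_{k=1}^{K}y_k = a_i-y_i$$

We can now derive the gradient for the weights as:

$$\frac{\partial L}{\partial w_i} = \frac{\partial L}{\partial z_i}\cdot\frac{\partial z_i}{\partial w_i} = (a_i-y_i)\,x$$

In the same way, we get

$$\frac{\partial L}{\partial b_i} = a_i-y_i$$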
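The softmax gradients can be verified numerically in the same spirit. In the sketch below, the feature vector `x`, the weight matrix `W` (one column per class), the biases `b` and the one-hot label `y` are made-up values, and the helper names are illustrative; one entry of the analytic gradient is compared with a central finite difference.

```r
softmax <- function(z) { e <- exp(z - max(z)); e / sum(e) }

x <- c(0.5, -1.2)                                         # two features
W <- matrix(c(0.1, 0.3, -0.2, 0.4, 0.0, -0.5), nrow = 2)  # d x K, one column per class
b <- c(0.1, -0.1, 0.0)
y <- c(0, 1, 0)                                           # one-hot encoding of class 2

loss <- function(W, b) -sum(y * log(softmax(as.vector(t(W) %*% x) + b)))

# Analytic gradients from the chain rule
a <- softmax(as.vector(t(W) %*% x) + b)
dL_dz <- a - y
dL_dW <- outer(x, dL_dz)   # column k is (a_k - y_k) * x
dL_db <- dL_dz

# Finite-difference check on one weight, W[1, 2]
eps <- 1e-6
Wp <- W; Wp[1, 2] <- Wp[1, 2] + eps
Wm <- W; Wm[1, 2] <- Wm[1, 2] - eps
dL_num <- (loss(Wp, b) - loss(Wm, b)) / (2 * eps)
c(analytic = dL_dW[1, 2], numeric = dL_num)
```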

2.6.3 Computation Graph could help

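Let us consider a simple example with two classes ($K=2$) and two features ($x_1$ and $x_2$). The computational graph for this softmax neural network model helps us to visualize the dependencies between nodes and then to derive the gradient of the cost (loss) with respect to each parameter ($w_{k,j}$ and $b_k$, $k,j=1,2$).

Figure 2.19: Computation Graph: softmax

Let’s write the chain rule as an example for $w_{1,1}$:

$$\frac{\partial L}{\partial w_{1,1}} = \frac{\partial L}{\partial a_1}\cdot\frac{\partial a_1}{\partial z_1}\cdot\frac{\partial z_1}{\partial w_{1,1}}+\frac{\partial L}{\partial a_2}\cdot\frac{\partial a_2}{\partial z_1}\cdot\frac{\partial z_1}{\partial w_{1,1}}$$

In fact, we are summing up the contribution of the change of $w_{1,1}$ over different "paths" (in red in the figure above). When we change $w_{1,1}$, $a_1$ and $a_2$ change as a result. Then, the change of $a_1$ and $a_2$ affects $L$. We sum up all the changes produced over $a_1$ and $a_2$ to $L$.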

import numpy as np

import matplotlib.pyplot as plt

import sympy

from scipy import optimize

Page built: 2021-03-04 using R version 4.0.3 (2020-10-10)