See the latest book content here.

3 Optimization Algorithms

In this chapter we focus on general approach to optimization for multivariate functions. In the previous chapter, we have seen three different variants of gradient descent methods, namely, batch gradient descent, stochastic gradient descent, and mini-batch gradient descent. One of these methods is chosen depending on the amount of data and a trade-off between the accuracy of the parameter estimation and the amount time it takes to perform the estimation. We have noted earlier that the mini-batch gradient descent strikes a balance between the other two methods and hence commonly used in practice. However, this method comes with few challenges that need to be addressed. We also focus on these issues in this chapter.

Learning outcomes from this chapter:

Several first-order optimization methods

Basic second-order optimization methods

This chapter closely follows chapters 4 and 5 of (Kochenderfer and Wheeler 2019). There are many interesting textbooks on optimization, including (Boyd and Vandenberghe 2004), (Sundaram 1996), and (Nesterov 2004),

3.1 Preliminaries

In this section, we establish some preliminaries that are necessary for understanding the methods discussed later.

3.1.1 Directional Derivative

Consider an -dimensional multivariate function over a feasible set .

The gradient of at , when exists, is given by which is a vector capturing the local slope of the function at .

The directional derivative of at in the direction of is defined by

Note that is the directional derivative at in the direction of the vector consists of at the -th location and zeros everywhere else. This simply follows from the observation that

Thus, is the directional derivative at in the direction of the vector .

Together, we can conclude that the directional derivative at in the direction is

where denotes the dot product between vectors.

- Alternatively, we can obtain the above relation between the gradient and directional derivative by observing from the first-order approximation that

Now take the limit on both the sides.

Exercise: Show that the directional derivative is maximum in the direction of the gradient. That is, for all unit length vectors , the choice maximizes .

Hint: The Cauchy–Schwarz inequality for vectors.

3.1.2 Python Basics

SymPy is a Python library for symbolic mathematics. We illustrate the applications of this library with some examples.

Finding derivatives: Suppose that we want to find the derivative of the following univariate function: For this, SymPy package can be used as follows:

import sympy

import numpy as np

t = sympy.Symbol('t', real=True)

f = sympy.exp(-t**2 / 2) * sympy.sin(sympy.pi*t)

dfdt = f.diff(t) # Taking derivative with respect to t

print("Output:\n f'(t) =", dfdt, "\n f'(t) =", sympy.latex(sympy.simplify(dfdt)))## Output:

## f'(t) = -t*exp(-t**2/2)*sin(pi*t) + pi*exp(-t**2/2)*cos(pi*t)

## f'(t) = \left(- t \sin{\left(\pi t \right)} + \pi \cos{\left(\pi t \right)}\right) e^{- \frac{t^{2}}{2}}The second output is a latex expression for

Finding gradient: Now consider the following multivariate function:

t1, t2 = sympy.symbols('t1, t2', real=True)

f = sympy.exp(-t1**2 / 2) * sympy.sin(sympy.pi*t2)

theta = (t1, t2)

grad = [f.diff(t) for t in theta]

print("Gradient of f: \n", grad)## Gradient of f:

## [-t1*exp(-t1**2/2)*sin(pi*t2), pi*exp(-t1**2/2)*cos(pi*t2)]Evaluating function values: Evaluating function value at a point using SymPy. Again suppose that

and we want to evaluate the value of at . The following code does it using subs and evalf:

t1, t2 = sympy.symbols('t1, t2', real=True)

f = sympy.exp(-t1**2 / 2) * sympy.sin(sympy.pi*t2)

print("value of f(1, 3/2):", (f.subs([(t1,1), (t2,3/2)])).evalf())## value of f(1, 3/2): -0.606530659712633Alternatively, we can use lambdify as follows:

t1, t2 = sympy.symbols('t1, t2', real=True)

f = sympy.exp(-t1**2 / 2) * sympy.sin(sympy.pi*t2)

f = sympy.lambdify((t1, t2), f)

print("value of f(1, 3/2):", f(1,3/2))## value of f(1, 3/2): -0.6065306597126334Note: Once we have the gradient of a function, it is easy to compute its directional derivative using (3.1).

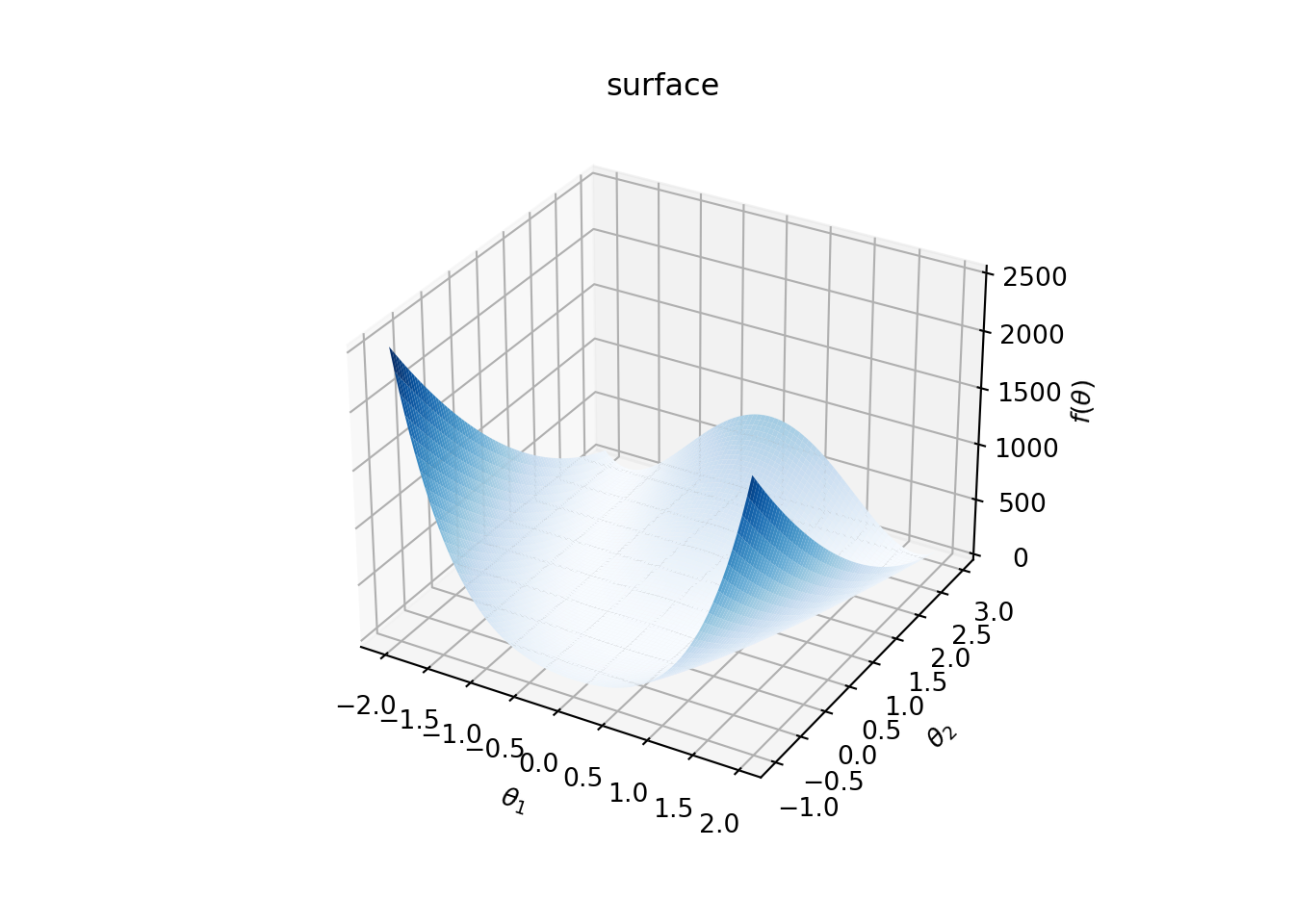

3d plot of functions: Finally, suppose that we would like make a 3d plot of Rosebrock function:

The following Python code plots for and over .

import matplotlib.pyplot as plt

a = 1.0

b = 100

t1, t2 = sympy.symbols('t1, t2', real=True)

f = (a - t1)**2 + b*(t2 - t1**2)**2

f = sympy.lambdify((t1, t2), f)

def fun(x, y):

return f(x,y)

t1_range = np.arange(-2.0,2, 0.05)

t2_range = np.arange(-1.0,3.0, 0.05)

X, Y = np.meshgrid(t1_range, t2_range)

Z = fun(X, Y)

fig = plt.figure()

ax = plt.axes(projection='3d')

ax.plot_surface(X, Y, Z, rstride=1, cstride=1,

cmap='Blues', edgecolor='none')ax.set_title('surface');

ax.set_xlabel(r'$\theta_1$')ax.set_ylabel(r'$\theta_2$')ax.set_zlabel(r'$f(\theta)$')plt.show()

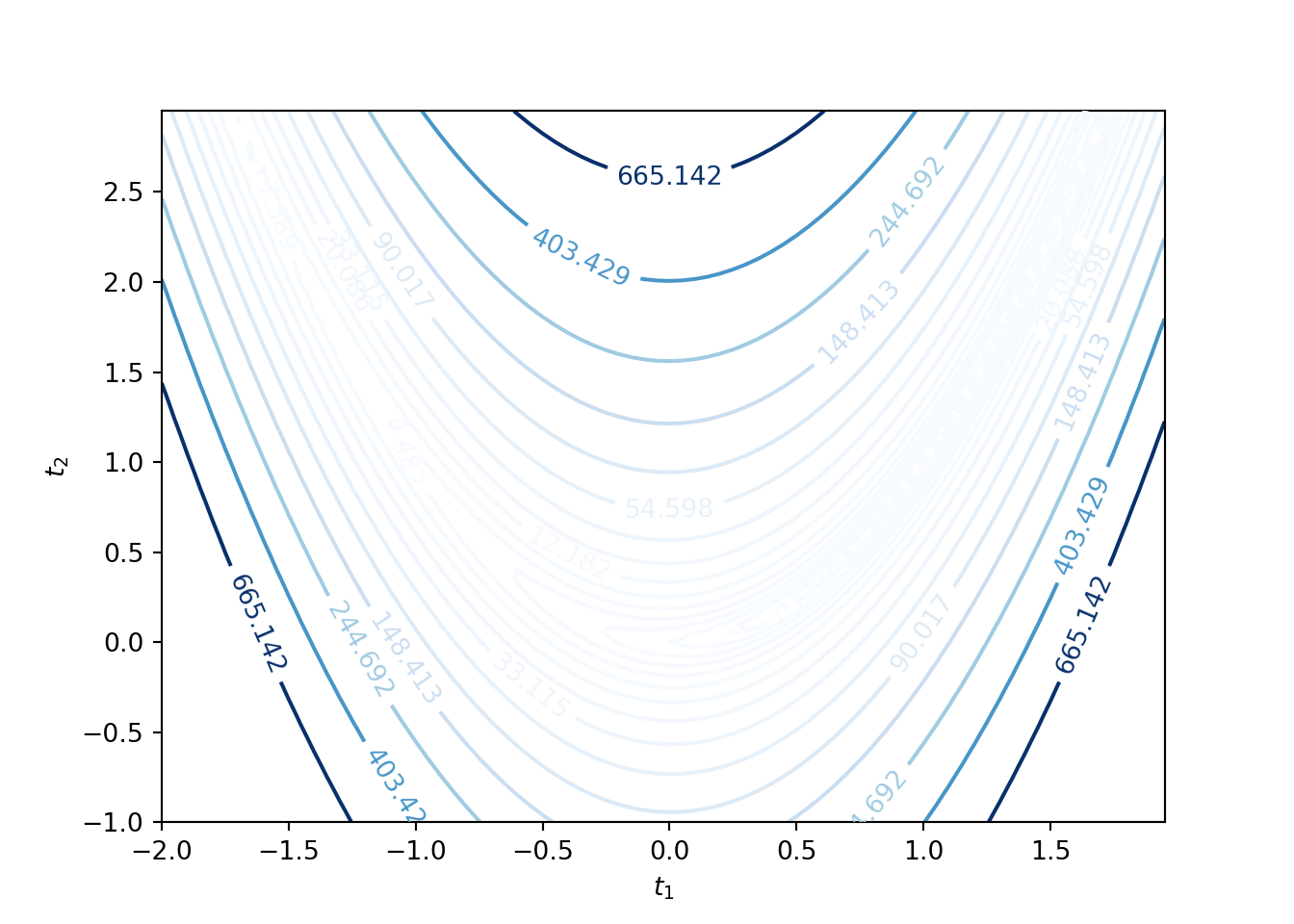

The corresponding contour plot is given by

CP = plt.contour(X, Y, Z, levels=np.exp(np.arange(-3, 7, 0.5)), cmap='Blues')

plt.clabel(CP, inline=1, fontsize=10)plt.xlabel(r'$t_1$')plt.ylabel(r'$t_2$')plt.show()

3.1.3 Local Minimum, Saddle Point, and Convexity

In this section, we look at several examples of 2-dimensional functions to understand the notions of local minimum, saddle point, and convexity.

Local Minimum: For a multivariate function , the necessary condition for a point to be at a local minumum is that the gradient and the Hessian is positive semidefinite , i.e., for all .

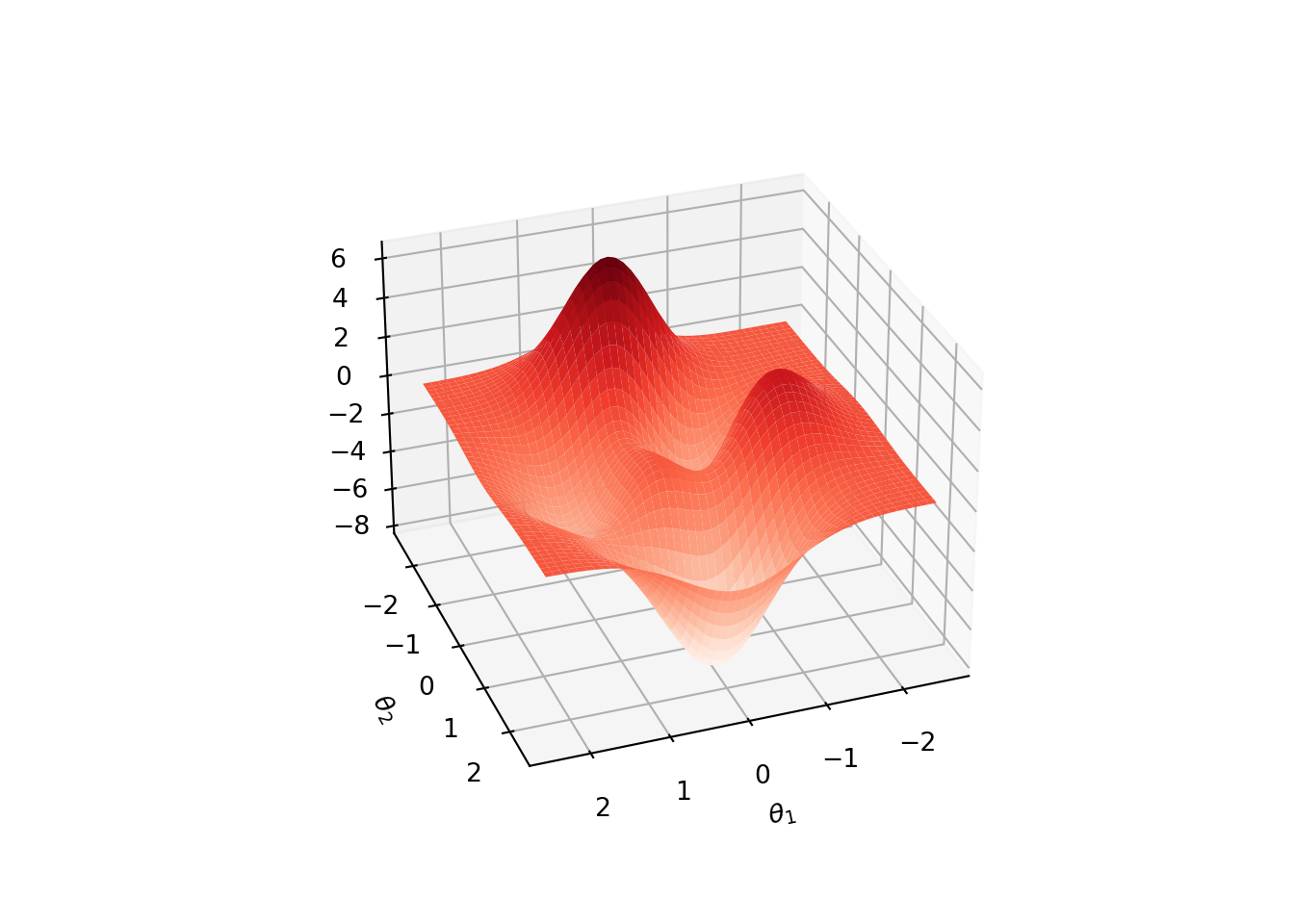

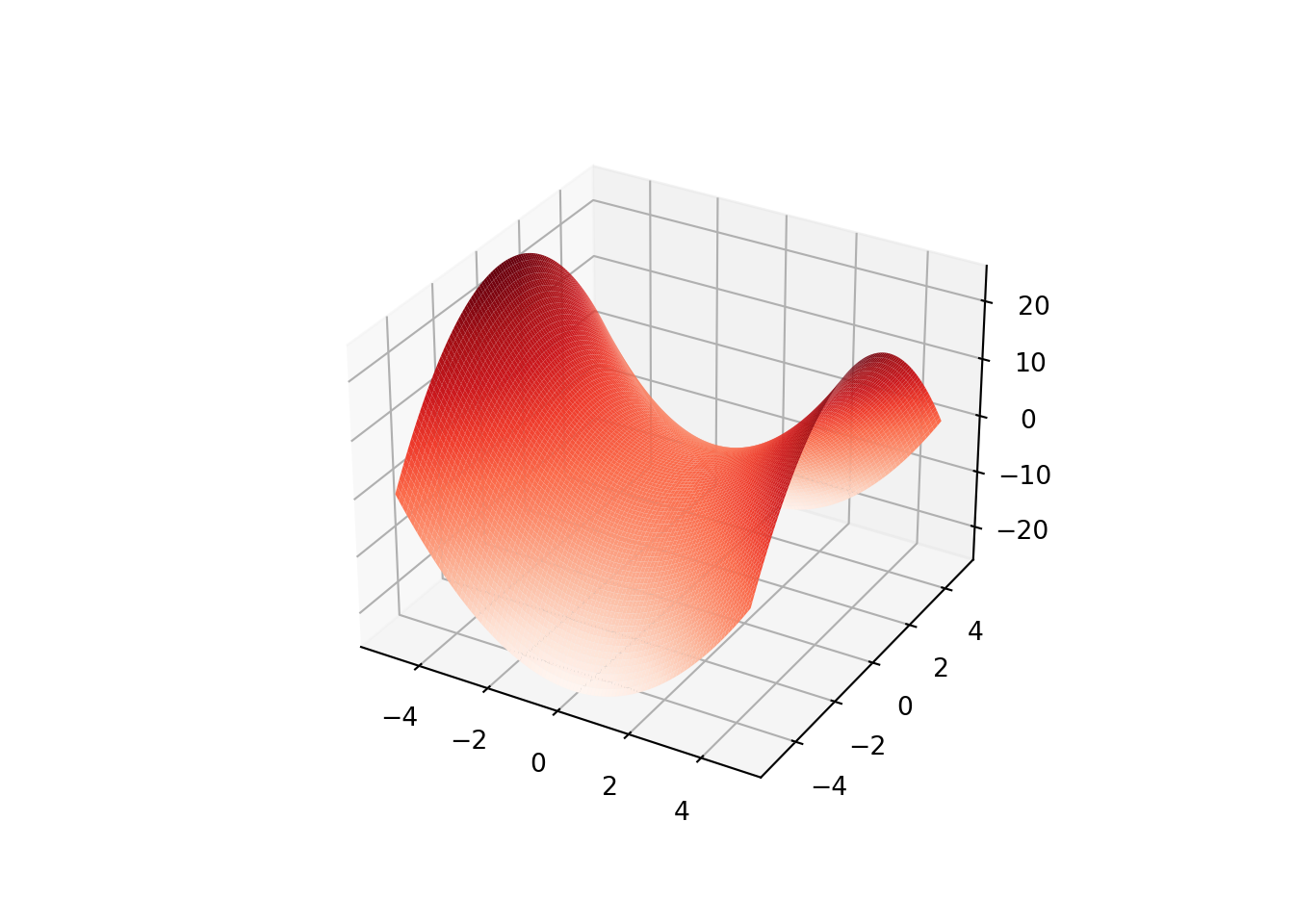

The following plot of a peaks function has multiple local minima (also mmultiple local maxima).

Convexity: A multivariate function is convex over a region if the Hessian is positive semidefinite for all . Furthermore, is strictly convex if the Hessian is poistive definite, i.e., for all .

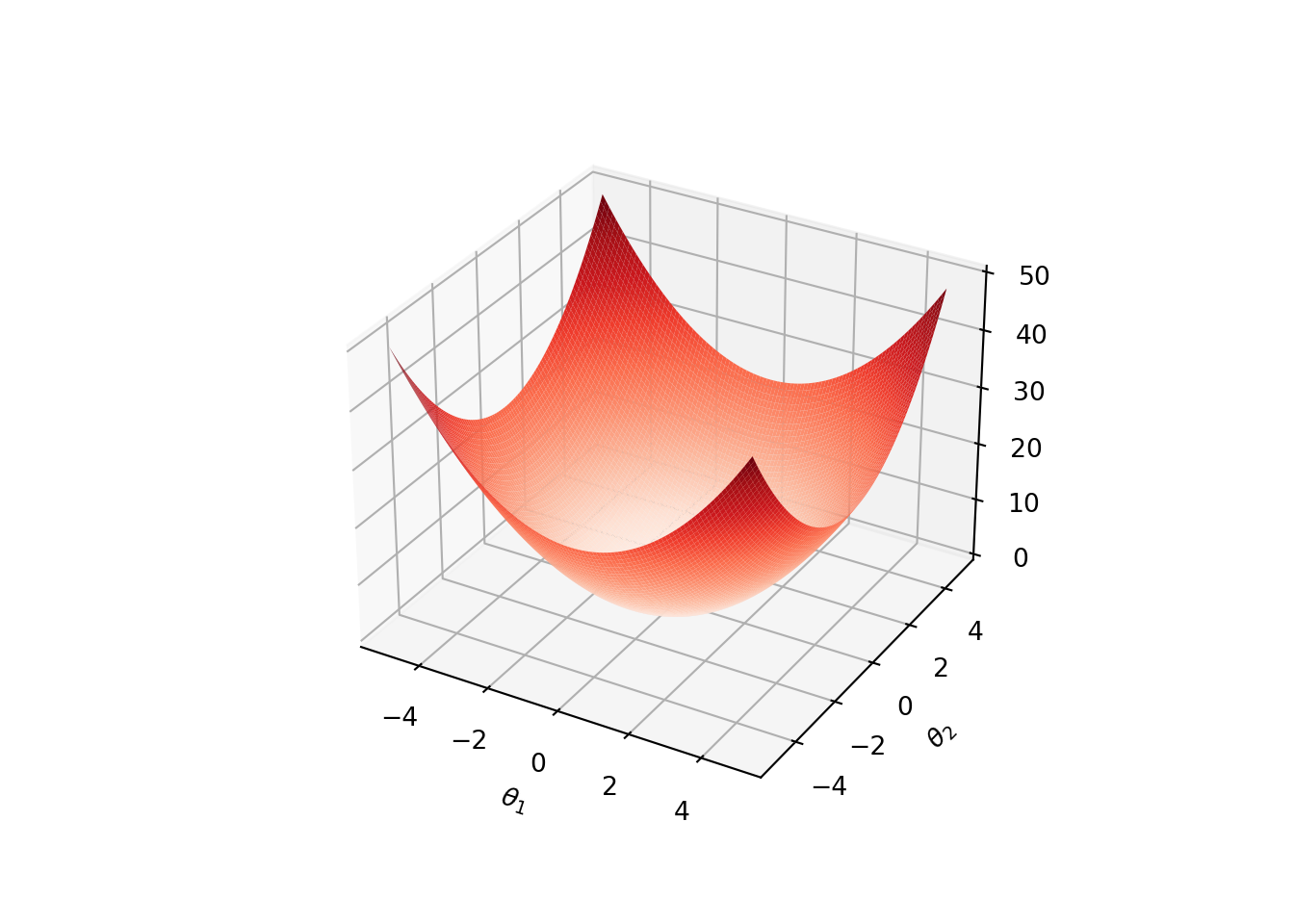

Convexity gurantees that all local minima are global minima. Strict convexity gurantees that the function has a unique global minimum. For an illustration, see the following plot of .

Saddle Point: A point is a saddle point of if the gradient , but is not a local extrememum of . Necessary condition for a point to be a saddle point is that the gradient and the Hessian is indefinite (neither positive semidefinite nor negative definite).

For plotted below, is a saddle point.

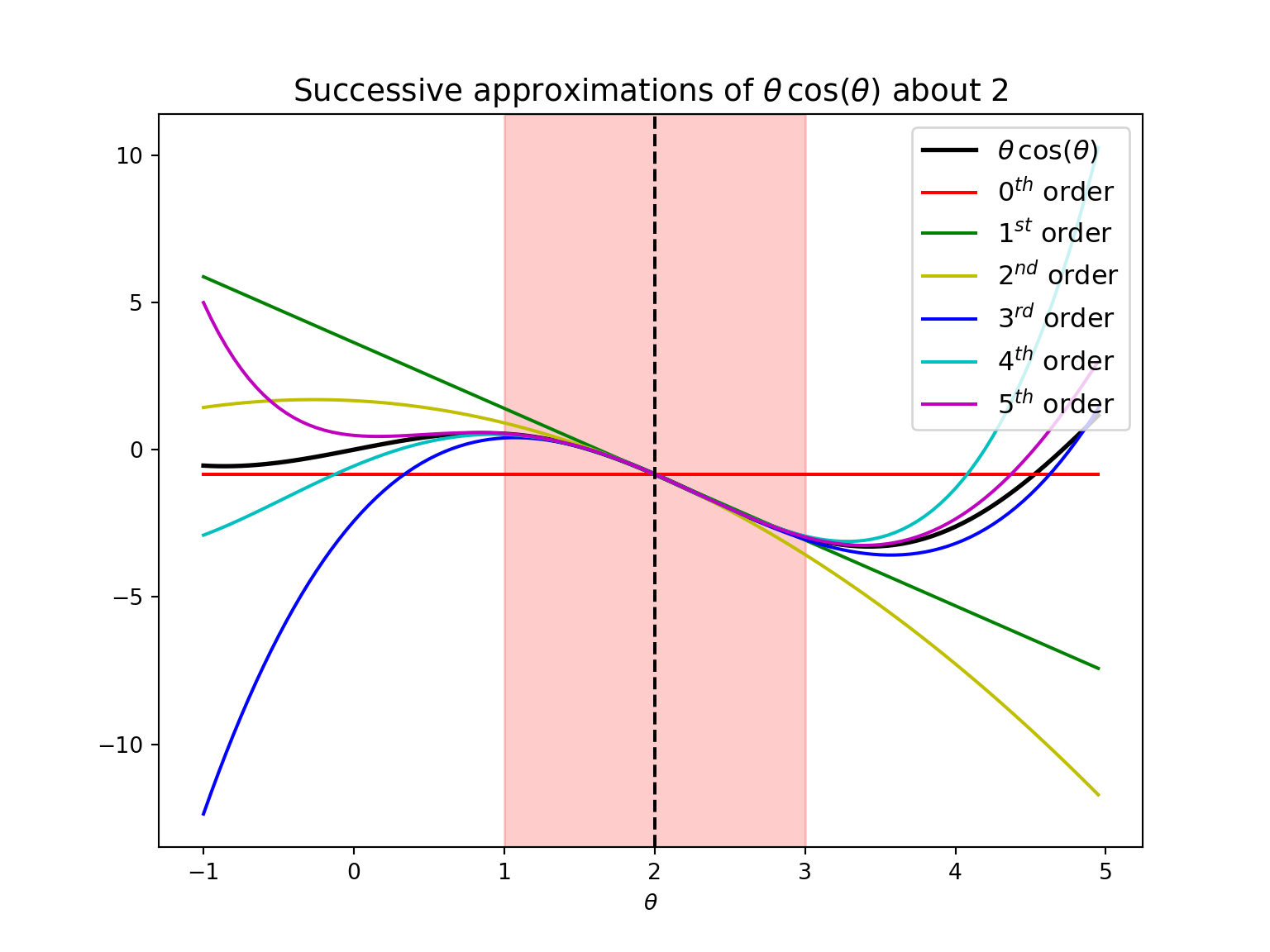

3.1.4 Taylor Expansion

Let be a univariate smooth function. Taylor expansion of about a point is given by

The order Taylor approximation of is given by ignoring all the terms of order more than in the Taylor expansion. That is,

is the order Taylor approximation of about .

Note that for order approximations, we only need to compute the first derivatives of the function.

Similarly, for a multivariate function , the Taylor expansion about a point is given by

Then, by ignoring the terms of order more than , we get order approximation of about .

3.2 General Framework of Local Descent Methods

In this section, we introduce a general framework of local descent methods.

- Given an -dimensional multivariate function over a feasible set , the general approach of optimization is to incrementally take steps on based on a local model so that the function value is decreased. That is we want to solve the following optimization problem:

We can think of being the cost function of a neural network where represents an unknown vector of parameters taking values on a known set , and the goal to find the value of that minimizes the cost.

The optimization methods that follow the common approach of the following pseudocode are called descent direction methods.

Algorithm : General approach of descent direction methods

- (Start with an initial design point )

- repeat

- Determine the descent direction

- Determine the step size of learning rate

- (The next design point)

- until satisfies a termination condition

- return

Following figure illustrates working of a descent direction method on a Rosenbrock function.

Different methods follow different approaches in finding the descent direction and step size or learning rate . Similarly the termination condition in Step 6 can change from method to method.

For each , denote the values of , and in the -th iteration of the algorithm by , and , respectively.

The descent direction of the next iteration is determined by the local information such as gradient and/or Hessian of the function at . Some methods normalize the descent direction so that for all .

The phrase step size is generally refers to the magnitude of the overall step, that is, step size in -th iteration is equal to . When , the learning rate is same as the step size.

In some algorithms, the step size is optimized so that it decreases the function maximally. However, it may come at an extra computational cost.

In conclusion, different methods use different approaches to find and .

3.3 Finding Step Size

In this section, we assume that the descent direction is given to us. Section 3.5 on first-order methods will deal with how to select . Directional derivative plays a key role in finding the step size in each iteration.

3.3.1 Line Search

- Line search is used to find the optimal learning rate for a given descent direction . That is, line search is a univariate optimization problem to find the solution of

Any univariate optimization method can be used for solving the above problem.

In many cases, the cost of the above optimization problem can be much higher. Instead, a reasonable value of can do the job.

It is indeed common to fix a sequence in advance. In particular, a well-known method is to use decaying step factor: for some .

Decaying step factors are quite popular in machine learning when minimizing noisy functions.

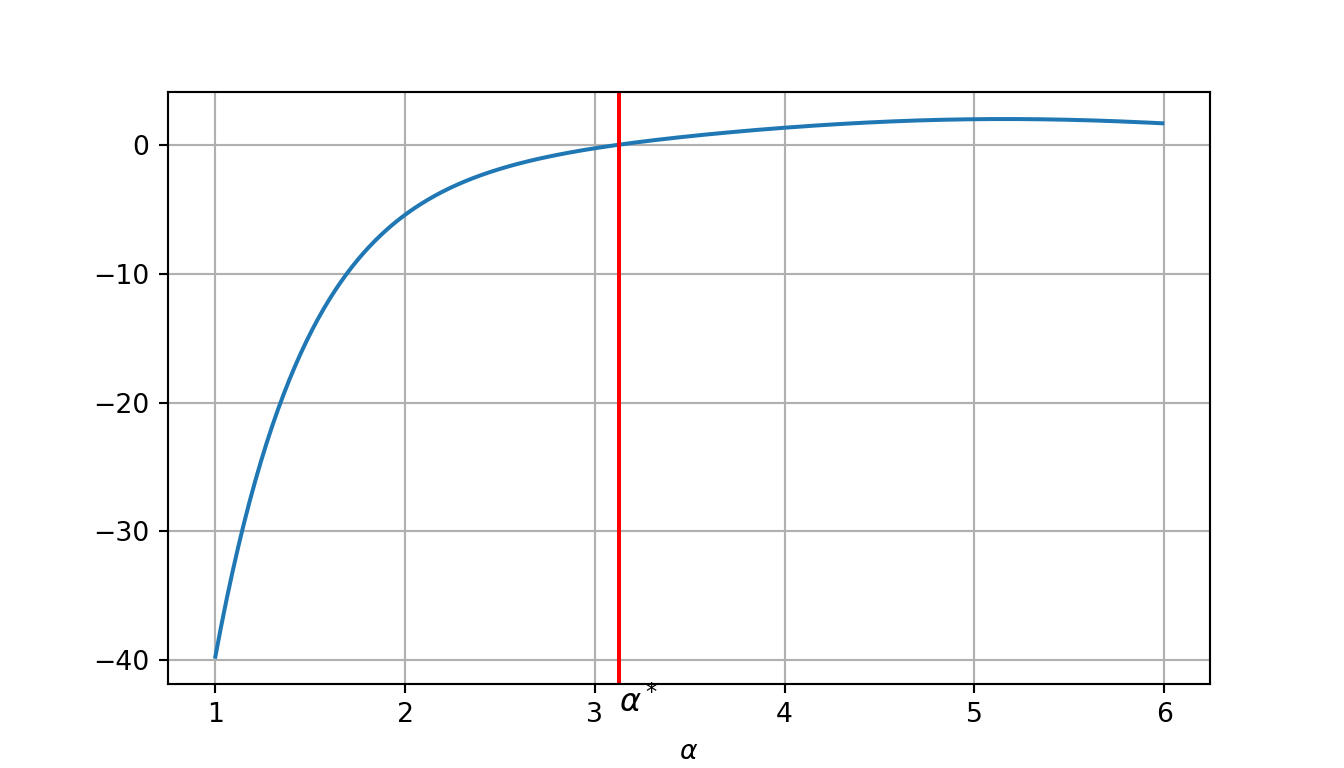

Example: Consider the function

for . The goal is to find that solves (3.3) from in the direction .

Then the optimization problem (3.3) becomes:

That is the optimal is a solution of

which can be solved using any standard optimization method like Newton-Raphson method, as shown in the following python code.

import numpy as np

import matplotlib.pyplot as plt

from scipy import optimize

g = lambda alpha : - np.cos(2 - alpha) - 2*np.exp(5 - 2*alpha) + 1

x_values = np.arange(1, 6, 0.01)

y_values = [g(x_values[i]) for i in range(x_values.shape[0])]

sol = optimize.newton(g, 0)

plt.figure(figsize=(7,4))## <Figure size 700x400 with 0 Axes>plt.plot(x_values, y_values)## [<matplotlib.lines.Line2D object at 0x7fb391b48b38>]plt.grid()

plt.axvline(x=sol, color='red')## <matplotlib.lines.Line2D object at 0x7fb391b48da0>plt.xlabel(r'$\alpha$')## Text(0.5, 0, '$\\alpha$')plt.text(sol, -44, r'$\alpha^*$', fontsize=12)## Text(3.127045611348646, -44, '$\\alpha^*$')plt.show()

print('Solution:', sol)## Solution: 3.1270456113486463.3.2 Approximate Line Search

When solving the optimization problem in each iteration of the exact line search is expensive, it is economical to take more steps with approximate line search.

Sufficient decrease condition: Select the step size and such that for a given descent direction .

Sufficient decrease condition is also known as Armijo condition or the first Wolfe condition.

Typically, in practice, is taken to be .

Issue: The first Wolfe condition doesn’t guarantee convergence to a local minimum. This is because, very small steps can satisfy the condition and that can cause premature convergence.

Backtracking line search: To remedy the above issue, backtracking line search is used. In each iteration, a largest possible step size is taken. Then, decrease it sequentially until the sufficient decrease condition is satisfied. This guarantees convergence to a local minimum.

Example: Again consider Rosenbrock function,

The backtracking line search from in the direction . Initially , and at each iteration, it is decreased as .

import numpy as np

import sympy

def backtracking_line_search(f, grad, t, d, a, p = 0.5, beta= 1e-4):

f_lam = sympy.lambdify((t1, t2), f)

z = f_lam(t[0], t[1])

g = [s.subs([(t1, t[0]), (t2, t[1])]).evalf() for s in grad]

theta_next = t + a*d

while f_lam(theta_next[0], theta_next[1]) > z + a*beta*np.dot(g, d):

a *= p

theta_next = t + a*d

return a

t1, t2 = sympy.symbols('t1, t2', real=True)

f = (1.0 - t1)**2 + 100*(t2 - t1**2)**2

theta = (t1, t2)

grad = [f.diff(t) for t in theta]

t = np.array([-0.5, 1.0])

d = np.array([-1, -1.5])

a_init = 1.0

sol = backtracking_line_search(f, grad, t, d, a_init)

print('Solution:', sol)## Solution: 0.25Curvature condition: An alternative to Backtracking line search, the curvature condition requires that where controls how shallow the next directional derivative should be. In practice it is common to take , with when it is used for the conjugate gradient method and when it is used for Newton’s method.

Strong Wolfe condition: It is an alternative to and stronger than the curvature condition. Strong Wolfe condition:

Wolfe conditions: The sufficient decrease condition and the curvature condition together are called the Wolfe conditions, which guarantee convergence to a local minimum.

strong Wolfe conditions: The sufficient decrease condition and the strong Wolfe condition together are called the strong Wolfe conditions, which again guarantee convergence to a local minimum.

Exercise: Conduct approximate line search on from in the direction such that the Wolfe conditions satisfy.

Take the maximum step size to be (i.e., start with ), reduction factor (i.e., in iteration ), the first Wolfe condition parameter , and the second Wolfe condition parameter .

Hint: The Wolfe conditions will be satisfied on the iteration, and the final and the final .

Exercise: Recall that for any point and direction , the Wolfe condition is

For , find the maximum stepsize that satisfies this condition when , , and .

3.4 Termination Conditions

Here, we discuss four commonly used termination conditions.

Maximum number of iterations: Under this condition, the algorithm is terminated either when the number of iterations exceeds a pre-selected number , or the total running time of the algorithm exceeds a pre-selected time . This is useful when there is a strict running time constraint, because for all the other termination conditions mentioned baove, it is hard to say hong the algorithm takes to terminate.

The plot in Section~3.5.6 compares the performance of different gradient methods with the same . Evidently, it will be clear that the same choice of does not work for all the methods.Absolute Improvement: Algorithm is terminated if the change in the function value is smaller than a pre-selected threshold over subsequent steps. That is, the termination condition is,

Gradient Magnitude: Algorithm is terminated if the magnitude of the derivative is smaller than a pre-selected threshold , that is,

Relative Improvement: Algorithm is terminated if the relative change is smaller than a pre-selected threshold over subsequent steps. That is, the termination condition is

Relative improvement condition is particularly useful when the region around the (local) minimum is shallow.

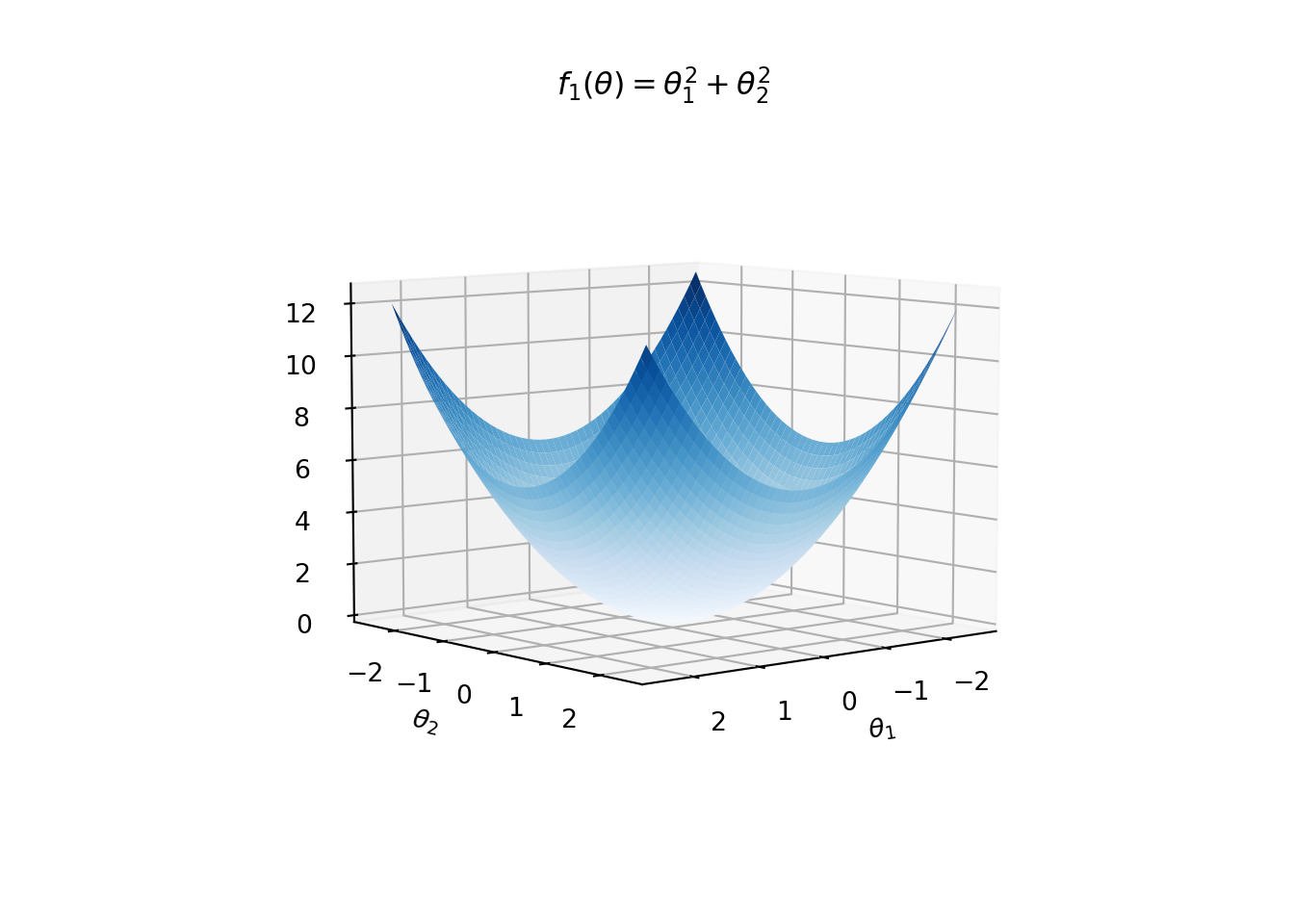

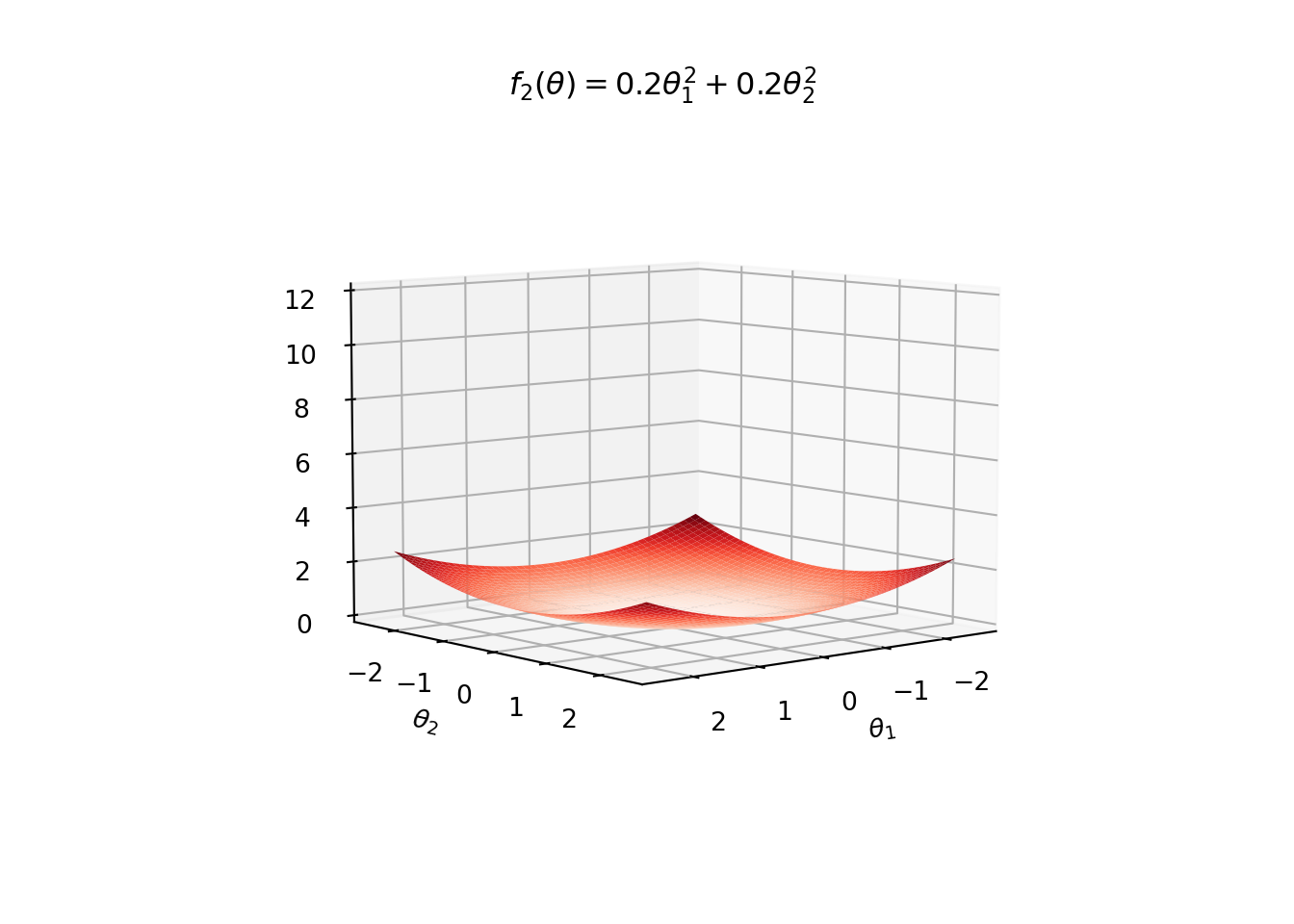

Example: For instance, in the following figure, we see surface plots for

and

Both of these functions have global minimum at . However, is shallower around than .

Suppose that a descent direction algorithm takes a step of size in each iteraction. We see that the relative improvement condition works well for both the functions.

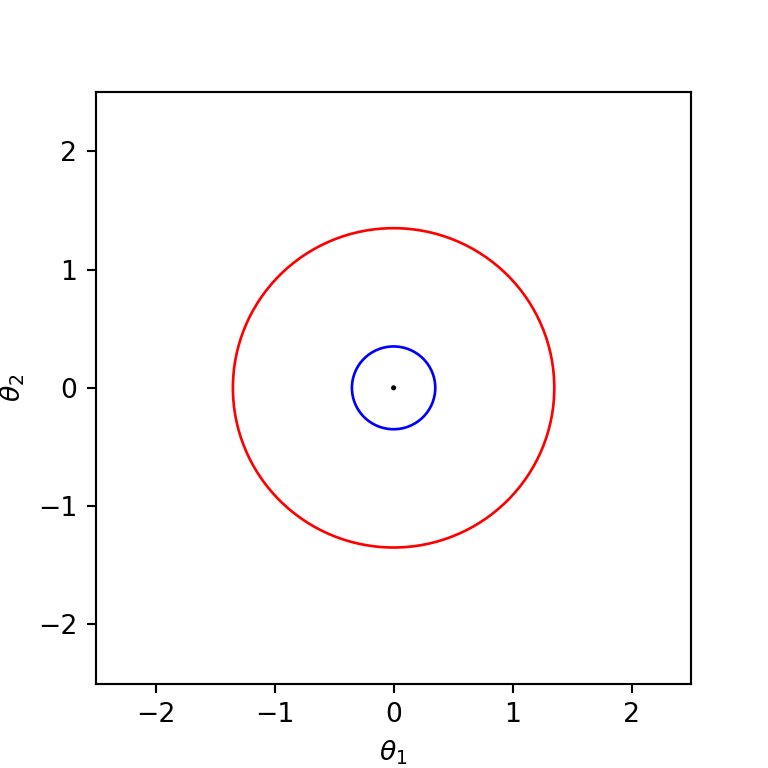

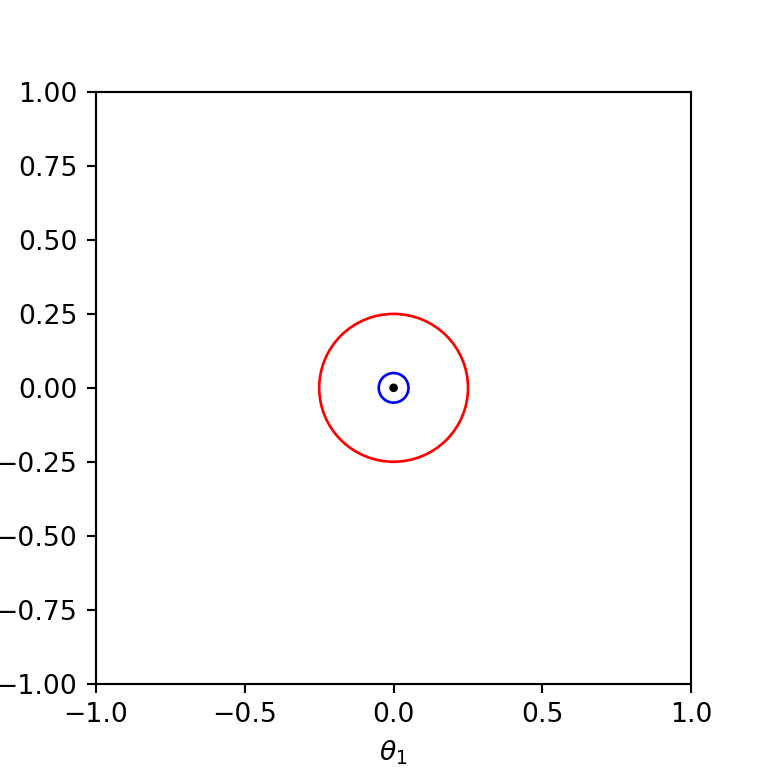

Absolute Improvement: First assume that the algorithm uses the absolute improvement condition with . Then for , should be within the blue cicle (in the figure below) before the termination, while for , the algorithm can terminate when takes a value within the red circle.

Gradient Magnitude: Assume that the gardient magnitude condition with in the algorithm. Then the algorithm terminates for when is within the blue circle shown in the following figure, while for it terminates when is within the red circle.

Relative Improvement: Finally assume that the algorithm uses the relative improvement condition with for the termination. Then for both the functions, the algorithm terminates only when is within the circle centered at the origion with radius . This is because the relative improvement is the same for both the functions, i.e.,

for any and .

3.5 First-Order Methods

Finally, we focus on the most important aspect of the local descent methods: finding the descent direction . As mentioned earlier, first-order methods use the gradient of the function for selecting appropriate descent direction in each iteration.

3.5.1 Gradient Descent

An obvious choice for the descent direction is in the direction of steepest descent.

Steepest descent direction guarantees an improvement, provided that the function is smooth, the step size is sufficiently small, and we are not at a minimum point (i.e., non-zero gradient).

Again let be the design point in the -th iteration. Define,

The steepest direction in the -th iteration is the direction opposite the gradient (hence the name gradient descent).

The descent direction is typically the normalized steepest descent, that is, where the negative sign implies that the descent direction is opposite the gradient.

Observation: If the step size is selected using exact line search: then the gradient descent can result in zig-zagging. To see this, observe that Consequently, the directional derivative , because Since we have That is, the descent direction in each iteration is perpendicular to the descent direction of the previous iteration. Therefore, we obtain jagged search paths.

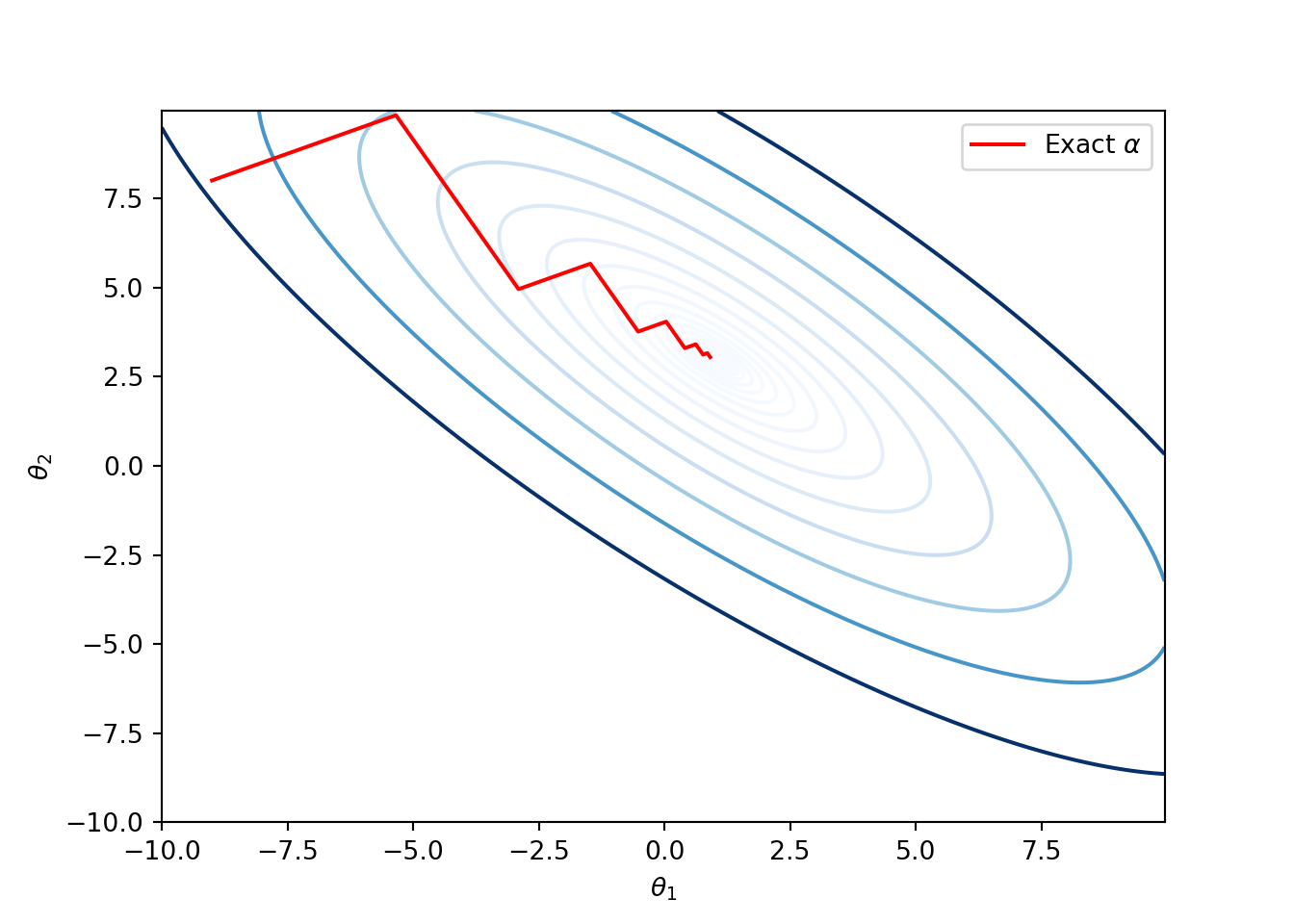

Example: Consider Booth’s function, a 2-dimensional quadratic function: This has a global minimum at . The following code implements gradient-descent method with exact line search. We start with .

def f(t):

return (t[0] + 2*t[1] - 7)**2 + (2*t[0] + t[1] -5)**2

def grad(t):

return np.array([10*t[0] + 8*t[1] - 34, 8*t[0] + 10*t[1] - 38])

def line_search_exact(t, d):

nume = (t[0] + 2*t[1] - 7)*(d[0] + 2*d[1]) + (2*t[0] + t[1] - 5)*(2*d[0] + d[1])

deno = (d[0] + 2*d[1])**2 + (2*d[0] + d[1])**2

return -nume/deno

def GradientDescent_exact(f, grad, t, niter):

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

t_seq = [t]

d_seq = [d]

for _ in range(niter):

alpha = line_search_exact(t, d)

# Theta update

t = t + alpha*d

t_seq.append(t)

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

d_seq.append(d)

print('Final point of GD:', t)

t_seq = np.array(t_seq)

d_seq = np.array(d_seq)

return t_seq, d_seq

t_init = np.array([-9.0, 8.0])

t1_range = np.arange(-10.0, 10.0, 0.05)

t2_range = np.arange(-10.0, 10.0, 0.05)

A = np.meshgrid(t1_range, t2_range)

Z = f(A)

fig, ax = plt.subplots()

ax.contour(t1_range, t2_range, Z, levels=np.exp(np.arange(-10, 6, 0.5)), cmap='Blues')t_seq_gd, d_seq_gd = GradientDescent_exact(f, grad, t_init, niter=10)ax.plot(t_seq_gd[:, 0], t_seq_gd[:, 1], '-r', label=r'Exact $\alpha$')

plt.xlabel(r'$\theta_1$')

plt.ylabel(r'$\theta_2$')

plt.legend()

plt.show()

Run the following lines to print the sequence of directions , the sequence of parameters , and the gradient vectors .

for i in range(len(d_seq_gd)):

print('iteration:', i+1)

print('\t d = ', d_seq_gd[i])

print('\t t = ', t_seq_gd[i])

print('\t Gradient =', grad(t_seq_gd[i]))Example: Since, the gradient descent method follows the steepest descent direction, ideally speaking it should behave like water flowing from and eventually reaching the local minimum. This happens when the step size is very small as illustrated in the following figure. They key drawback of small step size is that the number computations can drastically increase compared to the earlier exact line search version. For instance, in the following code we used iterations for the exact line search and for the small step size of .

def GradientDescent_fixed(f, grad, t, alpha, niter):

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

t_seq = [t]

d_seq = [d]

for _ in range(niter):

# Theta update

t = t + alpha*d

t_seq.append(t)

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

d_seq.append(d)

print('Final point of GD:', t)

t_seq = np.array(t_seq)

d_seq = np.array(d_seq)

return t_seq, d_seq

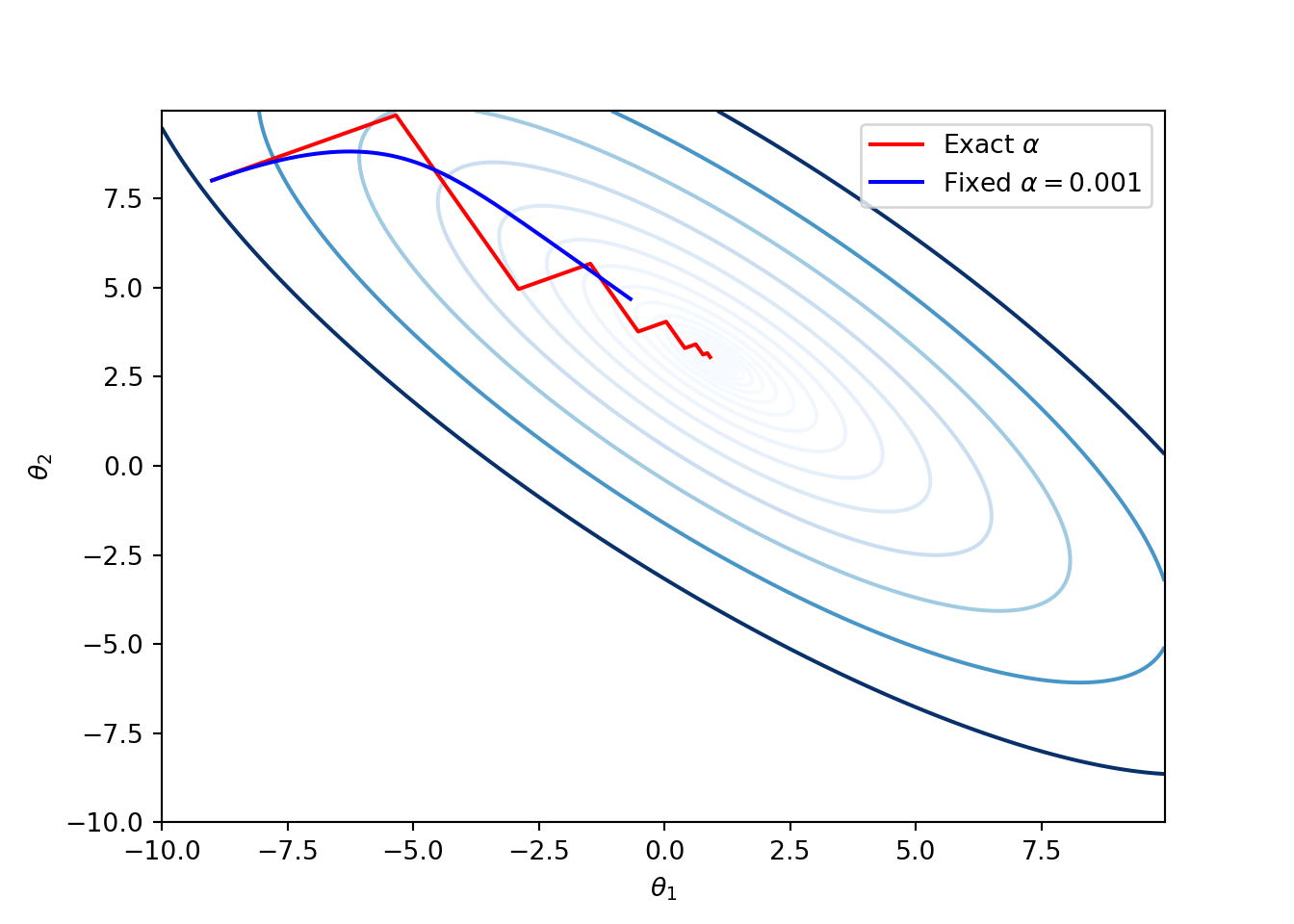

alpha = 0.001

t_init = np.array([-9.0, 8.0])

t1_range = np.arange(-10.0, 10.0, 0.05)

t2_range = np.arange(-10.0, 10.0, 0.05)

A = np.meshgrid(t1_range, t2_range)

Z = f(A)

fig, ax = plt.subplots()

ax.contour(t1_range, t2_range, Z, levels=np.exp(np.arange(-10, 6, 0.5)), cmap='Blues')ax.plot(t_seq_gd[:, 0], t_seq_gd[:, 1], '-r', label=r'Exact $\alpha$')

t_seq_gd_fixed, d_seq_gd_fixed = GradientDescent_fixed(f, grad, t_init, alpha, niter=10000)ax.plot(t_seq_gd_fixed[:, 0], t_seq_gd_fixed[:, 1], '-b', label=r'Fixed $\alpha = 0.001$')

plt.xlabel(r'$\theta_1$')

plt.ylabel(r'$\theta_2$')

plt.legend()

plt.show()

Exercise: For the above Booth’s function, prove that the global minimum is achieved at by solving , and find the value of .

3.5.2 Cojugate Gradient

Performance of the gradient descent method can be poor in narrow valleys. The conjugate descent method addresses this issue.

The Conjugate gradient method works well for optimizing quadratic functions: where is a symmetric positive definite matrix. Then the optimization problem has a unique solution.

The descent directions of the algorithm are chosen so that they are mutually conjugate with respect to , that is,

The algorithm uses line search in each iteration to find the step factor . It is an easy problem for quadratic functions. Because to solve , we can easily solve

Observe that

Equating this to zero results inThe algorithm starts in the steepest descent direction: So,

In the subsequent iterations where the scalar parameter is selected so that and are mutually conjugate with respect to , as shown below:

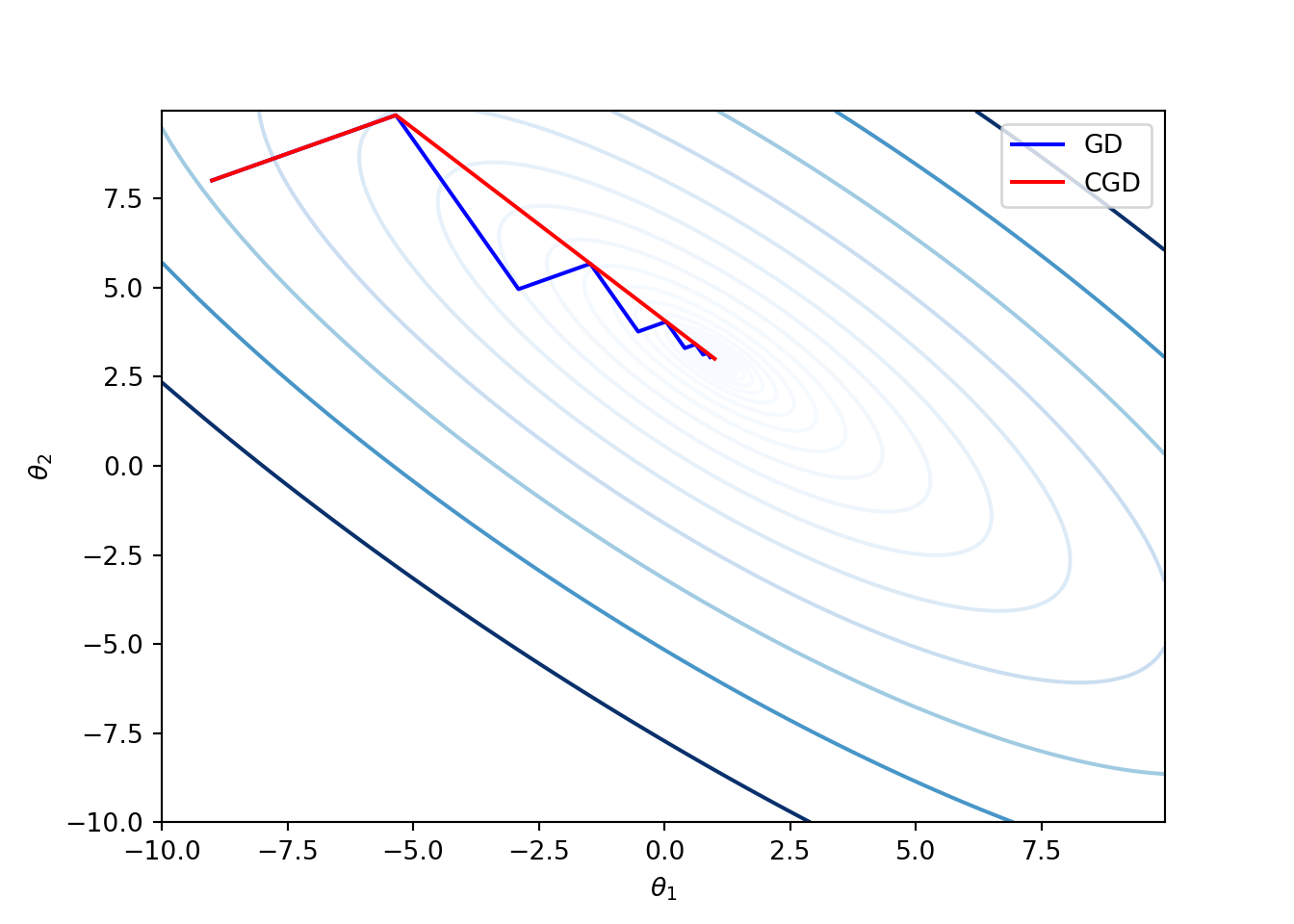

Example: Again consider the Booth’s function from the previous example:

The following code implements the conjugate gradient method.

def ConjGradientDescent_exact(f, grad, t, A, b, niter):

g = grad(t)

d = -g

t_seq = [t]

d_seq = [d]

for _ in range(niter):

alpha = line_search_exact(t, d)

# Theta update

t = t + alpha*d

g = grad(t)

Ad = A@d

beta = np.dot(g, Ad)/(np.dot(d, Ad))

d = -g + beta*d

if np.linalg.norm(d) < 10e-16:

t_seq = np.array(t_seq)

d_seq = np.array(d_seq)

return t_seq, d_seq

t_seq.append(t)

d_seq.append(d)

print('Final point of GD:', t)

t_seq = np.array(t_seq)

d_seq = np.array(d_seq)

return t_seq, d_seq

A = np.array([[10, 8], [8, 10]])

b = -np.array([34, 38])

t1_range = np.arange(-10.0, 10.0, 0.05)

t2_range = np.arange(-10.0, 10.0, 0.05)

XY = np.meshgrid(t1_range, t2_range)

Z = f(XY)

plt.contour(t1_range, t2_range, Z, levels=np.exp(np.arange(-3, 7, 0.5)), cmap='Blues')t_seq_gd, _ = GradientDescent_exact(f, grad, t_init, niter=10)plt.plot(t_seq_gd[:, 0], t_seq_gd[:, 1], '-b', label='GD')t_seq_cgd, _ = ConjGradientDescent_exact(f, grad, t_init, A, b, niter=10)

plt.plot(t_seq_cgd[:, 0], t_seq_cgd[:, 1], '-r', label='CGD')plt.xlabel(r'$\theta_1$')plt.ylabel(r'$\theta_2$')plt.legend()plt.show()

The above figure illustrates that the conjugate gradient method can have faster convergence compared to the gradient descent method.

-

The conjugate method can also be applied to non-quadratic functions as well because smooth continuous functions behave like quadratic functions close to the minimum. However, unfortunately, it is hard to find that best approximates locally. Observe that is needed for computing values. The following choices for seems to work well in practice.

Fletcher-Reeves:

Polak-Ribiere:

Exercise: Modify and run the python code for conjugate gradient with the above two choices of update.

3.5.3 Challenges Posed by the Above Gradient Descent Methods

Gradient descent methods offer a few important challenges that meed to be addressed to make them useful in the neural network set-up.

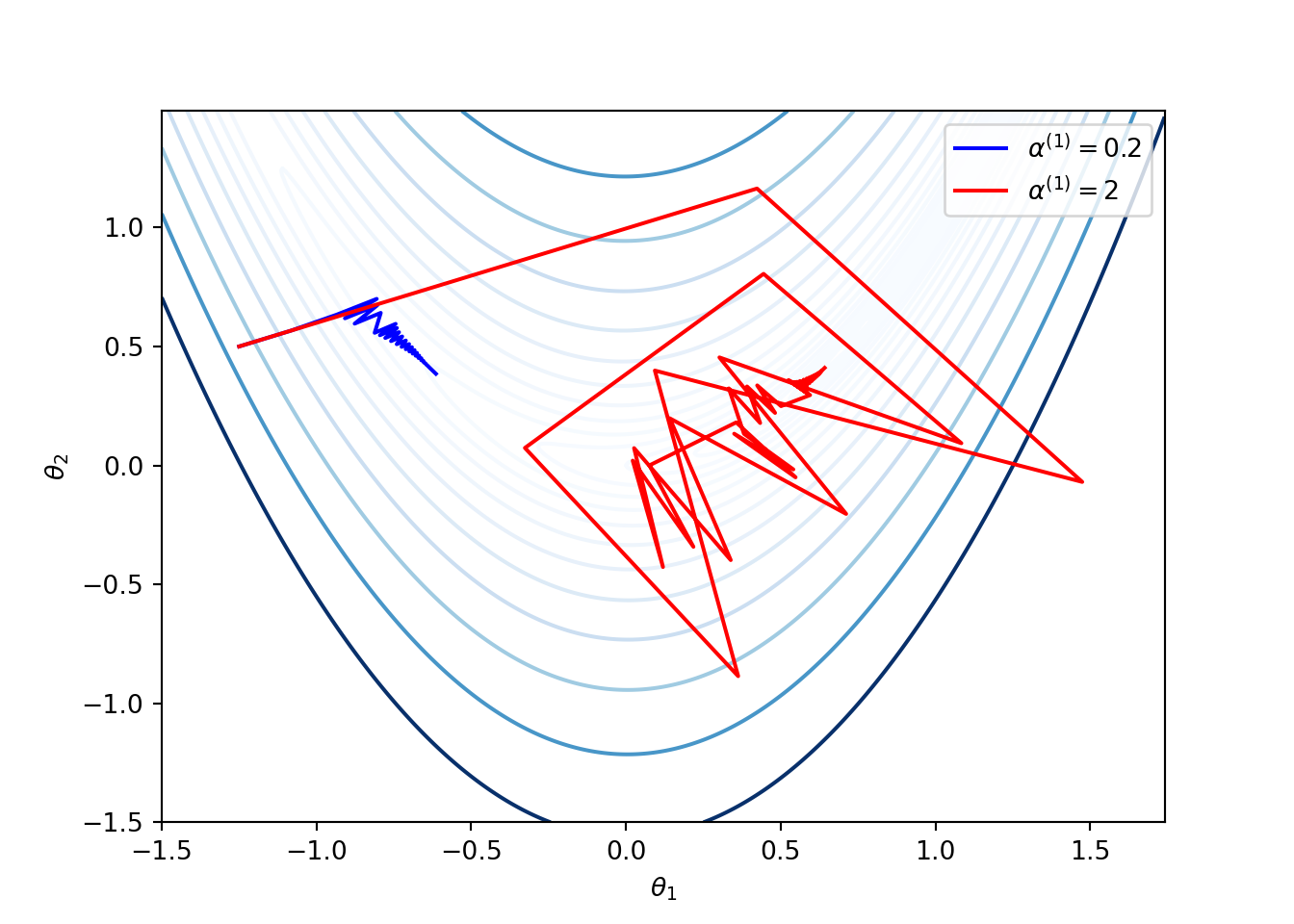

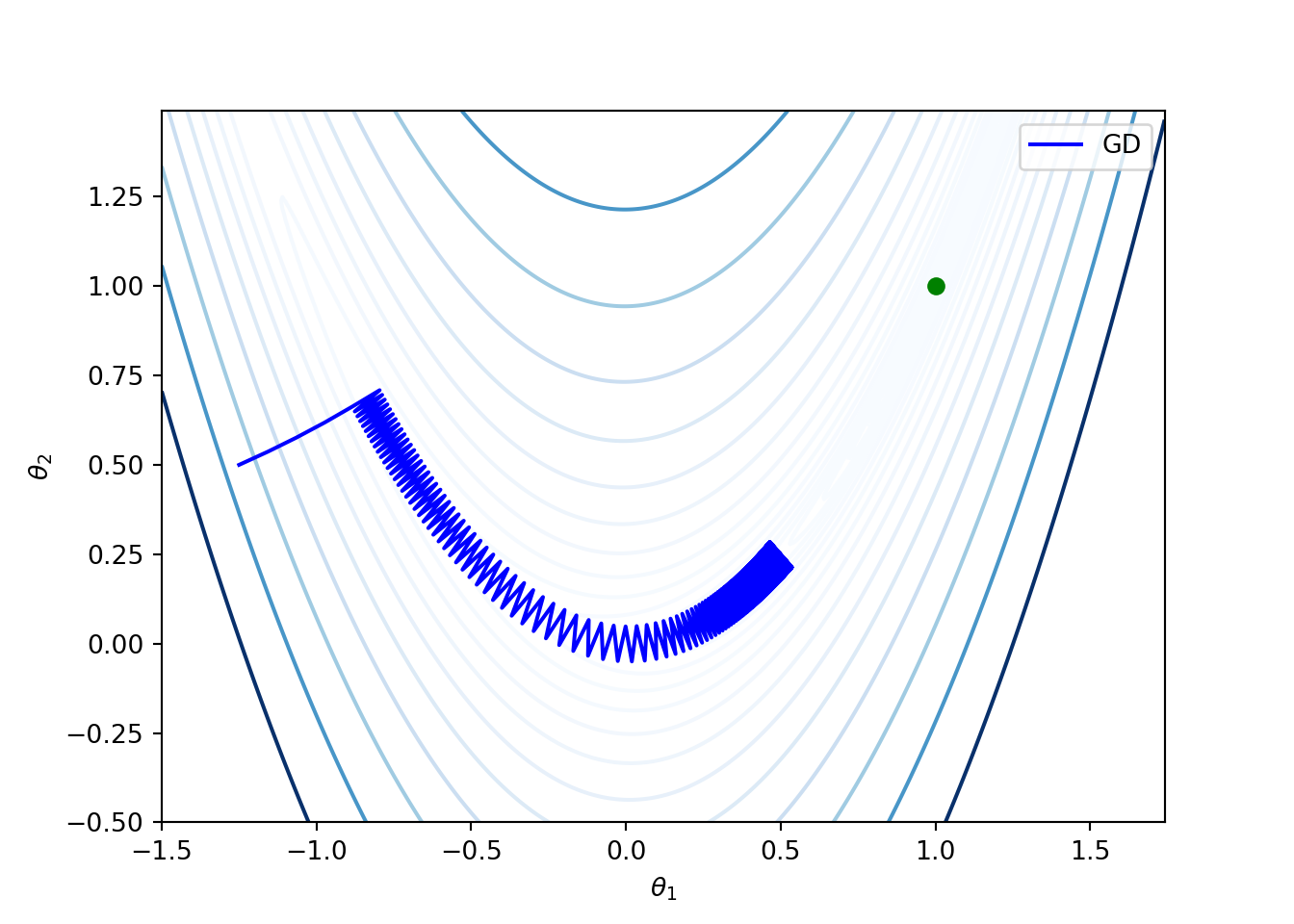

- Selection of the learning rate can be difficult. Small values of learning rate can result in slow convergence and large values can result in the loss function to fluctuate around the minimum, or even diverge. The following figure shows applications of the gradient descent method for Rosenbrock function given in (3.4) with two choices of , where the learning rates are updated as .

Some gradient descent methods try to adjust the learning rate during training by reducing the rate according to a pre-defined schedule or when the change in cost function between epochs falls below a threshold. These schedules and thresholds are unable to adapt to the characteristics of the dataset as they are defined in advance.

Applying the same learning rate to all parameter updates might not be a good idea as larger updates on rarely occurring features may result in faster convergences.

Gradient descent methods take time to traverse plateaus surrounding saddle points as the gradient in this region is close to zero in all directions.

For instance, once again consider the Rosenbrock function given in (3.4). Note that for this function, the global minimum is achieved at . The following figure shows that the gradient descent method is slow along the valley. This is because the gradient over a nearly flat region has small magnitude, and thus the gradient descent method can require many iterations to converge to a local minimum (same as the global minimum for the Rosenbrock function). The this experiment, the initial point , and the learning rate is fixed at .

From now onwards, we focus on a sequence of modified gradient descent methods for addressing the above issues.

3.5.4 Momentum

As mentioned above, the gradient methods take a long time to traverse over almost flat surfaces. Incorporating momentum accelerates the algorithm in the relevant directional and dampens oscillations.

The momentum updates at the -the iteration are

for a scalar parameter . When , we have the standard gradient descent method.Interpretation: Think of a ball rolling down a nearly flat surface. Due to the gravity, the ball accumulates momentum as it rolls down, becoming faster and faster with time.

3.5.5 Nesterov Momentum

One problem with the momentum is that the steps do not slow down enough near the local minimum at the bottom of the valley. Hence, it has a tendency to overshoot the valley floor.

For the ball rolling scenario, we would want to the ball to be smart enough know where it is going to be in the next step so that it can slow down before the hill slopes up again.

Nesterov momentum captures this notion by using the gradient at the projected future position. The update is given by,

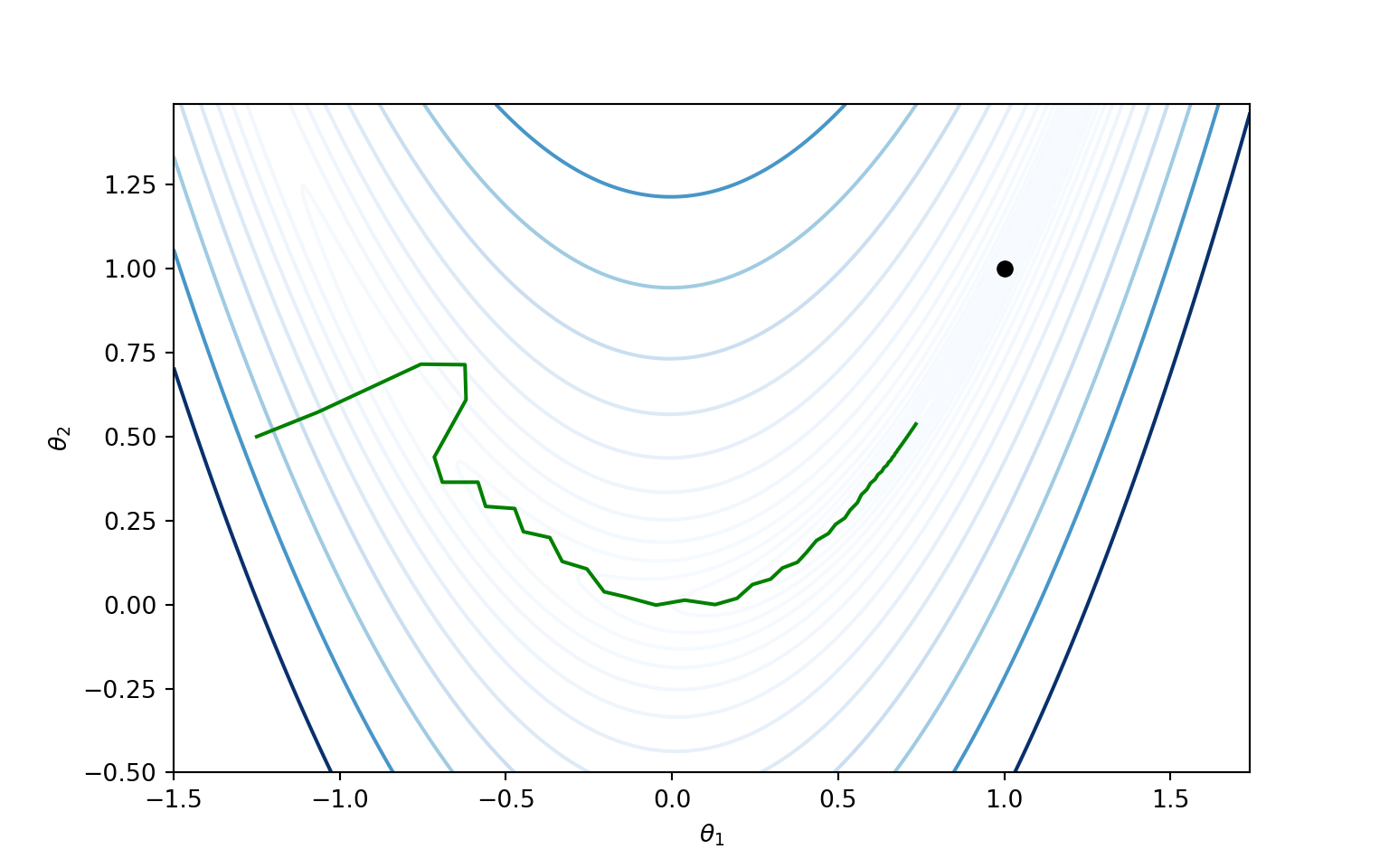

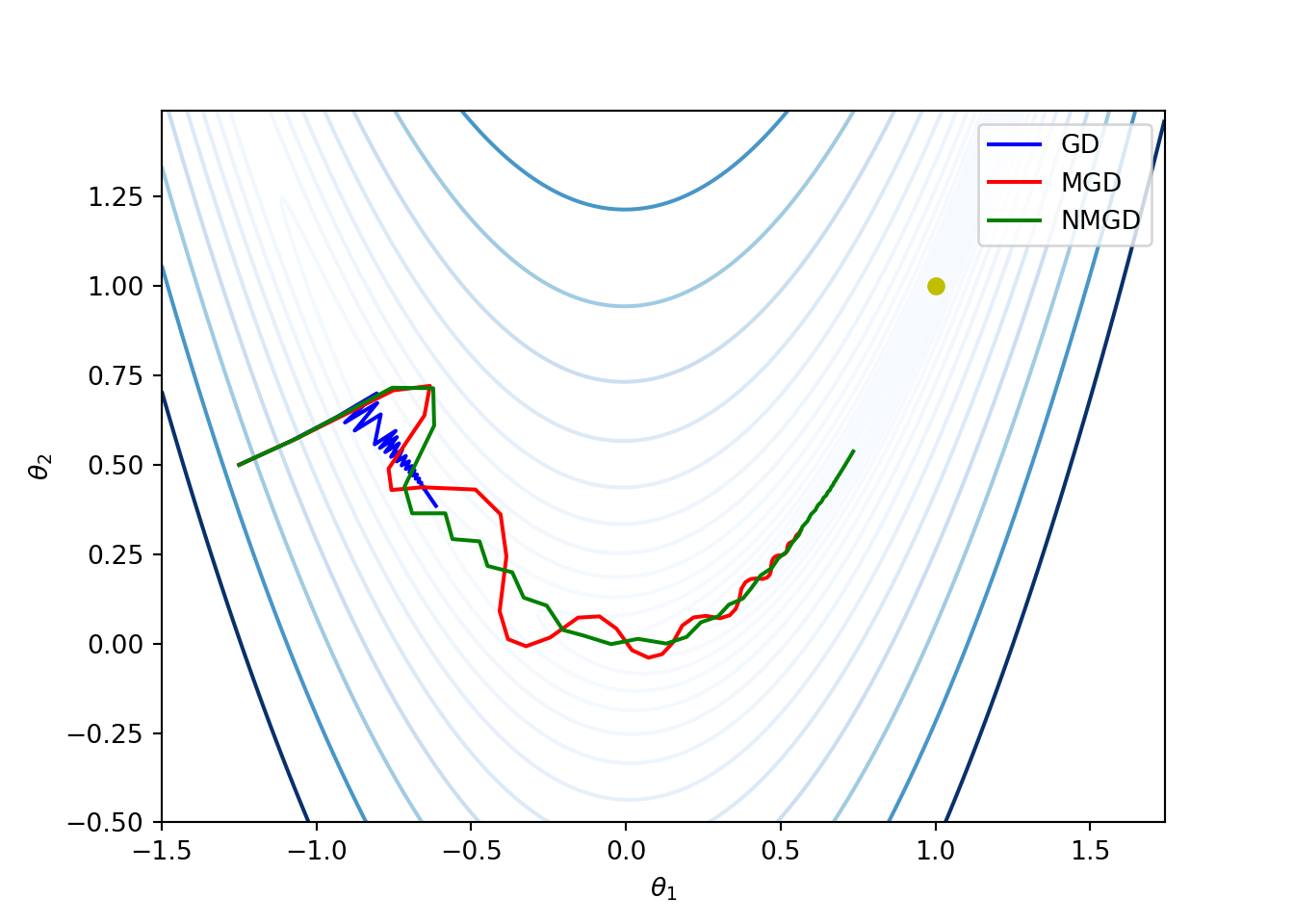

Example: We once again consider the Rosenbrock function given by (3.4). The following code compares the basic gradient descent, momentum, and Nesterov momentum methods. We start with and . The learning rate decreases as where . For momentum and Nesterov momentum methods, we have taken .

f = lambda t: (1 - t[0])**2 + 100*(t[1] - t[0]**2)**2

def grad(t):

dx = -2*(1 - t[0]) - 4*100*t[0]*(t[1] - (t[0]**2))

dy = 2*100*(t[1] - (t[0]**2))

return np.array([dx, dy])

def GradientDescent(f, grad, t, alpha, gamma, niter=100000):

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

t_seq = [t]

d_seq = [d]

for _ in range(niter):

alpha *= gamma

t = t + alpha*d

t_seq.append(t)

g = grad(t)

norm = np.linalg.norm(g)

d = -g/norm

d_seq.append(d)

t_seq = np.array(t_seq)

d_seq = np.array(d_seq)

return t_seq, d_seq

def MomentumGradientDescent(f, grad, t, alpha, gamma, beta, niter=100000):

v = np.zeros(t.shape[0])

t_seq = [t]

v_seq = [v]

for _ in range(niter):

g = grad(t)

norm = np.linalg.norm(g)

v = beta*v - alpha*g/norm

v_seq.append(v)

alpha *= gamma

t = t + v

t_seq.append(t)

t_seq = np.array(t_seq)

v_seq = np.array(v_seq)

return t_seq, v_seq

def NesterovMomentumGradientDescent(f, grad, t, alpha, gamma, beta, niter=100000):

g = grad(t)

norm = np.linalg.norm(g)

v = np.zeros(t.shape[0])

t_seq = [t]

v_seq = [v]

for _ in range(niter):

g = grad(t + beta*v)

norm = np.linalg.norm(g)

v = beta*v - alpha*g/norm

v_seq.append(v)

alpha *= gamma

t = t + v

t_seq.append(t)

t_seq = np.array(t_seq)

v_seq = np.array(v_seq)

return t_seq, v_seq

# Initialization

alpha_init = 0.2

gamma = 0.9

beta = 0.8

t_init = np.array([-1.25, 0.5])

niter = 100000

t1_range = np.arange(-1.5, 1.75, 0.01)

t2_range = np.arange(-0.5, 1.5, 0.01)

A = np.meshgrid(t1_range, t2_range)

Z = f(A)

plt.contour(t1_range, t2_range, Z, levels=np.exp(np.arange(-10, 6, 0.5)), cmap='Blues')t_seq_gd, d_seq_gd = GradientDescent(f, grad, t_init, alpha_init, gamma, niter)

plt.plot(t_seq_gd[:, 0], t_seq_gd[:, 1], '-b', label='GD')t_seq_mgd, d_seq_mgd = MomentumGradientDescent(f, grad, t_init, alpha_init, gamma, beta, niter)

plt.plot(t_seq_mgd[:, 0], t_seq_mgd[:, 1], '-r', label='MGD')t_seq_nmgd, d_seq_nmgd = NesterovMomentumGradientDescent(f, grad, t_init, alpha_init, gamma, beta, niter)

plt.plot(t_seq_nmgd[:, 0], t_seq_nmgd[:, 1], '-g', label='NMGD')plt.plot(1, 1, 'oy')plt.xlabel(r'$\theta_1$')plt.ylabel(r'$\theta_2$')plt.legend()plt.show()

3.5.6 Adagrad

Observe that both momentum and Nesterov momentum methods update all components of with the same learning rate.

The adaptive subgradient, or simply adgrad, adapts a learning rate for each component of so that the components with frequent updates have low learning rates and the components with infrequent updates have high learning rate. As a consequence, it increases the influence of components of with infrequent updates.

The update of each component in is as follows. where is the -th component of , is a small value of order to avoid division by zero, and is the learning rate parameter.

The adagrad method is less sensitive to the parameter , which is typically set to be .

Drawback: The key drawback of the adagrad is that each component is strictly non-deceasing. As a consequence the accumulated sum keeps increasing and hence the effective learning rate decreases during training, often making the effective learning rate infinitesimally small before convergence.

The first-order methods discussed below overcome this drawback.

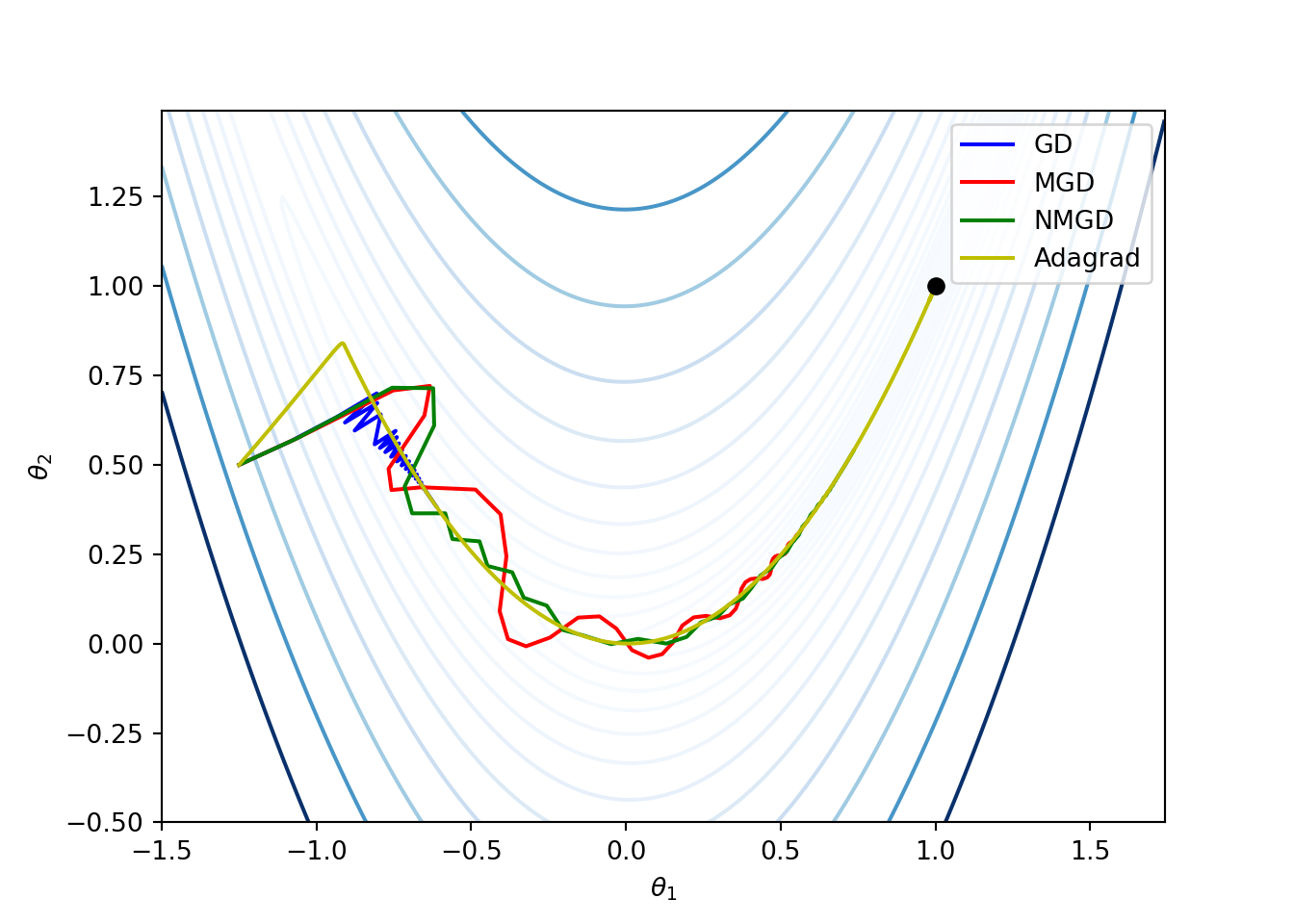

Example: The following python function implements the adagrad method for the earlier example on Rosenbrock function. The corresponding plot compares the other methods with the adagrad method. The parameters chosen are and .

def Adagrad(f, grad, t, alpha = 0.01, epsilon=10e-8, niter=100000):

t_seq = [t]

s = np.zeros(t.shape[0])

#while norm > 10**(-8):

for _ in range(niter):

g = grad(t)

norm = np.linalg.norm(g)

g = g/norm

s += np.multiply(g, g) # element-wise multiplication

d = -np.divide(g, epsilon + np.sqrt(s)) # element-wise division

t = t + alpha*d

t_seq.append(t)

t_seq = np.array(t_seq)

return t_seq

3.5.7 RMSprop

- RMSprop method comes from Lecture 6 of Geoff Hinton’s Coursera class.

Here, RMS stands for root mean square.

- RMSprop method overcomes the problem of monotonically decreasing learning rate by maintaining a decaying average of squared gradients. This average vector is updated according to where is the decay factor, typically taken to be , and denotes the element-wise product between vectors. Thus, the update of each element of is given by

If the initial value , then for each i,

Then the component-wise update of is given by where the learning parameter is typically set to be .

The expression is called decaying root mean square of the time series , and is denoted by . Then,

Exercise: Implement the RMSProp method for the Rosenbrock function considered earlier. Take , , and . Compare the performance with the adagrad method with and .

3.5.8 Adadelta

Adadelta method is an extension of Agagrad method and it overcomes the problem of monotonically of the learning parameter.

The authors of RMSprop noticed that the units in gradient descent, stochastic gradient descent, momentum, Adagrad methods do not match. To fix this they use an exponentially decaying average of the squared updates as follows.

In addition to (3.5), let be the average vector updated according to for each component of , where , where is also decay parameter close to and .

Then, in each iteration update the decaying average of the parameter vector by where .

The above expression eliminates the learning parameter completely.

3.5.9 Adam

The adaptive moment estimation, or simply adam, is a another method that adapts learning rates to each parameter .

In addition to using the exponentially decaying average of squared gradients (like RMSprop and Adadelta), the adam method also uses exponentially decaying average of gradients (like the momentum method).

Recall that the momentum method can be seen as a ball rolling down a slope. The adam method can be seen as a heavy ball with friction rolling down the slope.

The corresponding updates in each iteration of the adam method are:

and are estimates of the moment and the second moment of the gradient, respectively (hence the name of the method).

Since the initial values , the estimates and are biased towards zero, particularly when decay rates are small (i.e., and are close to 1).

Since and are close to , for small , and are biased towards . To eliminate this bias, the corrected (or unbiased) updates are

Finally, the parameter vector update is given by

where is again on the order of to avoid division by zero.

The authors propose that a good default choice to be , , and .

3.5.10 Hypergradient Descent

The learning rate decides how sensitive the descent method to the gradient, and it has a substantial influence on the performance of the algorithm.

The accelerated descent methods presented above are either extremely sensitive to the learning rate or go to great extent to adapt the learning rate during execution.

The goal of the hypergradient methods is to use derivative of the learning rate parameter for improving the optimizer performance. This method applies gradient descent on the learning rate parameter.

The update of the learning parameter consists of the partial derivative of the objective function with respect to the learning parameter: where is the hypergradient learning rate.

For gradient descent method,

Similarly, hypergradient descent idea can be applied to other gradient-based methods.

The key advantage of the hypergradient method is that it reduces the sensitivity of the algorithm to the hyperparameter.

3.6 Second-Order Methods

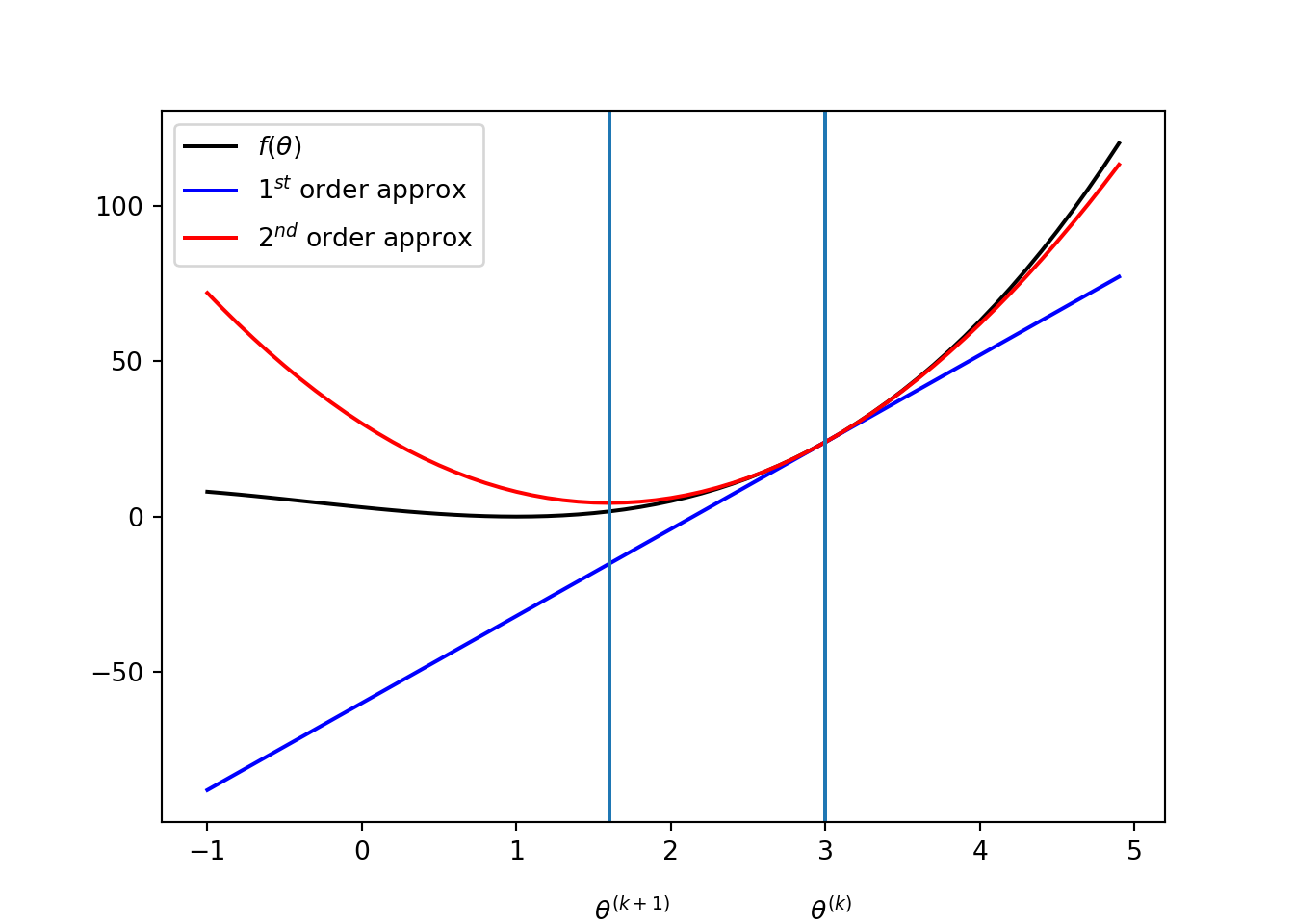

In the previous section on first-order methods, we used the gradient of the objective function in the first-order approximation. In this section, we present some well-known methods that use second order approximations of .

3.6.1 Newton’s Method

As we have seen in the first-order methods, objective function value and its gradient help us in finding the direction of the next step. However, this information does not imply how far we need to travel.

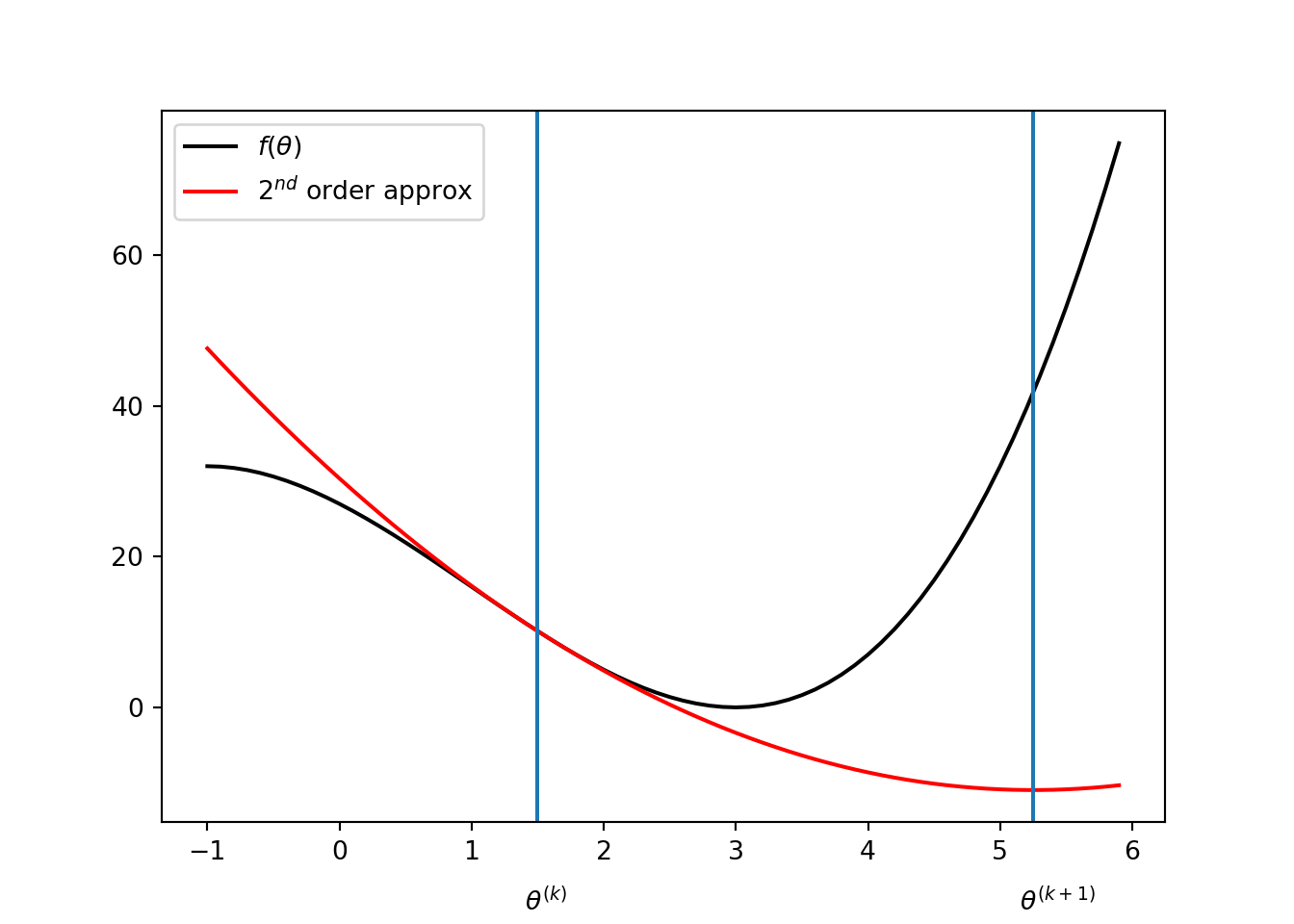

On the other hand, Newton’s method uses a quadratic approximation of the objective function and finds the next step size by minimizing the quadratic function.

Consider the univariate case. In the iteration, let be the quadratic approximation of the objective function given by

Then the update is given by setting the derivative of to zero: then the solution is, For instance, suppose that and . Then,

For multivariate objective functions, update of Newton’s method is very similar to the univariate case: gradient replaces the derivative and Hessian replaces the second-order derivative. The quadratic approximation is given by where and are, respectively, the gradient and Hessian of at . Then, the update can be obtained by taking gradient of : Therefore, the update is given by given that is non-singular (i.e., inverse exists).

Under certain conditions, the Newton’s method can exhibit a qudratic convergence, that is, there exists a constant such that for all , where is the limit of the sequence . In other words, the error in each iteration is proportional to the squared error of the previous iteration.

3.6.2 Instability of the Newton’s method

Update of involves decision by the second derivative . So, the update is undefined if .

It can be instable when the second derivative is very close to zero, because then can be too far from to make the quadratic approximation invalid.

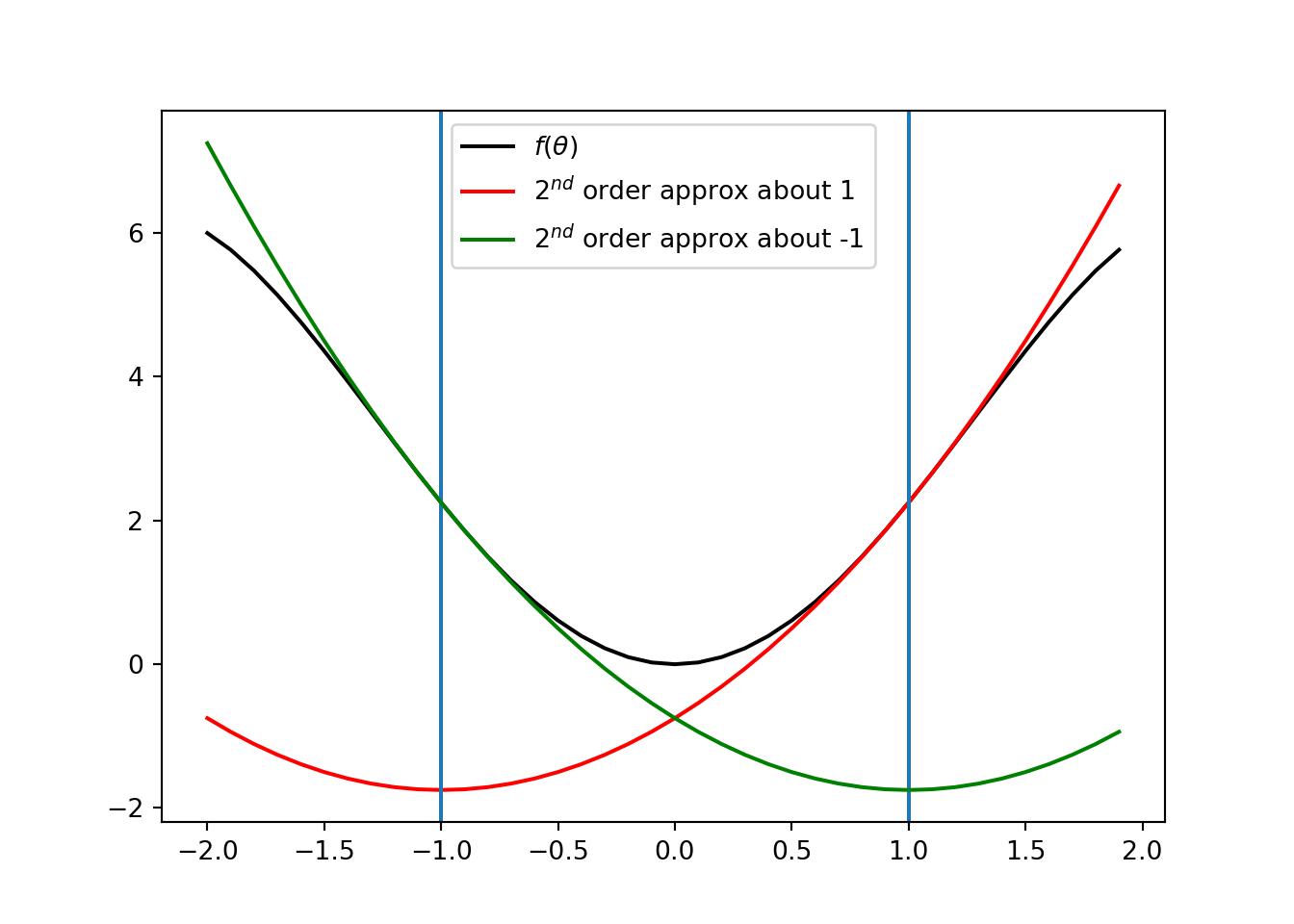

The following figures show situations where Newton’s method fail to work:

Overshoot: Consider and . Then, . It is clearly a overshoot.

Oscillation: Consider . If , then we get . That gives, . So, the Newton’s methods continue to oscillate between and .

3.6.3 Secant Method

Consider the univariate case. Implementation of Newton’s method requires both the first derivative and the second derivative . In many scenarios, we can have access to , but not .

The secant method is a modification of Newton’s method where the second derivative in each iteration is approximated by the last two iterations: That is, the updates in Secant method are given by

This method may take more iterations for convergence than Newton’s method and it also suffers from the problems associated with Newton’s method.

3.6.4 Quasi-Newton Methods

Consider multivariate cases where computing the inverse of Hessian is difficult or impossible.

As in the case of the secant method, quasi-Newton methods modifies the Newton’s method by approximating the inverse of Hessian.

The general update form the quasi-Newton methods is where is scalar step factor and is an approximation of at . That is, the descent direction is given by

Quasi-Newton methods typically starts with being the identity matrix.

To simplify the notation, define and recall that

We now discuss three quasi-Newton methods.

Davidon-Fletcher-Powell (DFP): This method uses the following update on :

where denotes the transpose operation.

-

The update of in (3.6) has the following properties:

If is symmetric and positive definite, is symmetric and positive definite in every iteration ;

If has a quadratic form, that is, then . Thus, for this case, DFP and conjugate gradient methods have the same rate of convergence;

Storing and updating can be computationally demanding for high-dimensional problems, compared to conjugate gradient method;

Exercise: Prove properties (i) and (ii).

Broyden-Fletcher-Goldfarb-Shanno (BFGS): This method is an alternative to DFP method, and the update is as follows: The BFGS is known to work better than the DFP method with approximate line search. However, storing matrix is challenging for higher dimensional problems.

Limited-memory BFGS (L-BFGS): To remedy the above issue with BFGS, limited memory BFGS (L-BFGS) approximates BFGS by storing the last of and , rather than the storing the entire Hessian matrix.

In each iteration, the procedure has two steps to find the descent direction when current design point is .

First, backward procedure: Take , and define for each backward, Then forward procedure: Take and define for , Finally, the descent direction is given by

Exercise: Consider the Rosenbrock function mentioned earlier. Impliment and compare DFP, BFGS, and L_BFGS (with ).

Page built: 2021-03-04 using R version 4.0.3 (2020-10-10)